Today, I’m going to discuss intermediate superintelligence: the point at which AI begins to modestly exceed human capability across almost all economically meaningful tasks[1].

Why explore superintelligence, when current AI systems still struggle with fundamentals such as memory, complex reasoning, and hallucinations? Well, in order to prepare for the future, we need some idea of the timeline on which AI will develop. In order to estimate that timeline, we need some idea of how much contribution AI will make toward its own development. And that, in turn, depends on the relationship between intelligence and productivity. What happens when AIs are almost as smart as people? Just as smart? Smarter?

Superintelligence is the idea that the intelligence spectrum does not top out at the level of human beings, and we should expect AIs to develop capabilities surpassing anything we’ve seen from people. (In “Get Ready For AI To Outdo Us At Everything”, I presented some arguments that this will indeed happen at some point.) Discussions of superintelligence tend to focus on the endgame: ultra-sophisticated systems that are to humans as humans are to mice. I believe that it’s also important to consider the intermediate period, where AIs are just beginning to outstrip us at open-ended tasks, especially AI research. Two arguments for focusing on this intermediate period:

The deadline for us to safely manage systems that are smarter than us will be the date at which we begin to achieve intermediate superintelligence.

Much of the critical work for ensuring safety will likely take place during this period. That is where we’ll be learning to use general-purpose AIs to help with AI safety, getting practice with alignment techniques when it really matters, and experiencing “wake-up calls” (instances of AI misbehavior that are serious enough to motivate action, but hopefully not so serious as to be unrecoverable) with the potential to spur global action.

How Does Intelligence Affect Productivity?

In any profession, some folks accomplish more than others; in software engineering, this is the origin of the term “10x developer”. Individual variations in productivity are the result of many factors, such as focus, interpersonal skills, opportunity, and experience with the specific type of work to be done. However, raw intelligence, or talent, plays an important role.

Here’s a model for thinking about the relationship of intelligence to productivity. Any complex task needs to be broken down into subtasks, which are broken down further, until eventually you arrive at tasks that are simple enough to carry out in a single intuitive step[2].

There are usually multiple ways of carrying out each step. If you are given a task that is easy for you, you will likely accomplish it quickly, and produce a good result. If given a difficult task, you may need multiple attempts, and the final result might not be so good. If the task is sufficiently difficult, you might be unable to accomplish it at all.

For complex tasks, these variations are compounded. A programmer lacking in talent or experience might come up with an overly complex plan for breaking down a project. Then they might struggle to accomplish each subtask. If their plan includes twice as many steps, and they take twice as long to accomplish each step, then the overall project will take four times as long.

By similar reasoning, if their system design produces a program with twice as many components, and their implementation of each component uses twice as much processing time, the resulting program may take four times as long to execute. Other aspects of quality may also suffer.

In short, a talented worker might be able to find a shorter path, travel at higher speed, locate a better destination, or all of the above. And for a large-scale project, encompassing many people over the course of years, the effects will compound over much more than two levels. This helps explain why large projects at dysfunctional organizations can go years behind schedule, and a single talented individual can sometimes outperform a team of mediocrities. It may also help to explain why GPT-3 is able to easily accomplish tasks that were completely beyond GPT-2, and similarly for GPT-4 vs. GPT-3: an incremental increase in ability can move a task from “unachievable” to “straightforward”.
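To make the compounding concrete, here’s a toy model in Python. It’s a sketch under simplifying assumptions of my own: every task splits into the same number of subtasks at each planning level, and every single-step task takes the same amount of time. The function names and the numbers are purely illustrative.

```python
def leaf_steps(levels: int, branching: int) -> int:
    """Count the single-intuitive-step tasks in a plan `levels` layers deep,
    assuming (for simplicity) every task splits into `branching` subtasks."""
    if levels == 0:
        return 1
    return branching * leaf_steps(levels - 1, branching)


def project_time(levels: int, branching: int, time_per_step: float) -> float:
    """Total time for the project if each leaf step takes `time_per_step` units."""
    return leaf_steps(levels, branching) * time_per_step


# Hypothetical workers: the weaker one's plans have twice as many subtasks
# at every level, and each step takes them twice as long.
for levels in (1, 2, 3, 4):
    strong = project_time(levels, branching=3, time_per_step=1.0)
    weak = project_time(levels, branching=6, time_per_step=2.0)
    print(f"{levels} level(s): weaker worker takes {weak / strong:.0f}x as long")
```

With a single level of planning this reproduces the factor of four from the programmer example; each additional level of planning doubles the gap again (8x, 16x, 32x).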

Example: The Fly And the Bicycles

As an example of the compounding nature of skill, consider the following classic math puzzle[3]:

Two bicyclists are 20 miles apart and head toward each other at 10 miles per hour each. At the same time a fly traveling at a steady 15 miles per hour starts from the front wheel of the northbound bicycle. It lands on the front wheel of the southbound bicycle, and then instantly turns around and flies back, and after next landing instantly flies north again. Question: what total distance did the fly cover before it was crushed between the two front wheels?

There are at least three different ways of approaching this question[4]. The first approach requires multiple steps (no need to actually read them):

1. The fly and oncoming bicycle start out 20 miles apart, and are converging at a combined 25 miles per hour. The fly is traveling at 15 mph, so it will fly 15/25ths of the distance – i.e. 20 * 15/25, or 12 miles – before meeting the bicycle.

2. At this point, each bicycle has gone 8 miles (20 * 10/25), so the two bicycles combined have traveled 16 miles. They are now 4 miles apart.

3. The new 4-mile separation is 1/5th of the original separation, so the fly’s next leg will be 1/5th as long as the 12-mile initial trip.

4. Each subsequent leg will be 1/5th as long as the preceding leg. In total, the distance traveled by the fly will be 12 * (1 + 1/5 + 1/5² + 1/5³ + …).

5. To work this out, we need the sum of the infinite series. A standard formula tells us that for any x greater than 1, the series 1 + 1/x + 1/x² + … sums to x/(x − 1). In our case, x = 5, so the series sums to 5/4, and the fly travels 12 * 5/4 = 15 miles.
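For the skeptical, here’s a short Python sketch that grinds through the first approach leg by leg, using exact fractions, and then applies the closed-form sum. The constant and function names are my own; the speeds and distances are the ones from the puzzle.

```python
from fractions import Fraction

BIKE_SPEED = Fraction(10)  # mph, each bicycle
FLY_SPEED = Fraction(15)   # mph
START_GAP = Fraction(20)   # miles between the bicycles at the start


def fly_legs(n_legs: int) -> list[Fraction]:
    """Length of each of the fly's first n_legs legs, simulated one at a time."""
    gap = START_GAP
    legs = []
    for _ in range(n_legs):
        # The fly and the oncoming bicycle close the gap at a combined speed.
        hours = gap / (FLY_SPEED + BIKE_SPEED)
        legs.append(FLY_SPEED * hours)
        # Meanwhile, both bicycles have moved toward each other.
        gap -= 2 * BIKE_SPEED * hours
    return legs


def closed_form_total() -> Fraction:
    """Sum the whole geometric series using x/(x - 1)."""
    first, second = fly_legs(2)
    x = first / second          # each leg is 1/x as long as the one before
    return first * x / (x - 1)  # 12 * 5/4


print(fly_legs(4))                 # 12, 12/5, 12/25, 12/125 (as Fractions)
print(float(sum(fly_legs(20))))    # partial sum, just shy of 15
print(closed_form_total())         # 15
```

The partial sums creep up on 15 without ever quite reaching it, which is exactly why the first approach needs the infinite-series formula.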

Here’s a second solution:

1. The bicycles are approaching one another at a combined 20 miles per hour.

2. Since they start out 20 miles apart, they will take one hour to meet.

3. The fly travels 15 miles per hour, so in one hour, it will travel 15 miles.

And a third:

1. The bicycles are approaching one another at a combined 20 miles per hour.

2. The fly travels at 15 miles per hour, so it will cover 15/20ths as much distance as the two bicycles combined. Together the bicycles cover the full 20 miles, so the fly travels 15 miles.
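The two shortcut approaches each reduce to a line of arithmetic. Here they are as a Python sketch; the constant and function names are mine, and exact fractions keep the answer free of rounding error.

```python
from fractions import Fraction

BIKE_SPEED = Fraction(10)  # mph, each bicycle
FLY_SPEED = Fraction(15)   # mph
START_GAP = Fraction(20)   # miles between the bicycles at the start

CLOSING_SPEED = 2 * BIKE_SPEED  # the bicycles approach at a combined 20 mph


def second_approach() -> Fraction:
    """Time until the bicycles meet, multiplied by the fly's speed."""
    hours_to_meet = START_GAP / CLOSING_SPEED  # 1 hour
    return FLY_SPEED * hours_to_meet


def third_approach() -> Fraction:
    """The fly covers FLY_SPEED / CLOSING_SPEED as much ground as the two
    bicycles combined, and together the bicycles close the full gap."""
    return START_GAP * FLY_SPEED / CLOSING_SPEED


print(second_approach(), third_approach())  # 15 15
```

Neither version ever looks at an individual leg of the fly’s trip, which is the whole trick.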

The time it takes for you to solve the puzzle will depend on which plan you choose. If you are clever, or experienced with this sort of puzzle, you may hit on one of the short solutions. Otherwise, you might try to just grind out the scenario, leading you to the first approach.

Once you have a plan, your solution time will also depend on the time you need to carry out each step. For instance, step 5 of the first plan relies on the formula for summing a geometric series. If you don’t know that formula, you’ll have to work it out, which will take quite a bit longer. If you don’t have the right sort of math experience, you might not be able to work it out at all. So the possibilities for this simple-looking puzzle range from two easy steps, to five steps of which one may be quite difficult, to complete failure.

There’s a well-known story about this puzzle being posed to the famously brilliant mathematician John von Neumann. Supposedly, he immediately replied with the correct answer, leading to the following exchange[5]:

“Oh, you’ve heard the trick before”, said the disappointed questioner. “What trick?” asked the puzzled Johnny. “I simply summed the infinite series”.

I suppose that if you’re von Neumann, you can sprint through the complicated 5-step solution in the time anyone else would need for two steps. I’m not sure what this says about my theory of the compounding effects of talent.

Complex Projects Are Affected Most

The impact of intelligence depends on the complexity, difficulty, and novelty of the project:

A complex project translates into multiple layers of planning and execution, each of which introduces compounding opportunities for better or worse results.

If a task is difficult, some workers may not be able to complete it at all. Others will be stretched to the edge of their ability, struggling to find a single solution. A more talented individual might find several solutions, and thus have the luxury of choosing the best one.

For novel problems, it’s not possible to fall back on experience, nor to rely on solutions others have found in the past. (This is especially visible with current LLMs, which rely almost entirely on reproducing patterns from their training data. For instance, the new crop of programming assistants are mostly useful for producing minor adaptations of well-known coding patterns.)

Thus, if we manage to create superintelligent AIs, we might expect them to have the greatest impact on complex, difficult, novel problems, such as those found at the frontier of science and engineering – notably including AI research.

Actually, developing new AI models requires many different sorts of work[6]. Designing and refining neural net architectures, gathering data sets for training and validation, implementing bits of software, evaluating the results… certainly there is ample scope for creative leaps, but also a lot of routine work. If we had a superintelligent AI, I don’t know whether its productivity advantage on AI development would be more like a factor of three, or a factor of a thousand.

In the factor-of-three scenario, progress is mostly tied to a steady succession of small successes, where you need to put in a lot of straightforward work before you have the information needed to support the next breakthrough.

In the other scenario, we lowly humans are routinely failing to spot breakthrough opportunities – opportunities that could have been identified using the data available – and so a team of supergenius AIs could be skipping ahead on a much more efficient path, squeezing more progress out of each training experiment.

I suspect that we should be prepared for either scenario.

Is There A Ceiling On Intelligence?

How much room is there for AIs to surpass human ability?

When it comes to multiplying large numbers, computers beat us by an astronomical factor.

For chess, the best computer will beat the best human pretty much every time. But the gap is not nearly so large as for multiplication. Computers, given vast amounts of time to analyze the opening game, wind up selecting mostly the same openings that people have been using for decades. If a computer had to give odds of a queen, it could not beat the human champion. Computers have a big advantage, but it’s not a space-laser-vs-wooden-club advantage. Maybe more like an assault rifle vs. a musket; you could lose if you weren’t taking the competition seriously.

When it comes to software engineering, or writing a novel, computers can’t yet hold a candle to a competent human. They can take a stab at small works, but they can’t touch anything large enough to be nontrivial, nor can they handle novel problems[7].

It seems to me that the more open-ended and complex the problem domain, the more difficult it is for computers to tackle, and the smaller their advantage if and when they do exceed human ability. Is this a temporary phenomenon, reflecting the intermediate state of our progress toward sophisticated AI? Or is there a fundamental complexity principle at work? Perhaps, for complex problems, there’s a point of diminishing returns to intelligence: a combinatorial explosion makes it harder and harder to exceed a certain level of ability, and humans are already approaching that level, meaning that there’s not much room for AIs to outclass us. I recently talked about how a critical component of intelligence is selecting, from the vast amount of information in your long-term memory, the right facts to focus on at any given moment. Perhaps this becomes exponentially more difficult as you climb the intelligence scale.

Or perhaps an AI that’s tuned for software engineering will be able to leave our hunter-gatherer brains in the dust. I think it’s too soon to tell.

Intelligence Isn’t Always the Limiting Factor

It’s not always possible to accelerate a project by working smarter – nor by working harder, for that matter. An assembly line worker can’t move any faster than the assembly line. A customer support agent can only converse as quickly as the customer they’re supporting. A crane operator needs a substantial level of skill, but their productivity will be capped by the performance of the crane.

For AI research, one major constraint is that it simply takes a lot of time and money – in the form of computing hardware – to train a cutting-edge model. A smarter researcher would presumably be able to squeeze more progress out of each training experiment, but hardware is still a limiting factor, to the point that I’ve read that companies with a high GPU-to-person ratio have an easier time recruiting AI researchers.
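One standard way to quantify this kind of ceiling comes from computing rather than from anything specific to AI research: Amdahl’s law, which gives the overall speedup when only part of a job can be accelerated. Here’s a Python sketch; treating “the part that smarter work can shrink” as a single fixed fraction is my own simplifying assumption, and the example numbers are illustrative, not estimates.

```python
def overall_speedup(accelerable_fraction: float, speedup: float) -> float:
    """Amdahl's law: overall speedup when only part of the work gets faster.

    accelerable_fraction: share of the original time that smarter work can
        shrink (a hypothetical split; e.g. design and analysis, as opposed
        to waiting on training runs).
    speedup: how many times faster that share becomes.
    """
    if not 0.0 <= accelerable_fraction <= 1.0:
        raise ValueError("accelerable_fraction must be between 0 and 1")
    remaining_time = (1.0 - accelerable_fraction) + accelerable_fraction / speedup
    return 1.0 / remaining_time


for fraction in (0.5, 0.9, 0.99):
    for factor in (3, 1000):
        result = overall_speedup(fraction, factor)
        print(f"{fraction:.0%} accelerable, {factor}x faster -> {result:.1f}x overall")
```

If half of a project’s time is spent waiting on training runs that no amount of cleverness can shorten, even a thousandfold speedup on the other half yields less than a factor of two overall.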

What Can We Learn From Human Geniuses?

History is full of individuals who made enormous contributions to progress. With advanced AI, will we be able to reproduce this phenomenon? Will we be able to mass-produce it?

I see two questions here. First, to what extent are standout accomplishments the result of talent, versus circumstance? Second, if we could instantiate a million genius AIs, is there room for them all to have genius-level impact on a single project? Or would there be diminishing returns?

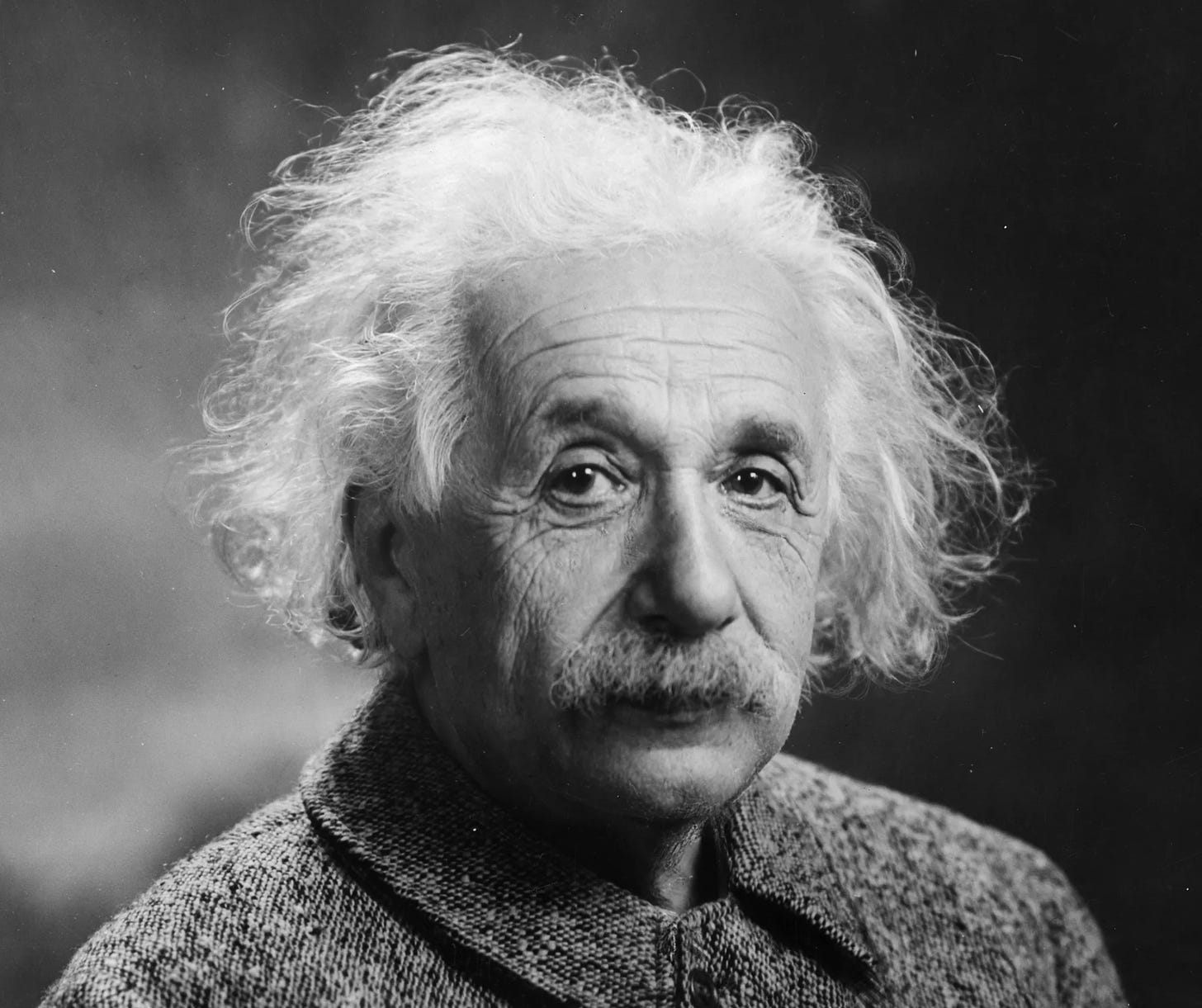

Regarding the first question, my friend Tony supplied a couple of nice examples that support the “talent” hypothesis: Darwin’s theory of evolution, and Einstein’s theory of general relativity. Darwin’s work was famously inspired by observations made during his time in the Galapagos Islands, but the Earth is entirely populated by the results of natural selection, and there must have been many opportunities throughout history for someone else to connect the dots. As for Einstein, apparently the general opinion among physicists is that there was enough experimental evidence relating to special relativity that someone else would eventually have hit upon it – though they might not have come up with a formulation as elegant as Einstein’s. But general relativity was not required to explain the observations available at the time, and the feeling is that if Einstein had not made an intuitive leap, general relativity might not have been discovered until much later.

It’s also worth noting that both Darwin and Einstein made many other significant contributions. For instance, over a short period in 1905, Einstein wrote a series of papers which contained not only the special theory of relativity, but also the equivalence of mass and energy (the famous E=mc²), and explanations of both Brownian motion and the photoelectric effect (one of the early milestones of quantum physics). It’s clear that something special was going on with that guy, whether it was intelligence, creativity, or a mixture of the two. Perhaps there’s not much difference; creativity seems to have something to do with pulling together the right pieces of information at the right time, which seems closely related to intelligence.

That leads us to the second question. Suppose that, using historical examples like Darwin and Einstein as an existence proof, we develop an AI that is similarly gifted. What would be the impact of letting a million copies of that AI team up on a single project?

I am sure that multiple Einsteins would be better than one, but eventually there would be diminishing returns; they would inevitably spend much of their time doing routine work, or waiting for experimental results. This comes back to the earlier observation that intelligence is not always the limiting factor for progress. It’s hard to say exactly how much difference a million Einsteins would make; perhaps the impact would be transformative, perhaps merely significant.

AI Advantages Won’t Just Be About Intelligence

We can expect AIs to have a number of productivity advantages unrelated to intelligence. We should be able to train them to be relentlessly focused and persistent. They won’t get tired or sick, or have personal or family issues. If we’re able to align them properly, they won’t play politics, cover up problems, or otherwise put their own interests ahead of the goal.

AIs will have more options for communicating with one another than humans do. Sure, we’ll probably give them the equivalent of chat, email, and an internal wiki. But we may also find ways for them to communicate through high-bandwidth neural channels, or even share memories directly. They’ll also be able to integrate with other software tools, from calculators to databases.

AI teams won’t ever bottleneck on a particular team member. Sometimes there’s one person who has a particular expertise, or deep knowledge of some aspect of the project, and that person becomes a limiting factor. On a team of AIs, you could simply clone the relevant AI instance, by making a copy of the memories that make it uniquely valuable.

Rolled together, these factors might make AIs several times more productive than people, even assuming equivalent intelligence and experience.

Summing Up: What Might Intermediate Superintelligence Look Like?

AI intelligence may eventually reach heights which lie beyond a singularity, where we simply cannot predict the implications. However, there will be an important transition period of intermediate superintelligence, where we can at least try to make predictions. This transition will also be a critical make-or-break period for addressing AI risks.

We should expect a fairly steep relationship between an AI’s ability level and its performance on a given task. If a task is too difficult, the AI will come up with a complex plan, and then struggle to carry out that plan at all; a more powerful AI might come up with an elegant plan which it can execute quickly. Thus, as we’ve already seen with GPT-3 and GPT-4, AI may go from incompetent to highly competent in a single generation.

The transition to competence is determined by the AI’s ability level relative to the difficulty of the task in question. As a result, we should be able to see AI competence climbing a ladder of engineering tasks; AI won’t conquer all aspects of its own development, let alone all human capabilities, in a single generation. Complex, novel tasks that require multiple levels of planning may be the last to become feasible, and exhibit the largest impact when they do.
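A crude way to see why competence might arrive suddenly, and why long, multi-step tasks would be the last to fall: suppose a task requires n steps that must all succeed, each independently succeeding with probability p. That independence assumption is mine, and real work allows retries and recovery, so treat this Python sketch as an illustration of the shape of the curve rather than a prediction.

```python
def task_success_probability(step_success: float, n_steps: int) -> float:
    """Chance that all n_steps steps succeed, assuming each one succeeds
    independently with probability step_success and nothing can be retried."""
    if not 0.0 <= step_success <= 1.0:
        raise ValueError("step_success must be a probability")
    return step_success ** n_steps


step_counts = (1, 10, 50, 200)
for p in (0.9, 0.99, 0.999):
    cells = ", ".join(
        f"{n} steps: {task_success_probability(p, n):.3f}" for n in step_counts
    )
    print(f"per-step success {p}: {cells}")
```

Raising per-step reliability from 0.9 to 0.99 barely matters for a one-step task, but for a 50-step task it moves the success rate from well under 1% to roughly 60%.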

AI research entails a mix of shallow and deep problems, and is often limited by external factors, such as the availability of training data or the need for large GPU clusters to perform experiments. This will dilute the ability of AIs to accelerate their own development. Furthermore, we might find that further progress in AI yields diminishing gains in intelligence, where a combinatorial explosion of complexity makes it increasingly expensive to push very far past the ability of a talented human.

The upshot is that I expect the period of intermediate superintelligence to be messy, playing out over a period of years (if not decades), and not necessarily ending in an explosive transition to transcendent AGI. During this intermediate period, we should have the opportunity to practice managing AIs that are (at least in some important ways) smarter than we are, without yet being utterly beyond us.

Thanks to Tony Asdourian and Toby Schachman for suggestions and feedback.

[1] More precisely, I refer to ability at complex, open-ended, real-world tasks, such as designing better AIs. This is quite different from the self-contained tasks that LLMs can tackle today. See “I’m a Senior Software Engineer. What Will It Take For An AI To Do My Job?”.

[2] In the terminology of Thinking, Fast and Slow, the smallest subtasks are those that can be handled by a single iteration of System 1.

[4] Here I am borrowing from https://thatsmaths.com/2018/08/23/the-flight-of-the-bumble-bee/.

[5] Here I almost get to use “ibid” for, I believe, the first time in my life, but sadly there is an intervening footnote. So I’ll have to spell it out: this quote is from the aforementioned book on John von Neumann. I confess that I briefly considered rearranging the discussion to make “ibid” work.

[6] In a recent interview, one of the authors of a Google paper describing a new AI system specialized for medical tasks notes that the paper has 50 authors, of whom just 8-10 are ML experts.

[7] Aside from certain sorts of “do X with constraint Y” party tricks, but I think people are already getting tired of reading an epic poem about bacon as composed by Homer Simpson, or whatever.