I Don't See How Comparative Advantage Applies In a World of Strong AI

Economic models are based on simplifying assumptions that may not hold in an AI-saturated future

EDIT: I’ve written a more carefully argued, but very terse, version of these ideas here. What follows below is much more accessible; the link is a better and more thorough argument. I should also note that, while the purpose of this piece is to rebut one of Noah Smith’s posts, I am a paying subscriber to his substack and enjoy it thoroughly.

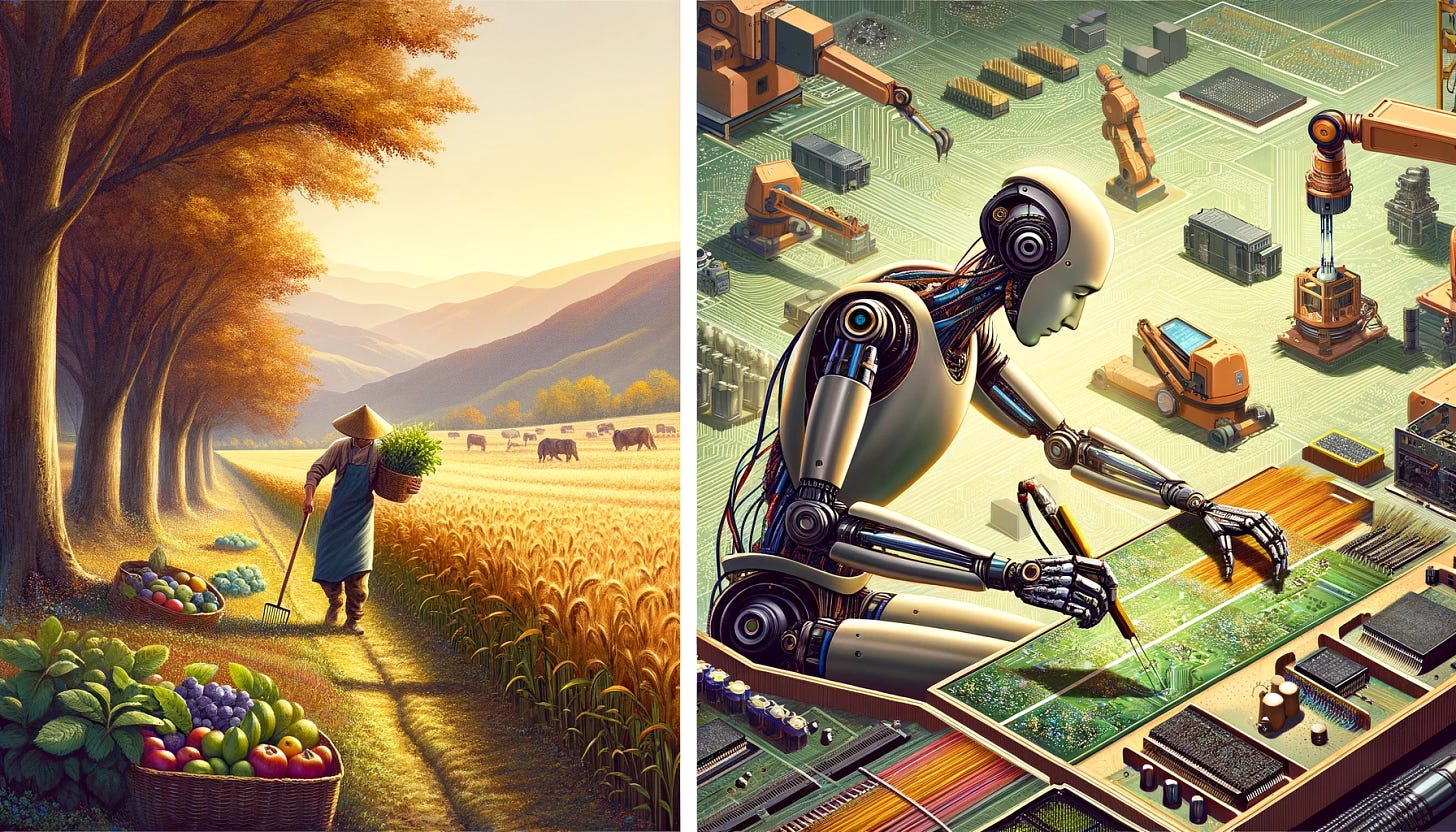

If you follow discussions of AI, you’ve likely encountered the idea that we don’t need to worry about AI taking people’s jobs: no matter how advanced AI might become, people will always find work. Progress has eliminated most jobs in categories from agriculture to telephone switchboard operation, but new jobs have always emerged. AI, some folks argue, will be no different than previous technological revolutions in this regard.

In his latest post, “Plentiful, high-paying jobs in the age of AI”, Noah Smith clearly lays out the primary economic argument for this view, which gives me a nice opportunity to explain why I think it’s bunk.

I believe that Smith is too wrapped up in the mathematical beauty of the concept of comparative advantage to realize that, like most models, it relies on simplifying assumptions that break down when you move too far away from the situation in which the model was originally developed.

The Comparative Advantage Argument

Smith makes a strong version of the continued-employment claim:

I accept that AI may someday get better than humans at every conceivable task. That’s the future I’m imagining. And in that future, I think it’s possible — perhaps even likely — that the vast majority of humans will have good-paying jobs, and that many of those jobs will look pretty similar to the jobs of 2024.

Smith’s argument is based on a principle of economics called “comparative advantage”, which he explains as follows:

Comparative advantage … means “who can do a thing better relative to the other things they can do”. So for example, suppose I’m worse than everyone at everything, but I’m a little less bad at drawing portraits than I am at anything else. I don’t have any competitive advantages at all, but drawing portraits is my comparative advantage.

He then notes that, by definition, everyone “always has a comparative advantage at something”, and they are likely to wind up employed doing that thing, even if there are lots of other people who could do it better:

Somewhere in the developed world, there is probably some worker who is worse than you are at every single possible job skill. And yet that worker still has a job. And since they’re in the developed world, that worker more than likely earns a decent living doing that job, even though you could do their job better than they could.

He explains that this is because of “producer-specific constraints”: to get a job, you don’t need to be the best in the world at that job; you just need to be the best person available (at the salary you’re willing to accept).
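To make the definition concrete, here is a minimal numerical sketch. The two producers, the two tasks, and every productivity figure are invented for illustration; none of them come from Smith's post. The sketch computes each producer's opportunity cost and shows that a producer who is worse at everything can still have the lower opportunity cost in one task.

```python
# Hypothetical output per hour for two producers and two tasks.
# All numbers are made up for illustration.
OUTPUT_PER_HOUR = {
    "expert": {"portraits": 10.0, "reports": 20.0},
    "novice": {"portraits": 2.0, "reports": 1.0},
}


def opportunity_cost(producer: str, task: str, other_task: str) -> float:
    """Units of other_task given up to produce one unit of task."""
    rates = OUTPUT_PER_HOUR[producer]
    return rates[other_task] / rates[task]


def comparative_advantage(task: str, other_task: str) -> str:
    """Return the producer with the lowest opportunity cost for task."""
    return min(
        OUTPUT_PER_HOUR,
        key=lambda p: opportunity_cost(p, task, other_task),
    )


if __name__ == "__main__":
    for producer in OUTPUT_PER_HOUR:
        cost = opportunity_cost(producer, "portraits", "reports")
        print(f"{producer}: one portrait costs {cost:.1f} reports")
    print("portraits ->", comparative_advantage("portraits", "reports"))
    print("reports ->", comparative_advantage("reports", "portraits"))
```

The expert out-produces the novice at both tasks, yet each portrait costs the expert more forgone reports than it costs the novice, so in this toy setup the novice holds the comparative advantage in portraits.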

To be clear, I am not criticizing any of the above. Comparative advantage is a useful concept, and Smith explains it clearly. The problems creep in when he extrapolates out to a world where AIs are better than humans at everything. He notes that there will likely always be some constraint – say, chip manufacturing – on the total number of AIs. And so all of the available AIs will be busy doing valuable work, leaving room for the poor incompetent humans to find jobs as well:

…because of comparative advantage, it’s possible that many of the jobs that humans do today will continue to be done by humans indefinitely, no matter how much better AIs are at those jobs. And it’s possible that humans will continue to be well-compensated for doing those same jobs.

Some Obvious Holes

It took me like two minutes to come up with some obvious holes in this idea:

Lower quality work. In the world we’re talking about, AIs are likely “better” than humans not only in being able to do things faster, but also at higher quality and more reliably. I’m not likely to want to be operated on by a human surgeon, when an AI surgeon would have a higher success rate. We’re unlikely to put a person behind the wheel of a delivery van, if AIs have become much safer drivers. In general, we’re unlikely to put the inputs for any given job into the hands of a human, when an AI can give us a better product from those same inputs.

Transactional overhead. For many jobs, an AI will be able to get the job done in less time than it would take me merely to explain what I want to a person. Not to mention all of the overhead of finding the right person, agreeing on a price, and so forth.

Adaptation. Even if there are some jobs at which a human could find gainful employment, the nature of those jobs might change too quickly for people to keep up. (Smith notes this, but somehow doesn’t allow it to influence his primary conclusion.) In a world in which we have universal AI and it’s so valuable that we’re building more AIs as fast as we possibly can, rapid change seems likely.

As I noted earlier, these are all poking at hidden assumptions of the “comparative advantage model” – assumptions that have been fairly safe in the past, but will break down in a world that is awash in hyper-competent AI.
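The "lower quality work" hole can be sketched as a toy calculation. Everything here is an assumption for illustration: the function name, the idea of scoring a job by the value of a successful outcome times a success rate, and all of the numbers. The point is only that when the inputs or outcomes at stake are valuable, the quality gap can exceed what the AI costs, and then no non-negative wage makes the human worth hiring.

```python
def max_viable_human_wage(outcome_value: float,
                          ai_success_rate: float,
                          human_success_rate: float,
                          ai_cost: float) -> float:
    """Highest wage at which hiring the human is no worse than using the AI.

    A negative result means the human is not worth hiring even for free.
    """
    # Expected value lost by giving the job to the less reliable worker.
    quality_gap = (ai_success_rate - human_success_rate) * outcome_value
    return ai_cost - quality_gap


if __name__ == "__main__":
    # Hypothetical high-stakes job (think surgery): the gap dwarfs the AI's cost.
    high_stakes = max_viable_human_wage(1_000_000, 0.99, 0.95, 10_000)
    # Hypothetical low-stakes job: the gap is small, so a human wage is viable.
    low_stakes = max_viable_human_wage(100, 0.99, 0.95, 10)
    print(f"high stakes: {high_stakes:,.0f}")
    print(f"low stakes: {low_stakes:,.0f}")
```

Under these made-up numbers the human keeps a job only where little is at stake, or where the AI's cost is high enough to cover the quality gap.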

The Problem of Limited Resources

Smith notes that economic theory does provide for a few mechanisms by which full employment could break down. In particular, if humans are competing with AIs for some scarce resource, such as energy:

It’s possible that AI will grow so valuable that its owners bid up the price of energy astronomically — so high that humans can’t afford fuel, electricity, manufactured goods, or even food. At that point, humans would indeed be immiserated en masse.

He goes on to note that this probably wouldn’t be allowed:

Of course, if human lives are at stake rather than equine ones, most governments seem likely to limit AI’s ability to hog energy. This could be done by limiting AI’s resource usage, or simply by taxing AI owners. The dystopian outcome where a few people own everything and everyone else dies is always fun to trot out in Econ 101 classes, but in reality, societies seem not to allow this.

Note that the argument is no longer “comparative advantage provides a mathematical proof that people will always find work”. It is now “obviously we’re not going to allow everyone to starve in the streets”. Hopefully that’s true; but it’s a very different argument! Basically, to accept that economics guarantees people will always have jobs, you have to assume that none of the many material inputs that we need to lead comfortable lives (energy, living space, all of the inputs needed to produce our food, etc.) could be more efficiently used by AIs. Otherwise, economics is out the window, and we’re falling back on politics.
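The condition in the paragraph above can be written as a one-line check. This is a toy model under assumptions of my own: a single scarce resource (energy), a market price equal to the value an AI can generate from one unit of it, and made-up numbers. None of the names or figures come from Smith's post.

```python
def human_can_afford_subsistence(human_wage: float,
                                 energy_needed: float,
                                 ai_value_per_energy_unit: float) -> bool:
    """True if the wage covers subsistence energy at the AI-driven price.

    Assumes AI owners bid the energy price up to the value an AI
    can generate from one unit of energy.
    """
    energy_price = ai_value_per_energy_unit
    return human_wage >= energy_price * energy_needed


if __name__ == "__main__":
    # Hypothetical numbers: same wage and energy need, two AI productivities.
    print(human_can_afford_subsistence(100.0, 10.0, 2.0))
    print(human_can_afford_subsistence(100.0, 10.0, 50.0))
```

In this sketch the human's wage and needs never change; only the AI's ability to extract value from energy does, and that alone is enough to flip the result.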

Basically Nothing About This Is Reassuring

The idea that we needn’t fear being put out of work by AI comes up frequently. I would love to feel reassured, but I just can’t get there.

(Remember that all of this is predicated on the assumption that AIs eventually become “better than humans at every conceivable task”. When, or if, that might happen is a separate question.)

First of all, the primary argument put forth to support the idea of continued employment – that people will adapt into their new comparative advantage – seems to ignore limits on adaptation; the fact that humans will likely make less efficient use of valuable inputs; and transaction costs. Not to mention the acknowledged issue that from a strict economic viewpoint, it may not be worth the resources needed to keep people alive – and so we will need to fall back on non-economic mechanisms to justify our existence.

Second of all, a world with advanced AI is going to be very different from the present day, and it seems quite hubristic to make confident proclamations about that world based on past experience.

Finally, even if comparative advantage holds, there’s something deeply unsettling about a world in which we are a near-irrelevant detail, even if that world is so wealthy that we’re still not quite worth discarding.

If anyone reading this is connected with Noah Smith, I’d love to get his take. Judging by the volume of comments on his post, he may not be interested in reading yet another rebuttal. But I don’t see anyone bringing up the points I make here, such as the problem that most jobs involve some sort of valuable input, and AIs may be able to produce better outputs from those inputs. More fundamentally, I don’t see anyone pointing out the danger in blindly extrapolating simple principles of present-day economics into a hypothetical world that would look very different from today.

"It took me like two minutes to come up with some obvious holes in this idea:"

I'm not sure these are holes, at least the way Smith argues it.

"Lower quality work": Yes, humans will do lower-quality work, but they will also be much cheaper. Sure, if you want AIs that are better, you can pay for them, but the opportunity cost makes them prohibitively expensive, as I will explain.

"Transactional overhead": Yes, AIs are better here, but the cost puts them out of reach.

Why are AIs so expensive? The comparative advantage argument holds that all the available AIs are doing R&D work in fusion, quantum computing, neural linking, curing disease, climate control, etc. Pulling them away from that requires paying their proprietors enormous sums, equal to the opportunity cost of the cheaper energy and compute that a future economy would forgo: trillions of dollars? AIs become astronomically expensive exactly because they're so smart.

So the likely scenario seems to be that an energy and compute budget is mandated by law for humans at a level just below super-intelligence -- so plenty of energy and compute for everything we need. This doesn't constrain the AIs because they are constantly discovering new energy sources and building faster compute, so what's left to humans shrinks as a percentage of the total year by year.

"Adaptation": Yes, this is a challenge, but also Smith grants from the beginning that continuous diversification is a historical given. There will be farmers and horse trainers out of work as an economy changes. But it is not possible for everyone to be out of work because there is always something that humans can do to free up an AI for more important work.

But the more important counter to adaptation problems is that the AI world is fabulously wealthy, with cheap energy and compute, so there is no need to work: the basics of life are free, and people who don't want to work won't need to.

However, like you, I have concerns about humans being irrelevant. Very rapidly in the scenario above (only a few decades?), we are no longer in control of AI, and AI is effortlessly in charge. Smith seems to recognize that his scenario only takes us to that point. Once AI calls the shots, it's anyone's guess what happens next.

"If anyone reading this is connected with Noah Smith, I’d love to get his take."

Uh, you saw his response to Zvi. Your post isn't even close to the level of deference and humility required for a Smith response! (smile)

You have written “it’s impossible to grasp the sheer number of edge cases until you’re operating in the real world.” Won’t edge cases be enough to keep us humans occupied?