The AI Explosion Might Never Happen

As AI Learns To Improve Itself, Improvements Will Become Harder To Find

As AIs become increasingly capable, they will acquire the ability to assist in their own development.

Let’s presume that we eventually manage to create an AI that is slightly better than us at AI design. Since that system is better at AI design than its human creators, it should be able to design an AI better than itself. That second system should then be able to design its own improved successor, and so forth.

This process, known as recursive self-improvement, is central to many scenarios for the future of AI. Some observers believe that once the process begins, it will lead to a rapid upward spiral in AI capabilities, perhaps culminating in a technological singularity. As Eliezer Yudkowsky put it way back in 2008[1]:

I think that, at some point in the development of Artificial Intelligence, we are likely to see a fast, local increase in capability—“AI go FOOM.” Just to be clear on the claim, “fast” means on a timescale of weeks or hours rather than years or decades; and “FOOM” means way the hell smarter than anything else around, capable of delivering in short time periods technological advancements that would take humans decades, probably including full-scale molecular nanotechnology. (FOOM, 235)

A lot of people seem to believe that, once AIs approach human capability, this sort of “foom” loop is more or less inevitable. But the math doesn’t actually say that. We may see periods where AI progress accelerates, but the feedback loop probably won’t be explosive, and could easily peter out. In today’s post, I’ll explore some of the factors at play, and early indicators that could tell us when we might be entering a period of rapid self-improvement.

A Parable

Once upon a time, there was a manufacturing company.

To begin with, it was a small, locally owned business. However, the owner had ambitions to create something larger. He used a small inheritance to hire several engineers to research improved manufacturing techniques.

Armed with these improved techniques, the company was able to produce their products faster, at a lower price. This led to an increase in both sales and profits.

The owner plowed those increased profits back into R&D, yielding further efficiency improvements. As the company’s manufacturing prowess continued to improve, the competition fell farther behind. Soon they were able to hire their pick of each year’s graduating class of engineering talent; no one else could pay as well, and besides, all of the best work was being done there.

Every year brought higher profits, more R&D, improved efficiency, increased sales, in an unending feedback loop. Eventually the company was manufacturing everything on Earth; no other firm could compete. Fortunately, there was no need to stop at mere world domination; such was the company’s prowess that they could easily manufacture the rockets to carry people to other planets[2], creating new markets that in turn allowed R&D to continue, and even accelerate. Within a half century of its founding, the company had developed molecular nanotechnology, interplanetary colonies, and was even making progress on lower back pain. All because of the original decision to invest profits into R&D.

Yes, It’s a Silly Story, That’s the Point

In general, increased R&D spending should yield increased profits, which in turn should allow further R&D spending. But no company has ever managed to parlay this into world domination. Even titans like Apple or Google bump up against the limits of their respective market segments.

There are of course many reasons for this, but it all boils down to the fact that while each dollar of increased R&D should return some amount of increased profit, it might return less than a dollar. Suppose you spend $10,000,000 per year manufacturing widgets, you spend $5,000,000 on a more efficient process, and the new process is 3% more efficient. That efficiency saves you $300,000 per year. It will take over 16 years to pay back the R&D cost; after accounting for time-value-of-money and other factors, you probably didn’t come out ahead, and you certainly won’t be in a position to fund an accelerating feedback loop.
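The widget arithmetic can be checked directly, and extended to show why a return of less than a dollar per dollar cannot sustain a runaway loop. This is a minimal sketch: the 5% discount rate and 20-year useful life are assumptions (the text only mentions “time-value-of-money and other factors”), and the reinvestment loop assumes, hypothetically, that every later round of R&D earns the same return per dollar.

```python
# Figures from the widget example; the discount rate and horizon are assumptions.
annual_cost = 10_000_000        # yearly manufacturing spend ($)
rnd_cost = 5_000_000            # one-time R&D spend ($)
efficiency_gain = 0.03          # new process is 3% more efficient

annual_savings = annual_cost * efficiency_gain   # $300,000 per year
payback_years = rnd_cost / annual_savings        # about 16.7 years

# Assumed (not from the text): 5% discount rate, 20-year useful life.
discount_rate = 0.05
horizon_years = 20
present_value = sum(annual_savings / (1 + discount_rate) ** year
                    for year in range(1, horizon_years + 1))
npv = present_value - rnd_cost                   # negative means a net loss
return_per_dollar = present_value / rnd_cost     # below 1 under these assumptions


def total_from_reinvesting(first_outlay, return_per_dollar, rounds):
    """Total R&D funded when every dollar returned is plowed back in.

    With return_per_dollar < 1 this is a geometric series that converges to
    first_outlay / (1 - return_per_dollar); only at >= 1 does it keep growing.
    """
    total, outlay = 0.0, first_outlay
    for _ in range(rounds):
        total += outlay
        outlay *= return_per_dollar
    return total


print(f"payback: {payback_years:.1f} years, NPV: ${npv:,.0f}")
print(f"return per R&D dollar: {return_per_dollar:.2f}")
print(f"total R&D ever funded: ${total_from_reinvesting(rnd_cost, return_per_dollar, 100):,.0f}")
```

Under these assumptions each R&D dollar returns roughly 75 cents in present value, so the loop funds only about four times the original outlay in total before fizzling out, rather than spiraling upward.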

Positive Feedback Eventually Reaches a Limit

We see diminishing feedback all the time in the real world:

Teachers are taught by other teachers, but we don’t see a runaway spiral of educational brilliance.

Chip design relies heavily on software tools, which are computationally demanding. Better chips can run these tools more efficiently, yet we haven't experienced an uncontrolled rate of improvement.

In biological evolution, a species’ fitness is directly correlated with its population size, and a larger population creates more room for beneficial mutations. Moreover, the very mechanisms that drive evolution – such as mutation rates and mate selection – are themselves subject to evolutionary improvement. However, this has not led to a biological singularity.

Feedback loops often do lead to spiraling growth, for a while. Smartphone sales and capabilities both grew explosively from 2010 through around 2015, until the market became saturated. An invasive species may spread like wildfire… until it covers the entire available territory. Every feedback loop eventually encounters a limiting factor, at which point diminishing returns set in. Teaching ability is limited by human intelligence. Chip capabilities are limited by the manufacturing process. AI may turn out to be limited by computing hardware, training data, a progressively increasing difficulty in finding further improvements, or some sort of complexity limit on intelligence.

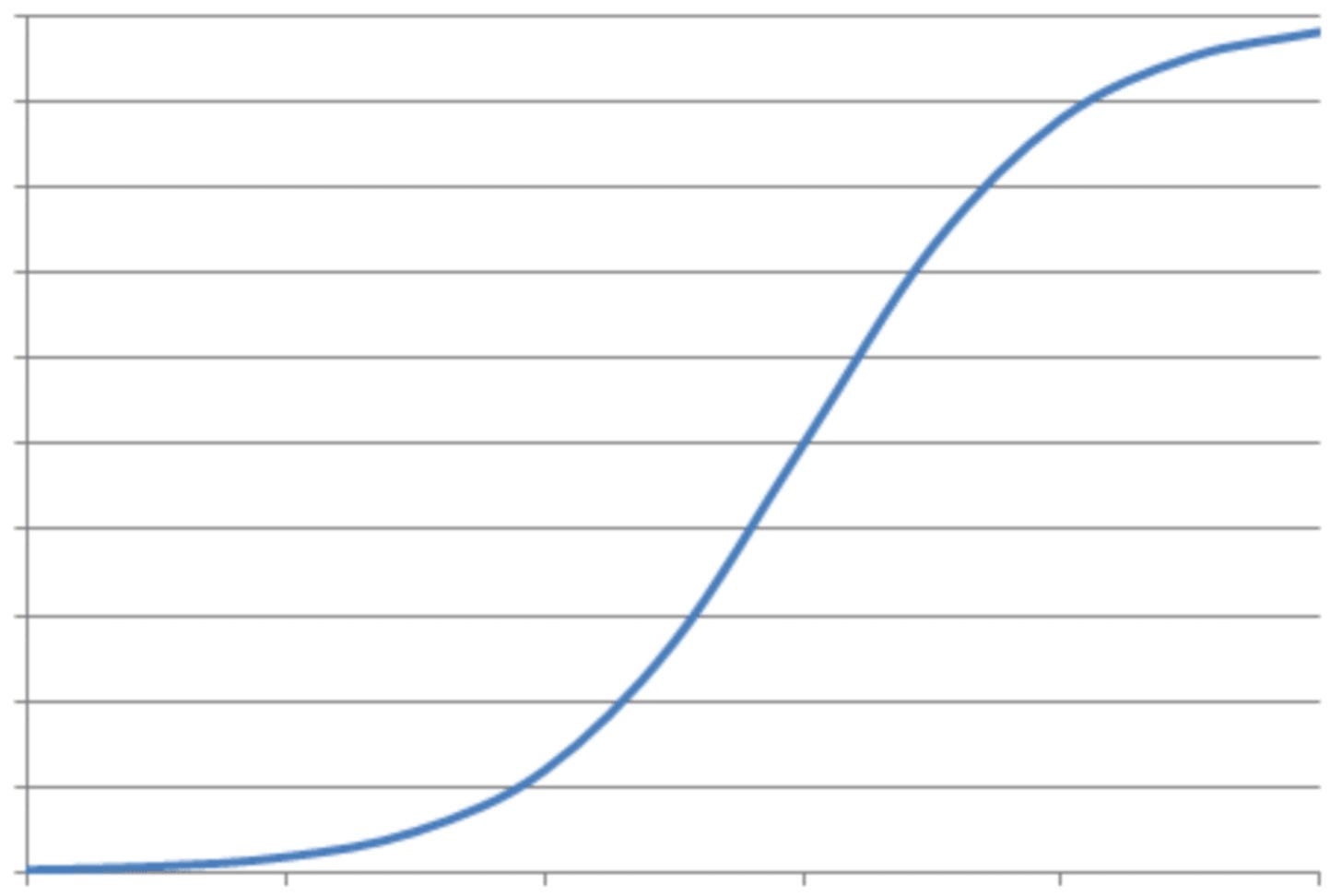

We’re all familiar with the idea of exponential growth, as illustrated by the adoption of a viral product, or the increase in chip complexity captured by Moore’s Law. When diminishing returns set in, the result is known as an S-curve:

Note that the first half of an S-curve looks exactly like exponential growth. Using Facebook[3] as an example, this is the period where they were taking the world by storm; R&D spending went into initiatives with huge payoff, such as building core features. In recent years, having exhausted natural avenues for growth, Facebook has spent tens of billions of dollars developing the “metaverse”… yielding little return, so far.
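To see why the first half of an S-curve is indistinguishable from exponential growth, here is a small sketch comparing plain exponential growth with logistic growth, one common S-curve model. The logistic formula and the capacity of 1000 units are illustrative assumptions, not something the text specifies; both curves start at the same value with nearly the same initial growth rate.

```python
import math


def exponential(t, x0=1.0, r=1.0):
    """Unbounded exponential growth."""
    return x0 * math.exp(r * t)


def logistic(t, x0=1.0, r=1.0, capacity=1000.0):
    """Logistic (S-curve) growth starting at x0; growth slows as x nears capacity.

    `capacity` stands in for whatever limiting factor the loop eventually hits.
    """
    return capacity / (1 + (capacity / x0 - 1) * math.exp(-r * t))


for t in range(0, 13, 2):
    e, s = exponential(t), logistic(t)
    print(f"t={t:2d}  exponential={e:12.1f}  s-curve={s:7.1f}  ratio={s / e:.3f}")
```

Early on the ratio stays close to 1, so someone watching only the first few periods could not tell which curve they were on; by the end the S-curve has flattened just below its capacity while the exponential keeps climbing.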

Progress in AI might similarly follow an S-curve (or, more likely, a series of S-curves). We are currently in a period of exponential growth of generative AI. I believe this generative AI curve will eventually flatten out – at something well short of superhuman AGI. Only when we find ways of introducing capabilities such as memory and iterative exploration, which may require breaking out of the LLM paradigm, will rapid progress resume – for a while.

If you step way back, the simple fact that the Earth still exists in its current form tells us that no recursive process in the multi-billion-year history of the planet has ever spiraled completely out of control; some limit has always been reached. It’s legitimately possible that AI will be the first phenomenon to break that streak. But it’s far from guaranteed. Let’s consider how things might play out.

Impact Of Human-Level AI

In the near term, I expect the impact of recursive self-improvement to be minimal. Code-authoring tools such as GitHub Copilot notwithstanding, current AIs can’t take over much of the work involved in AI R&D[4]. If junior-level coding tasks were holding up progress toward AGI, presumably organizations like OpenAI and Google / DeepMind would just hire some more junior coders. Improvements to tools like Copilot will have only modest impact on R&D productivity, insufficient to trigger positive feedback.

As AIs reach roughly human level on a given task, they can free people up to work on other tasks. (Note that by “human-level”, we mean the typical person working to advance cutting-edge AI, which presumably includes a lot of highly talented folks.) When AI reaches this level at 50% of the tasks involved in AI R&D, that’s loosely equivalent to doubling the number of researchers. When 90% of tasks can be automated, that’s like increasing staff 10x. When 100% of tasks can be automated, we become limited by the cost and availability of GPUs to run virtual AI researchers; my napkin math suggests that the impact might be to (virtually) multiply the number of people working on AI by, say, a factor of 100[5].
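The 2x and 10x figures follow an Amdahl’s-law-style pattern: if a fraction f of the work is automated and the automated share costs essentially no human time, the same people get through 1 / (1 - f) times as much work. Here is a minimal sketch under that assumption, with a hypothetical `compute_cap` parameter standing in for the roughly 100x GPU-limited ceiling from the napkin math in the paragraph above.

```python
def effective_multiplier(automated_fraction, compute_cap=100.0):
    """Rough staff-equivalent multiplier when a fraction of R&D tasks is automated.

    Assumes automated tasks take ~no human time, so humans only do the
    remaining (1 - f) share. Once everything is automated, the limit is GPU
    capacity, represented by the hypothetical `compute_cap` (~100x here).
    """
    if automated_fraction >= 1.0:
        return compute_cap
    return min(1.0 / (1.0 - automated_fraction), compute_cap)


for f in (0.5, 0.9, 0.99, 1.0):
    print(f"{f:.0%} automated -> ~{effective_multiplier(f):.0f}x staff")
```

The curve is gentle until f gets very close to 1, which is one reason the impact is spread out over time: each additional automated task matters more than the last, but only once almost everything else is already automated.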

This sounds like it would have a colossal impact, but it’s not necessarily so. One estimate suggests that personnel only account for 18% of the cost of training a state-of-the-art model (the rest goes for GPUs and other hardware). Replacing the existing staff with cheap AIs would thus free up at most 18% of the R&D budget.

Increasing the number of workers by 100x might have a larger impact. We could expect a dramatic increase in the pace of improvements to model architecture, algorithms, training processes, and so forth. We’d have more innovations, be able to undertake more complex architectures, and be able to do more work to generate or clean up training data. However, the computing power available for experimental training runs will still be limited, so that army of virtual AI researchers will to some extent be bottlenecked by the impossibility of testing all the ideas they’re coming up with. And we are already beginning to exhaust some of the highest quality, most easily accessible sorts of training data. (Stratechery reports a rumor that “Google is spending a billion dollars this year on generating new training data”.)

The point where AIs begin reaching elite human-level performance across an array of tasks will also herald the arrival of one or more headwinds:

By definition, we will have outgrown the use of human-authored materials for training data. Such materials are fundamental to the training of current LLMs, but are unlikely to carry us past the level of human ability[6].

Progress in any technical field becomes more difficult as that field progresses, and AI seems unlikely to be an exception. The low-hanging fruit gets picked first; as a system becomes more complex and finely tuned, each change requires more effort and has less overall impact. This is why the R&D investment required to uphold Moore’s Law has increased drastically over time, and yet progress in chip metrics such as transistor count is finally slowing down[7]. As we approach human-level AGI, a similar phenomenon will likely rear its head.

Some technologies eventually encounter fundamental limits. The rocket equation makes it difficult to reach orbit from Earth’s gravity well; if the planet were even moderately larger, it would be nearly impossible. It’s conceivable that some sort of complexity principle makes it increasingly difficult to push raw intelligence much beyond the human level, as the number of facts to keep in mind and the subtlety of the connections to be made increase[8].

The upshot is that when AIs reach elite human level at AI research, the resulting virtual workforce will notably accelerate progress, but the impact will likely be limited. GPU capacity will not be increasing at the same pace as the (virtual) worker population, and we will be running into a lack of superhuman training data, the generally increasing difficulty of progress, and the possibility of a complexity explosion.

It’s hard to say how this will net out. I could imagine a period of multiple years where progress, say, doubles; or I could imagine that self-improvement merely suffices to eke out the existing pace a bit longer, the way that exotic technologies like extreme ultraviolet lithography have not accelerated Moore’s Law. I don’t think the possibilities at this stage include the potential for any sort of capability explosion, especially because the impact will be spread out over time – AI won’t achieve human level at every aspect of AI R&D at once.

(Many thanks to Jeremy Howard for pointing out that in many cases, AIs are already being used to produce training data – the output of one model can be used to generate training data for other models. Often this is “merely” used to build a small model that, for a certain task (say, coding), emulates the performance of a larger (and hence more expensive / slower) general-purpose model. This allows specific tasks to be accomplished more efficiently, but does not necessarily advance the frontier of performance. However, it may in fact be possible to produce cutting-edge models this way, especially by incorporating techniques for using additional computation to improve the output of the model that is generating the training data[9]. There is no guarantee that this would lead to a positive feedback cycle, but it at least opens the possibility.)

Impact Of Superhuman AI

What happens as AIs begin performing AI research at a significantly higher level than the typical researcher at the leading labs?

On the one hand, the impact could be profound. A team of – in effect – hundreds of thousands of super-von Neumanns would presumably generate a flood of innovative ideas. Some of these ideas would be at least as impactful as deep learning, transformers, and the other key innovations which led to the current wave of progress. GPU availability would still limit the number of large-scale training experiments we can run, but we would presumably get more out of each experiment. And superintelligences might find ways of getting high-level performance out of smaller models, or at least extrapolating experimental results so that large cutting-edge models could be designed based on tests performed on small models.

On the other hand, the headwinds discussed earlier will apply even more sharply at this stage.

It seems conceivable that this will balance out to a positive feedback loop, with AI capabilities accelerating rapidly within a few months or years. It also seems possible that the ever-increasing difficulty of further progress will prevail, and even superhuman performance – in this scenario, likely only modestly superhuman – will not suffice to push AI capabilities further at any great speed.

Of course, if AI progress were to run out of steam at a level that is “merely” somewhat superhuman, the implications would still be profound; but they might fall well short of a singularity.

How To Tell Whether We’re Headed For A Singularity?

At this point, I don’t think it’s possible to say whether AI is headed for a positive feedback loop. I do think we can be fairly confident that we’re not yet on the cusp of that happening. A lot of work is needed before we can automate the majority of R&D work, and by the time we get there, various headwinds will be kicking in.

Here are some metrics we can monitor to get a sense of whether recursive self-improvement is headed for an upward spiral:

First and foremost, I would watch the pace of improvement vs. pace of R&D inputs. How rapidly are AI capabilities improving, in comparison with increases in R&D spending? Obviously, if we see a slowdown in capability improvements, that suggests that a takeoff spiral is either distant or not in the cards at all. However, ongoing progress that is only sustained by exponential growth in R&D investment would also constitute evidence against a takeoff spiral. The level of R&D spending will have to level off at some point, so if increased spending is the only way we’re sustaining AI progress, then recursive self-improvement is not setting the conditions for takeoff.
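One hedged way to make this first metric concrete is to compare, period by period, the growth rate of some capability index with the growth rate of R&D spending. The sketch below assumes capability can be summarized on a ratio scale (for example, by some benchmark-derived index), which is itself a strong assumption; the `spending` and `capability` series are invented purely for illustration and are not real data.

```python
import math


def returns_to_rnd(capability, spending):
    """For each period, log-growth in capability divided by log-growth in spending.

    A value near 1 means capability is keeping pace with R&D inputs; a steady
    decline means progress is increasingly "bought" with more spending.
    """
    ratios = []
    for prev_c, cur_c, prev_s, cur_s in zip(capability, capability[1:],
                                            spending, spending[1:]):
        ratios.append(math.log(cur_c / prev_c) / math.log(cur_s / prev_s))
    return ratios


# Hypothetical series: spending doubles every year, capability gains shrink.
spending = [1, 2, 4, 8, 16]
capability = [1.0, 2.0, 3.5, 5.5, 8.0]

ratios = returns_to_rnd(capability, spending)
print([round(r, 2) for r in ratios])
declining = all(later < earlier for earlier, later in zip(ratios, ratios[1:]))
print("returns to R&D declining:", declining)
```

A falling ratio like this one is the pattern the paragraph describes as evidence against a takeoff spiral; a ratio that held steady or rose even as spending growth slowed would point the other way.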

(This might be especially true so long as much of the investment is devoted to things that are hard to advance using AI, such as semiconductor manufacturing[10].)

Second, I would watch the level of work being done by AI. Today, I believe AIs are only being used for routine coding tasks, along with helping to generate or curate some forms of training data. If and when AI is able to move up to higher-level contributions, such as optimizing training algorithms or developing whole new network architectures, that would be a sign that we are about to see an effective explosion in the amount of cognitive input into AI development. That won’t necessarily lead to a takeoff spiral, but it is a necessary condition.

I would also watch the extent to which cutting-edge models depend on human-generated training data. Recursive self-improvement will never have a large impact until we transcend the need for human data.

Finally, I would keep an eye on the pace of improvement vs. pace of inference costs. As we develop more and more sophisticated AIs, do they cost more and more to operate, or are we able to keep costs down? Recursive self-improvement will work best if AIs are significantly cheaper than human researchers (and/or are intelligent enough to do things that people simply cannot do).

I think an AI takeoff is unlikely in the near to mid term. At a minimum, it will require AIs to be at least modestly superhuman at the critical tasks of AI research. Whether or not superhuman AI can achieve any sort of takeoff – and how far that takeoff can go before leveling out – will then depend on the trajectory of the metrics listed above.

This post benefited greatly from suggestions and feedback from David Glazer, Russ Heddleston, and Jeremy Howard.

[1] I don’t know whether he still argues for the same “weeks or hours” timeline today.

[2] I know this sounds like an Elon Musk / SpaceX reference, but it’s not meant to be; outer space is an important part of this narrative, and it’s not my fault if one person has managed to associate himself with the entire concept.

[3] I refuse to say “Meta”; that’s just encouraging them.

[4] I’m not close to anyone at any of the cutting-edge AI research labs, so my opinion that tools like Copilot won’t have a big impact is based primarily on my own general experience as a software engineer. On the other hand, I’ve seen some folks opine that AI tools are already making a difference. For instance, back in April, Ajeya Cotra noted:

Today’s large language models (LLMs) like GPT-4 are not (yet) capable of completely taking over AI research by themselves — but they are able to write code, come up with ideas for ML experiments, and help troubleshoot bugs and other issues. Anecdotally, several ML researchers I know are starting to delegate simple tasks that come up in their research to these LLMs, and they say that makes them meaningfully more productive. (When chatGPT went down for 6 hours, I know of one ML researcher who postponed their coding tasks for 6 hours and worked on other things in the meantime.)

All I can really say is that I’m skeptical that the impact on the overall pace of progress is significant today, and I’d love to hear from practitioners who are experiencing otherwise so that I can update my understanding.

[5] (I’m going to use some very handwavy numbers here; if you have better data, please let me know.)

According to untrustworthy numbers I googled, DeepMind and Google Brain have around 5000 employees (combined), and OpenAI has perhaps 1000. By the time we get to human-level AGI, let’s say that the organization which achieved it had 5000 people working directly or indirectly to support that goal (as opposed to, for instance, working on robotics or specialized AIs). Let’s say that another 5000 people from outside the organization are contributing in other ways, such as by publishing papers or contributing to open-source tools and data sets. So, roughly 10,000 talented people contributing to the first AI to cross the general-AI-researcher threshold. (I’m glossing over some other categories of contributors, such as the many folks in the chip industry who are involved in creating each new generation of GPU, associated software tools, high-speed networks, and other infrastructure.) Given the colossal stakes involved, this is probably a conservative estimate.

Let’s say that when human-level AI is available, one of the leading-edge organizations is able to run 140,000 instances, each running at 1x human speed. Here’s how I got there: ChatGPT reportedly has about 100,000,000 users, and OpenAI controls access to their various services in ways which suggest they are GPU-constrained. Let’s imagine that if they had human-level AI today, they’d manage to devote 2x as much computing power to AI research as they currently devote to ChatGPT. (Which is not 2x their total usage today, because ChatGPT does not include their various APIs, nor does it include the GPUs they use for training new models.) So that’s enough for 200,000,000 users. If each user accesses ChatGPT for an average of one minute per day, that’s enough for 140,000 simultaneous ChatGPT sessions. (One minute of generation per user per day might seem low, but I suspect it’s actually too high. ChatGPT can generate a lot of words in one minute, and average usage of large-scale Internet services is remarkably low. Most of those 100,000,000 users probably don’t touch the service at all on a typical day, and on days when they do use it, they may typically ask just a few questions. There will be some heavy users, but they’ll be a small fraction of the total.)

The first human-level AGI will likely use much more compute than ChatGPT, even with whatever algorithmic improvements we’ve made along the way. On the other hand, OpenAI – or whatever organization beats them to the punch – will have more compute by then. I’ll generously assume that these factors cancel out, meaning that they’ll be able to marshal 140,000 virtual AI researchers.

Those researchers will be working 24x7, so about four times more hours per year than a person. In my previous post, I also argued that they’ll get a productivity gain of “several” due to being more focused, having high-bandwidth communication with one another, and other factors. The upshot is that they might have the capacity of perhaps one million people. This paper, using entirely different methodology, arrives at a similar estimate: roughly 1,800,000 human-speed-equivalent virtual workers in 2030.
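The napkin math in this footnote can be chained together in a few lines. This is a sketch of the estimate, not a model: the 40-hour human work week behind “about four times more hours” is an assumption, and `productivity_factor = 2` is a hypothetical stand-in for “several” (raise it to see how sensitive the result is).

```python
MINUTES_PER_DAY = 24 * 60

# Inputs from the estimate above; the 40-hour week and productivity_factor are assumptions.
chatgpt_users = 100_000_000
research_user_equivalents = 2 * chatgpt_users   # 2x ChatGPT's compute goes to research
minutes_per_user_per_day = 1                    # average generation time per user

concurrent_instances = (research_user_equivalents * minutes_per_user_per_day
                        / MINUTES_PER_DAY)      # roughly 140,000

hours_factor = (24 * 7) / 40      # round-the-clock work vs. a 40-hour week, about 4x
productivity_factor = 2           # assumption: low end of "several"

researcher_equivalents = concurrent_instances * hours_factor * productivity_factor
human_contributors = 10_000       # people contributing to the first human-level AI
multiplier = researcher_equivalents / human_contributors

print(f"concurrent instances:   {concurrent_instances:,.0f}")
print(f"researcher-equivalents: {researcher_equivalents:,.0f}")
print(f"workforce multiplier:   {multiplier:.0f}x")
```

With these inputs the chain lands at a bit over one million researcher-equivalents, or roughly 100x the 10,000 human contributors, which is where the “factor of 100” in the main text comes from.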

[6] In We Aren't Close To Creating A Rapidly Self-Improving AI, Jacob Buckman explores the difficulty of constructing high-quality data sets without relying on human input.

[7] Moore’s Law is a special case of Wright’s Law. Originally applied to aircraft production, Wright’s Law stated that for each 2x increase in the cumulative number of aircraft produced, the labor time per aircraft fell by 20%. In other words, the 2000th aircraft produced required 20% less labor than the 1000th. Similar effects have been found across a wide variety of manufacturing domains; the general form is that when the number of items produced doubles, the cost decreases by X%, where X depends on the item being produced.
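The general form of Wright’s Law can be written as a power law: the cost of the n-th unit is the first unit’s cost times n raised to log2(1 - X). The sketch below uses the 20% aircraft figure; generalizing “labor time” to “cost” follows the text’s general form, and `first_unit_cost` is an arbitrary normalization. It also shows the flip side discussed next: each further 20% cut requires doubling cumulative output again.

```python
import math


def wright_cost(n, first_unit_cost=1.0, learning_rate=0.20):
    """Cost of the n-th unit under Wright's Law.

    Each doubling of cumulative output cuts unit cost by `learning_rate`
    (20% in the aircraft example), giving cost = C1 * n ** log2(1 - rate).
    """
    exponent = math.log2(1.0 - learning_rate)   # about -0.32 for a 20% rate
    return first_unit_cost * n ** exponent


# The 2000th unit costs 20% less than the 1000th.
print(wright_cost(2000) / wright_cost(1000))

# Each successive 20% cut needs twice as many cumulative units as the last.
for k in range(1, 5):
    n = 1000 * 2 ** k
    print(f"unit {n:>6}: {wright_cost(n) / wright_cost(1000):.3f} of unit 1000's cost")
```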

Moore’s Law, and more broadly Wright’s Law, are of course usually viewed as representing the inexorable march of progress. However, the flip side is that each improvement of X% requires twice as much investment as the previous improvement. Eventually this must peter out, as we are finally seeing for Moore’s Law. As Scott Alexander notes in ACX:

Some research finds that the usual pattern in science is constant rate of discovery from exponentially increasing number of researchers, suggesting strong low-hanging fruit effects, but these seem to be overwhelmed by other considerations in AI right now.

Or Zvi Mowshowitz, in Don’t Worry About The Vase:

We are not seeing much in the way of lone wolf AI advances, we are more seeing companies full of experts like OpenAI and Anthropic that are doing the work and building up proprietary skill bundles. The costs to do such things going up in terms of compute and data and so on also contribute to this.

As models become larger, it becomes more expensive to experiment with full-scale training runs. And of course as models and their training process become more complex, it becomes more difficult to find improvements that don’t interfere with existing optimizations, and it requires more work to re-tune the system to accommodate a new approach. Not to mention the basic fact that the low-hanging fruit will already have been plucked.

[8] From Why transformative artificial intelligence is really, really hard to achieve, some additional arguments for the possibility of fundamental limits on effective intelligence:

We are constantly surprised in our day jobs as a journalist and AI researcher by how many questions do not have good answers on the internet or in books, but where some expert has a solid answer that they had not bothered to record. And in some cases, as with a master chef or LeBron James, they may not even be capable of making legible how they do what they do.

The idea that diffuse tacit knowledge is pervasive supports the hypothesis that there are diminishing returns to pure, centralized, cerebral intelligence. Some problems, like escaping game-theoretic quagmires or predicting the future, might be just too hard for brains alone, whether biological or artificial.

[9] Such as chain-of-thought prompting, tree-of-thought prompting, or simply generating multiple responses and then asking the model to decide which one is best.
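The last of these techniques, often called best-of-n sampling, is simple enough to sketch. `generate` and `judge` are hypothetical callables standing in for calls to a model (no real API is implied); the toy versions below just emit drafts with random “quality” scores so the selection logic can run on its own. Spending n generations plus n judgments per answer is exactly the “additional computation” traded for better output.

```python
import random
from typing import Callable


def best_of_n(prompt: str,
              generate: Callable[[str, int], str],
              judge: Callable[[str, str], float],
              n: int = 4) -> str:
    """Sample n candidate responses and return the one the judge scores highest."""
    candidates = [generate(prompt, seed) for seed in range(n)]
    return max(candidates, key=lambda c: judge(prompt, c))


# Toy stand-ins for a real model (hypothetical, for illustration only).
def toy_generate(prompt: str, seed: int) -> str:
    rng = random.Random(seed)
    return f"{prompt} -> draft with quality {rng.randint(0, 100)}"


def toy_judge(prompt: str, response: str) -> float:
    # Pretend the judging model can read off the draft's quality.
    return float(response.rsplit(" ", 1)[-1])


print(best_of_n("explain S-curves", toy_generate, toy_judge, n=8))
```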

[10] AI may certainly be able to find better techniques for manufacturing semiconductors, or even replace semiconductors with some alternative form of computational hardware. However, semiconductors are an extremely mature technology, starting to approach physical limits for the current form, meaning that it’s hard to find further improvements – as evidenced by the fact that Moore’s Law is slowing down, despite record levels of investment into R&D. It’s always possible that AI will eventually help us develop some new approach to computing hardware, but I would expect this to be a long way off and/or require AI to already have advanced well beyond human intelligence. The upshot is that in almost any scenario, if AI progress is requiring ever-larger investments in computing hardware, that would suggest there is no “foom” in the offing anytime soon.

Of course, AI may be able to reduce our need for silicon, by coming up with better training algorithms that need fewer FLOPs, and/or better chip designs that achieve more FLOPs per transistor and per watt. However, so long as we see leading-edge AI labs spending ever-increasing amounts on silicon, that’s evidence that such “soft” improvements aren’t proving sufficient on their own.
