Beyond the Turing Test

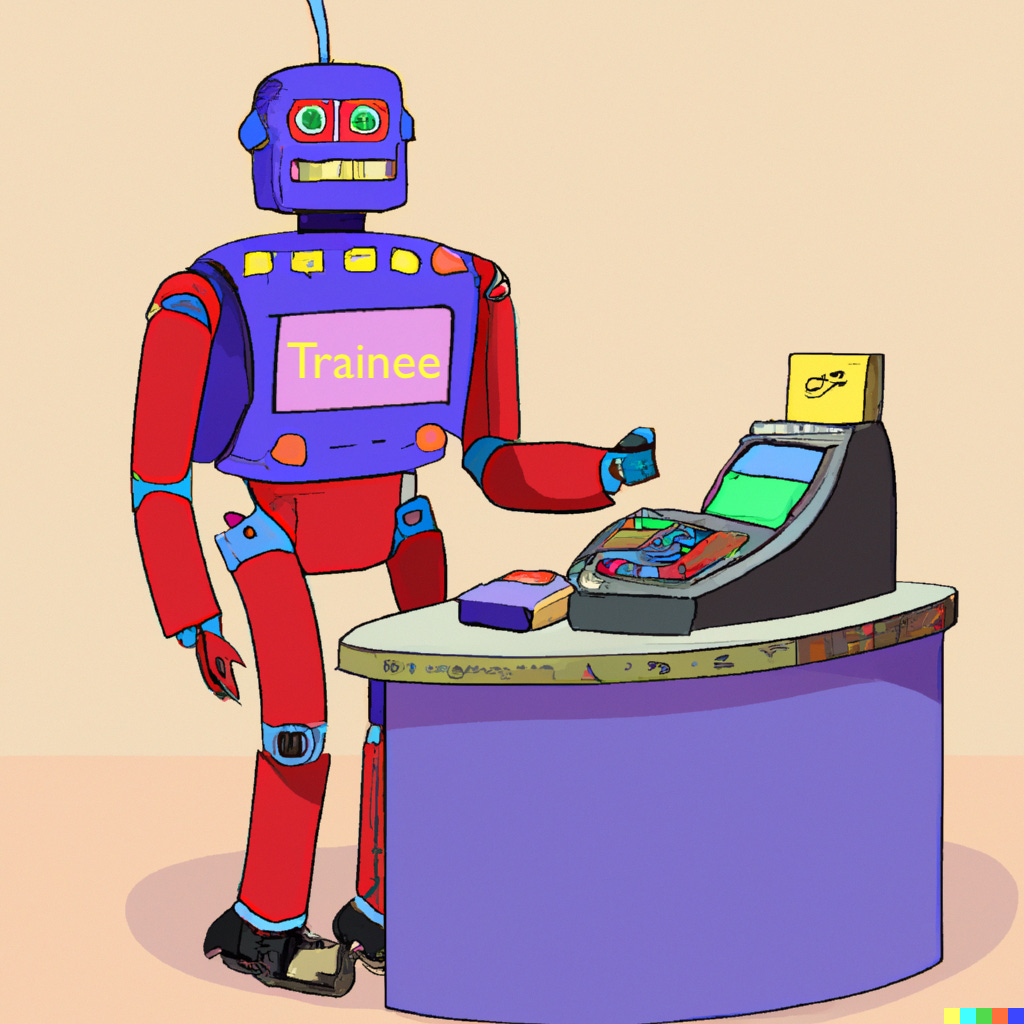

The key question about AI is no longer “can it think?” but rather “can it hold down a job?”

A hot question of the day: when will AIs achieve human-level intelligence?

The topic is controversial for many reasons: it’s emotionally charged; facts on the ground are changing rapidly; there have been past claims of human-level AI that look foolish in hindsight; doubters philosophically refuse to accept the possibility that a machine can “truly think”; and zealots dismiss any skepticism as stemming from human parochialism.

As we progress toward an answer to the question “can a machine be intelligent?”, we are learning as much about the question as we are about the answer. This tweet (from 2018!) strikes close to home:

Activities that we used to believe required intelligence, such as playing chess or telling jokes, turn out to be within the reach of computer systems that clearly do not match our intuition of general intelligence. Five years ago, I think almost anyone would have said that acing the AP Bio exam would require substantial reasoning ability. Based on GPT-4’s performance, it now appears that it can also be done using shallow reasoning in conjunction with a ridiculously large base of knowledge. Who knew?

It has turned out to be difficult to pin down the concept of “human-level intelligence”. In this post, I’ll argue that we should nevertheless keep asking whether and when computers will reach this threshold, because the answer tells us important things about how to prepare for the future. And I’ll propose an approach that seems more grounded than past attempts.

The Turing Test Has Outlived Its Usefulness

Of course, the classic method for evaluating computer intelligence is the Turing Test. Computing pioneer Alan Turing described it in 1950, calling it “the imitation game”. The basic idea is a three-way text chat session between a human examiner, a human subject, and a computer subject. The computer pretends to be a human being, and the examiner tries to figure out which subject is the computer. If the examiner is fooled, the computer has passed the test.

People often misapply the label “Turing Test” to a simpler scenario, where someone has a one-on-one chat without being told that their counterpart is a computer program. If they fail to notice, this is sometimes construed as passing the Turing Test. Instances of people being fooled in this fashion date back to 1965! A very simple program called ELIZA, designed to emulate a Rogerian psychotherapist (but not actually intended to fool anyone), gave canned responses that would sometimes mislead the innocent audience of the day. To get a sense of just how primitive ELIZA is, you can try it yourself online; here’s how my conversation went:

[Eliza] Hello, I am Eliza.

[Me] Nice to meet you. My name is Steve.

[Eliza] We were discussing you, not me.

[Me] I'm sorry, didn't we just now begin our conversation?

[Eliza] Do you believe it is normal to be sorry, didn't we just now begin our conversation?

I am not sure how faithful this version is to the original 1965 implementation, but it is well known that all ELIZA could do was parrot back a handful of canned phrases, along with snippets of things its conversational partner had said. It was a far cry from Siri, let alone ChatGPT. Even so, some users reportedly were convinced that they were talking to a person – in large part, no doubt, because in 1965 it was difficult to imagine any alternative.
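To show how little machinery this kind of parroting takes, here is a toy ELIZA-style responder in Python. It is a minimal sketch under stated assumptions: the rules, the pronoun-reflection table, and the names `RULES`, `reflect`, and `respond` are hypothetical, chosen so the program reproduces the exchange above. They are not Weizenbaum's original script, which was considerably richer.

```python
import re

# Pronoun swaps so that echoed fragments read from ELIZA's point of view.
REFLECTIONS = {
    "i": "you", "me": "you", "my": "your", "am": "are",
    "you": "I", "your": "my",
}

# Hypothetical keyword rules, checked in order. These are illustrative
# assumptions, not Weizenbaum's original DOCTOR script.
RULES = [
    (re.compile(r"\bi'?m\s+(.*)", re.IGNORECASE),
     "Do you believe it is normal to be {0}?"),
    (re.compile(r"\bi feel\s+(.*)", re.IGNORECASE),
     "Why do you feel {0}?"),
    (re.compile(r"\byou\b", re.IGNORECASE),
     "We were discussing you, not me."),
]

# Reply used when no rule matches.
FALLBACK = "Please go on."


def reflect(fragment: str) -> str:
    """Swap first- and second-person words in an echoed fragment."""
    return " ".join(REFLECTIONS.get(w.lower(), w) for w in fragment.split())


def respond(user_input: str) -> str:
    """Return a canned reply, echoing part of the input if a rule captures it."""
    for pattern, template in RULES:
        match = pattern.search(user_input)
        if match:
            # Strip trailing punctuation so the template controls the ending.
            captured = [reflect(g.rstrip(".!?")) for g in match.groups()]
            return template.format(*captured)
    return FALLBACK


print(respond("Nice to meet you. My name is Steve."))
print(respond("I'm sorry, didn't we just now begin our conversation?"))
```

Each reply is just a regular-expression match plus string substitution. Nothing in the program represents what the conversation is about, which is why it cheerfully treats a question as if it were a feeling.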

This example makes it clear that with a casual examiner, a program that is clearly not “intelligent” can easily pass this informal version of the Turing Test. Often the examiner merely seizes on one particular bit of behavior that they believe a computer would not exhibit. People have been fooled by a program that was deliberately designed to make typos, and by another that gave silly responses. In 1965, merely being able to use words was enough to throw some people off.

A robust Turing Test, then, requires an examiner with some expertise, who is making a real attempt to catch the computer out. Even then, the format – a limited-time chat session between participants who do not previously know one another – imposes limitations that may not have seemed important in 1950. In particular, as I discussed in my first post, large language models like GPT-4 do best at “shallow” questions that don’t require much context. They are much less able to deal with a question like “how should we update our product plan in light of yesterday’s announcement from our competitor?”, which requires a wealth of context and background knowledge. Unfortunately, that’s exactly the sort of question you can’t ask in a chat session.

Perhaps we could devise a long-form variant of the Turing Test, to prevent models like GPT-4 from skating through based on their ability to ad-lib. However, this would still be subject to the foibles of the examiner and the human subject. It also imposes a burden on the computer to act, not merely intelligent, but human; while that’s a fascinating exercise, it obscures the key question of intelligence.

Finally, the Turing Test is designed to give a yes / no answer: the computer is intelligent, or it is not. I think we’re past the point where such a simple classification is useful.

Human-Level Intelligence Is Not A Single Thing That AIs “Have” or “Don’t Have”

We’re way past the point where we can talk about intelligence as a simple scale, with a threshold clearly marked as “human level”, and AIs gradually progressing upward toward that threshold.

This has always been a shaky notion. After all, computers have steadily been surpassing humans in specific tasks that were once thought to require general intelligence:

In 1997, IBM’s Deep Blue defeated the reigning world chess champion.

In 2011, IBM’s Watson defeated two former champions on the trivia game Jeopardy, demonstrating a certain level of natural language understanding.

That same year, a neural network outscored the highest-performing human at a very different task: the “German Traffic Sign Recognition Benchmark” competition, scoring 99.45%, vs. 99.22% for the top human entrant.

In 2016, Google DeepMind's AlphaGo defeated world Go champion Lee Sedol; Go, with its large board, strategic complexity, and subtle interactions between pieces, had long been considered a particularly hard nut for computers to crack.

In 2019, a computer beat “top human professionals” in a poker tournament. Poker, involving multiple players and “hidden information” (you can’t see the other players’ cards), was also thought to be difficult to automate.

This year, GPT-4 outdid “90% of humans who take the bar to become a lawyer, and 99% of students who compete in the Biology Olympiad, an international competition that tests the knowledge and skills of high school students in the field of biology”.

And of course, computers are now even branching out into creative fields such as visual art (DALL-E, Midjourney), exhibiting abilities well beyond those of the average person.

Despite these achievements, the best AI systems fall far short of human performance in other ways. GPT-4 still suffers from “hallucinations” (i.e. making things up), and is shaky when it comes to novel tasks that it hasn’t pre-learned from reading the web. If a computer can defeat the world champions at Chess and Go, is competitive at activities ranging from the bar exam to poker to visual art, but sometimes makes up facts, contradicts itself, fails to learn from its own mistakes, and can’t follow a simple variation on the river-crossing puzzle, then it’s impossible to say whether it has achieved human-level intelligence. It’s on both sides of the line at once.

In short, asking “how smart is GPT-4” is like asking “how high-strung is a geyser”; the measurement simply isn’t applicable. Geysers and high-strung people both explode unexpectedly, but neither provides a good scale on which to evaluate the other. When computers were far short of intelligence, it didn’t matter exactly how we defined it. As they draw closer, simple measures no longer suffice. Instead of trying to decide whether computers “are” or “are not” human-level intelligent, we can only ask how they perform on particular tasks.

Should We Even Try To Compare Computers To Humans?

Given the difficulty of comparing AIs with people, should we even bother to try? I do believe it’s worthwhile; as I noted in an earlier post:

I think that one good yardstick is the extent to which computers are able to displace people in the workplace. This has a nice concreteness: you can look around and see whether a job is still being done manually, or not. That’s a helpful counterweight to the tendency to hyperventilate over the latest crazy thing someone got a chatbot to do…

The pace of workplace automation is also important because it helps determine the impact of AI on society. Automating a job has direct social impacts: costs come down, people have to find other work, and so forth. And if and when software starts displacing workers, that will create a gigantic economic incentive to create even more capable AIs. If there is a “tipping point” in our future, this is probably one of the triggers.

So, it’s important to compare computers to humans, because their relative ability will have a huge impact on how the AI revolution plays out. (Another reason: if you are worried about computers someday taking over the world, then AIs achieving sufficient competence to displace human workers is probably a useful warning sign that they are approaching the level of sophistication necessary to seize power.)

The Job Market Is a Litmus Test for Intelligence

This motivation for comparing computers to people points toward a specific way of carrying out the comparison: we can define human-level performance on a task as the level of performance that would result in humans no longer being employed to perform that task [1]. This is objectively defined, unambiguous, and not subject to cherry-picking or spoofing through clever demos. And it directly relates to the important question of AI’s impact on society.

Unfortunately, the obvious means for measurement – counting the number of people who are displaced by AIs in the workplace – has some drawbacks:

For any given task, there may not be any easily available data to quantify human employment for that task.

Friction and inertia effects may result in humans continuing to be paid to perform a task that computers could do just as well. For instance, it might be part of a larger job that can’t be fully automated; employers might be slow to adapt, or hesitant to trust an AI that exhibits a novel mix of strengths and weaknesses; regulations (e.g. for medical or legal work) might require that a licensed person perform the task.

It’s a lagging indicator; we won’t know that an AI has reached human capability on a given task until well after the threshold has been crossed.

It doesn’t apply to non-economic activities.

Observing the job market also does not give us a way to evaluate new AIs as they are developed. Sticking with “employability” as our gold standard, can we find a more nimble way of measuring how a given AI stacks up?

The working world already has the concept of measuring someone’s fitness for a job without actually employing them in that job. It’s called the “interview process”. Unfortunately, the standard interview process won’t work for AIs.

We Can’t Hire AIs The Same Way We Hire People

The traditional process for screening job candidates may begin with a resume review, followed by a short “phone screen” conversation, and then a series of interviews. For the field I’m most familiar with, software engineering, the interviews are sometimes complemented by a brief coding exercise, lasting anywhere from half an hour to several hours.

There is much debate as to how well these techniques actually work for evaluating people. For an AI, they are hopeless. As roboticist Rodney Brooks said in 2017:

We [humans] are able to generalize from observing performance at one task to a guess at competence over a much bigger set of tasks. We understand intuitively how to generalize from the performance level of the person to their competence in related areas.

In a recent blog post, he added:

But the skills we have for doing that for a person break down completely when we see a strong performance from an AI program. The extent of the program’s competence may be extraordinarily narrow, in a way that would never happen with a person.

In particular, the sorts of questions that come up in a standard interview process are “low-context”, as there isn’t much time to walk through a detailed scenario. As I have argued in past posts, “low-context” tasks are shallow, and fail to exercise the weaknesses of current language models. If we’re going to “hire” AIs – i.e. decide whether they are competitive at a particular job – we’ll need a new approach for evaluation.

Trial Projects To the Rescue?

It is said that the best way to evaluate someone’s fitness for the job is to have them do the job, i.e. to carry out a representative project. And indeed this approach is sometimes used for hiring, under the label “trial project” or “trial period”, and can work very well.

We could use a similar approach to evaluating AIs. For instance, to evaluate an AI as a software engineer, we could give it a substantial coding project – something that would take a skilled person hours, days, or ideally even longer – and see what it’s able to accomplish. Such a task would exercise higher-level skills such as planning, experimentation and refinement, and debugging.

This would be considerably more complex than the Turing Test. We’d have to define a suitable task, or preferably a collection of tasks. It would take time for the computer to make a proper attempt at completing the tasks. Grading the results is nontrivial; a project like this does not yield a result which is simply “correct” or “incorrect”. For a coding assignment, it’s necessary to evaluate how well the program meets the requirements, how efficient, elegant, and maintainable the code is, and so forth. Other domains will have other considerations.

It’s possible that we can find smaller, simpler, quicker tests that do a good job of evaluating an AI’s potential to do actual work. Per Rodney Brooks’ comments earlier, this may not be easy, and as AI designs evolve, we’ll have to constantly be on the lookout for divergence between results on the simple test and ability to perform a real job. A good test should somehow depend on a significant amount of contextual information (information that would be learned by an employee who had been on the job for some time), and take humans an extended period of time to carry out. This is necessary to exercise learning and exploration / refinement skills that are central to many real-world tasks. If we want to develop a smaller, quicker test for some particular task, we would need to somehow convince ourselves that it tests the same abilities as the corresponding real-world task.

For jobs that involve a lot of interaction, e.g. with co-workers or customers, I don’t see how to devise a self-contained project that covers the important job skills. But hopefully, the lens of “could the AI hold down a real job” will help us come up with ideas. In any case, we need to recognize that AI has progressed to the point where evaluating performance is inherently difficult.

The Benchmark For A Given Task Is A Person Who Would Typically Perform That Task

In discussions of “human-level intelligence”, people sometimes ask: which human? For instance, if we want to evaluate artistic ability, should we compare to the average person (who may barely be able to draw), the average career artist, or Da Vinci?

If the question is whether an AI is ready to displace human workers for a given task, it follows that we should compare it to the humans who currently perform that task in the workplace. This will generally represent “above average” performance, because people do things they are good at, and/or become good at the things they do. Per Richard Ngo:

For most tasks, skill differences between experts and average people come from talent differences + compounding returns of decades of practice. Hence why AI often takes years to cross the range of human skill despite the range of human intelligence being relatively small.

Yes, I’m Moving the Goalposts

The following sequence of events has played out repeatedly over the years:

Some task is believed to require intelligence. (Playing chess, natural language processing, image recognition, driving a car.)

A computer successfully carries out the task.

People decide that the system in question is not “truly intelligent”, and therefore in hindsight, the task did not require intelligence.

AI enthusiasts complain about “moving the goalposts” – retroactively changing the criteria for intelligence after a computer has met the previous criteria.

I’m proposing that we replace the Turing Test with the ability to hold down a job, and I’m sure this will spark more cries of “you’re moving the goalposts again!”. To this I respond: complain to the referee. What’s that you say, there is no referee? That’s right, because this isn’t a game, and there are no rules. The Turing Test was a useful concept, but we’ve outgrown it. We keep moving the goalposts, because it turns out to be really really hard to anticipate where the goalposts belong, and also there seems to be more than one goal. Get over it.

Wrapping Up

Historically, questions regarding artificial intelligence were primarily philosophical. Nowadays, they are ruthlessly practical: do I need to pay someone to do [X], or can a computer handle it for me? On the flip side: do I still have a job?

In the philosophical era, the Turing Test was the gold standard. Going forward, a single binary test is no longer sufficient; each area of economic activity is its own question, and the gold standard will become “can a computer hold down the job?”

Hopefully, we can develop test procedures that are less cumbersome than actually placing a computer into the workforce and observing its performance. However, this is challenging enough even for actual people: hiring pipelines are notoriously poor at identifying good performers, despite decades or centuries of history to draw on, and millennia of learning to understand people in general. In any case, thinking about AI capabilities through the lens of the job market will help us measure those aspects of AI performance that will be most important in determining the impact on society.

Alan Turing image credit: MARK I photo album, via phys.org.

Further Reading

A fun riff on the Turing Test by Scott Alexander (Astral Codex Ten). Two quotes to whet your appetite:

You wanted quicker burger-flipping; instead, you got beauty too cheap to meter.

With a strong enough hydraulic press, we can wring the meaning out of speech, like wringing oil from shale, and when we do that, there’s nothing left we can grab onto.

[1] This yardstick could be thrown off by factors other than “intelligence” / ability. AIs will presumably be cheaper than human workers; they may be faster; and they will certainly be able to work a reliable 24-hour day. This could result in some workers being displaced in practice before the AI has, strictly speaking, exceeded human ability. Individual examples of this are already being reported, because “computer steals job” is a great attention-getter. My guess is that the overall impact of speed and cost on the pace of workforce displacement will be limited, on the basis that quick, cheap, crappy work will only win out in a relatively small set of jobs, especially once the novelty wears off – but we’ll see!

Much better than my proposed alternative, can it create an idiom?