Get Ready For AI To Outdo Us At Everything

We have time to prepare; step one is acknowledging what we're preparing for

In the initial installments of this blog, I’ve focused on the current state of AI. I’m now going to shift toward the future, starting with the question: how far should we expect AI capabilities to progress? How smart will these things get?

As the saying goes, “we tend to overestimate the effect of a technology in the short run and underestimate the effect in the long run.” The current hype cycle around ChatGPT marks us as firmly in the “overestimate” phase. It’s a groundbreaking technology, but not world-breaking. Over the next few years, the impact of AI will be substantial, but not profound. A lot of boring work (and a scattering of less-boring work) will be automated. Discovering information will get easier. Some jobs will be lost, others created; the flood of questionable content on social media will ramp up further, but the junk will mostly just displace other junk.

About that long run, though. While GPT-4 is limited in important ways, it does highlight the power of modern deep learning techniques. I think we can now predict with some confidence that machines are going to greatly outstrip human capabilities in essentially all areas, in a time frame measured in decades.

What Do You Mean, “Outstrip Human Capabilities”?

What it sounds like. I expect that in the coming decades, we will see computers that are smarter than us, faster than us, defter, wittier. Better at writing code, picking stocks, launching products, diagnosing illness. Probably better at management, strategy, teaching, counseling, writing novels, directing plays. Everything.

This is, needless to say, both awe-inspiring and terrifying. The implications range from “curing cancer” to “100% unemployment rate”, and go on from there. It sounds crazy as I type it, but I can’t look at current trends and see any other trajectory.

For now, I’ll exclude anything involving the physical world, from washing dishes to installing dishwashers. I do expect the era of the ultra-competent robot to arrive eventually, but I won’t attempt to predict the timeline. The human body packs a remarkable range of abilities into a compact package that runs on just a few hundred watts of power. We are a long way from being able to engineer a robot that can lift a TV, thread a needle, climb a hill, and crack an egg, all on a portable power supply that runs for hours or more without recharging. Multiple breakthroughs will be needed to build both the physical machinery and the software to control it. I wouldn’t rule out the possibility of this happening in the next few decades, but I’m not ready to assert that it will.

I’m also going to waffle on anything involving artistic creativity or personal relationships. I suspect we will see artificial poets, managers, therapists, and novelists, on a timeline not much slower than the more prosaic skills I’ll focus on in this post; but I don’t currently feel prepared to back up that intuition with coherent arguments. In any case, if AIs merely become superior to people for a broad range of less-creative, less-interpersonal tasks, that still has profound implications.

It may be worth noting that these three areas of skill – physical, interpersonal, and intellectual – arose in that order in evolutionary history. So perhaps we should expect that our intellectual skills will be the easiest to match, and our physical skills the hardest, having been refined over hundreds of millions of years.

In subsequent posts, I’ll explore the implications of AI superiority, how we might think about it, and – most important – how we can steer toward positive outcomes. In this post, I’ll explain why I do believe that machines will overtake us intellectually, and will do so within a matter of decades.

The Argument In Brief

1. There is nothing magic about our brains, at least regarding intellectual ability; anything they do can in principle be done by a machine.
2. Neural nets, in the way we understand them today, are a sufficient sort of machine.
3. If machines can approximate human intellectual ability, they can exceed it.

I’ll explain each step briefly, and then expand each one in its own section.

Step 1 states that the cognitive abilities that allow us to diagnose illness, make scientific discoveries, and process insurance claims rely solely on signals passing from neuron to neuron through chemical processes involving synapses, neurotransmitters, and so forth: mechanisms that can be replicated artificially. In other words, if there is anything extra-scientific or merely “exotic” taking place inside the brain, such as a soul or Penrose-Hameroff microtubule quantum effects (do not ask), it is not important to our intellectual capacity.

Step 2 states that the basic approaches we use today to design and train neural nets such as GPT-4 are sufficient to reproduce human intellectual ability. I do not expect that a straightforward extrapolation of current large language models (LLMs) – i.e. a hypothetical GPT-5, GPT-6, or GPT-7 – will suffice. I’ve previously written about significant gaps between current LLMs and human performance on a task such as software engineering; I don’t think that we can close those gaps simply by adding even more training data and alchemical tweaks. But neither do I think that we’ll need any fundamental breakthroughs in our approach to neural networks. I think the needed improvements will be of a similar order to the invention of the “transformer” architecture that made ChatGPT possible: some new ideas in the specific design of the neural net and the way it is trained, but not a wholesale rethink.

Step 3 states that the wheel of progress is unlikely to grind to a halt just at the point where AI has matched human ability. Just as a forklift can carry more than we can, AIs will be able to think harder than we do.

I think the argument for step 1 is extremely strong, and for step 3 very strong. Step 2 relies more on intuition and handwaving. See what you think, and please share any skeptical thoughts in the comments!

There’s Nowhere Left for Magic to Hide

There is nothing magic about our brains, at least regarding intellectual ability; anything they do can in principle be done by a machine.

Again, at this step I am merely arguing that in principle, it should be possible to construct a machine that can think as well as a person does. Borrowing from my post on how to evaluate machine intelligence, I’ll define this as the ability to hold down a wide range of knowledge-worker jobs, from journalist to software engineer to insurance adjuster.

This is a narrow definition of “thinking”. I’m not going to touch the question of whether a machine can have feelings or consciousness (as opposed to convincingly pretending to have those things), let alone a soul. If you know what a p-zombie is, I’m not going there; if you don’t, count yourself lucky[1]. This will be a qualia-free zone.

Under this narrow definition, my guess is that most readers of this blog will take it as a given that a machine could think – or, if you prefer, that it could act as if it is thinking. That this would not require any divine intervention or magic. Still, I’ll take a little time to reinforce the idea.

I’ll come at it from both ends: what evidence do we have that the brain (or those aspects of it necessary for thinking) is just a machine? And what evidence do we have that artificial machines can think?

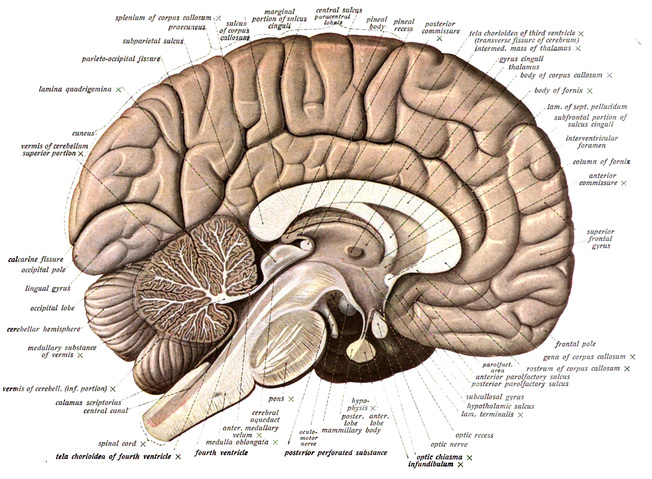

Regarding the brain, we’ve puzzled out the internal mechanisms to a remarkable level of detail. We understand how the brain is divided into regions – the amygdala, hippocampus, temporal lobes, and so forth. We can see how neurons connect to one another, we know the neurotransmitters that flow across those connections, we can observe connections becoming stronger as we learn, we can observe unnecessary connections fading away.

There is still much that we do not understand, but we have already found innumerable ways in which the chemical details of the brain align with the observed behavior of “thinking”. Animals with larger brains are generally more intelligent. Physical damage to the brain results in impaired thinking. fMRI scans show patterns of neural activity that correspond to thought patterns, to the point where we are beginning to be able to use fMRI images to perform crude mind reading tricks. In patients undergoing brain surgery, we can observe that stimulating a single neuron can trigger a specific mental image. We can trace the specific neural circuits responsible for some reflexive actions. If the electrochemical processes in the brain are not carrying out the actual work of thinking, someone is going to an awful lot of trouble to make it seem as if they were.

Working from the other end, machines have been steadily knocking off problems that come closer and closer to “thinking”. Initially, computers were only able to perform narrow, rigid tasks, such as playing chess, and they did it in ways that were clearly very different from how people do it. But in recent years, progress in deep learning has yielded programs like DALL-E and ChatGPT, which can handle a much broader array of tasks. Importantly, they seem to do so in a manner somewhat more akin to human thought, exhibiting quirks and errors that, while not completely human, seem more human-like. They aren’t all the way there yet, but if you want to claim that there is a line that computers will never be able to cross, it’s getting tougher to draw it in a place that computers haven’t already reached.

Between the neurological evidence that thinking is based on chemical processes, and the practical demonstrations that silicon computers can do things that look more and more like thinking, I’m not sure there is any remaining room for “magic” to hide in our heads.

Deep Learning Is Deep Enough

Neural nets, in the way we understand them today, are a sufficient sort of machine [to create a general intelligence].

As I mentioned earlier, this is probably the weakest step of the argument. I believe that it is likely to be true, but with less confidence than the other two steps. In any case, if it turns out that some fundamental breakthrough is necessary to create human-level artificial intelligence, that would not invalidate the idea that AIs will eventually exceed our abilities. It would merely push back the timeline. I’ll explore the question of timelines more deeply in a subsequent post.

The intuition I’m trying to express is that, with the recent development of techniques that allow us to train very large and capable neural networks – the sorts of techniques that led to programs like Midjourney, DALL-E, AlphaFold2[2], and ChatGPT – we are now on a path of steady upward progress that will lead to human-level general intelligence.

This would be a departure from past experience. Previous approaches to AI, such as “expert systems” and “symbolic systems” (aka GOFAI, for Good Old-Fashioned AI, the sorts of ideas that dominated the field for much of the 20th century), achieved success for some specific tasks. They enabled computers to play chess and detect credit card fraud. However, these systems were generally brittle, and success on one task often did not lead to success on others. Progress moved in fits and starts, with many disappointments, leading to multiple periods of “AI winter” (a temporary collapse of funding and research activity after overblown expectations weren’t met).

More recently, by contrast, AI has been moving from strength to strength. In the last seven years, we’ve seen computers match or exceed typical human performance at tasks ranging from Go and poker, to creating visual images, to recognizing speech. There have been astonishing strides in the difficult task of predicting the structure of protein molecules. And most recently, of course, the rapid progress of large language models, as exemplified (in rapid succession) by GPT-3, GPT-3.5, and GPT-4.

All of these systems are based on neural networks. All exhibit a flexibility and robustness that is a bit more like human intelligence, and much less like the brittle expert systems or symbolic systems of old. And all of them are based primarily on the neural network “learning” its task through training on repeated examples, rather than having to be explicitly programmed with knowledge of chess, or protein structures, or English grammar.

The human brain is incredibly adaptable. Some novel I once read noted that our brains are “designed to haul our sorry asses across the savannah, and not much upgraded since then”. And yet we can determine the structure of the atom, or engineer a suspension bridge. A child born blind will devote more of their brain to other senses. Most people can learn to juggle merely by trying and failing for a few hours.

Traditional software has never exhibited this sort of flexibility; conventional programs are notoriously rigid. But neural networks appear to be startlingly adaptable; a network trained for one task often can be quickly adapted to quite different tasks. Deep learning seems to handle almost any task which we can pose in a way that allows for incremental success and vast amounts of training data. The analogy to human brains is not perfect; for one thing, our current process for training neural nets is rather different from the way people learn. But we seem to be in the ballpark, in a way we’ve never been before.
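To make the contrast between explicit programming and learning from examples a bit more concrete, here is a deliberately tiny sketch. It is not how GPT-4 or any real system is built; the task (recovering the rule y = 3x − 2 from example pairs), the function names, and the learning-rate and step-count settings are all made up for illustration. What it shares with deep learning is the basic loop: start with a model that knows nothing, measure how wrong it is on the examples, and nudge its parameters a little in the direction that reduces the error, over and over.

```python
# Toy illustration (hypothetical task and names): a hand-written rule versus
# a two-parameter model that learns the same rule from examples alone.

def explicit_rule(x: float) -> float:
    # The "old" way: a programmer writes the rule down directly.
    return 3.0 * x - 2.0

def train_from_examples(examples, steps=2000, lr=0.01):
    """Fit y ~ w*x + b by gradient descent on mean squared error."""
    w, b = 0.0, 0.0  # start out knowing nothing about the task
    n = len(examples)
    for _ in range(steps):
        # Gradients of the mean squared error with respect to w and b.
        grad_w = sum(2 * (w * x + b - y) * x for x, y in examples) / n
        grad_b = sum(2 * (w * x + b - y) for x, y in examples) / n
        # Take a small step downhill: each step is a tiny "incremental success".
        w -= lr * grad_w
        b -= lr * grad_b
    return w, b

# The learner only ever sees input/output pairs, never the rule itself.
examples = [(x, explicit_rule(x)) for x in range(-5, 6)]
w, b = train_from_examples(examples)
print(f"learned w={w:.3f}, b={b:.3f}")  # prints: learned w=3.000, b=-2.000
```

The trained model ends up matching the hand-written rule even though nobody told it the rule. Real networks apply the same idea, in spirit, with billions of parameters and far messier tasks.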

In What Will It Take For An AI To Do My Job?, I attempted to outline the missing capabilities that would need to be addressed before AIs can undertake serious software engineering. The list includes things like memory and exploratory problem solving, which strike me as tractable within the existing deep learning paradigm. We’ll likely need some clever new ideas for network architecture. And we’ll need to find a way to present the task of exploratory problem solving in such a way that a network can be trained on it. Given the scale of resources being poured into the field, these seem achievable.

I’ll wrap up this section by noting that in the past, breakthrough achievements have fallen into two categories. The first is things that are barely possible, straining the limit of the underlying science. For instance, the Apollo moon landings required every component of the rocket to be optimized to the utmost of our abilities: the most powerful engines, the thinnest hull, the minimal power supply. Such hyper-optimized solutions tend not to be applicable beyond the exact purpose for which they were originally developed[3].

The second category generally involves finding a new way to take advantage of basic capabilities that were already present. For example, the Internet was a new way to use existing computers and connections (such as telephone lines). The iPhone App Store was just a new way to use the phone’s general-purpose processor and data connection. These were both flexible, robust systems, and each triggered a Cambrian explosion of use cases.

Deep learning in general, and large language models like GPT in particular, seem to fall firmly in the latter category, which suggests that we can expect further rapid progress. This reinforces my belief that we will find ways to close the gaps between GPT-4 and more general intelligence.

Once Machines Make It Onto the Playing Field, They Win

If machines can approximate human intellectual ability, they can exceed it.

The third leg of my argument is the assertion that, once we are able to create an AI that performs general intellectual tasks at a human level, we will then progress to create systems even more powerful than that. I’ll present several justifications for this assertion.

1. There’s no reason to believe that human ability, specifically, represents any sort of fundamental limit of intelligence.

Human abilities result from the vagaries of evolution: the needs imposed on us by our environment, and the capabilities that were possible within the limits of our body structure, calorie intake, and so forth. Pick any human attribute – strength, endurance, intelligence, creativity, wisdom, dexterity, memory. I’m not aware of any principled argument that human ability on any of these axes represents any sort of limit. Many animals exceed us in physical attributes. Computers have been steadily picking off intellectual achievements. To argue that there is something special about human-level intelligence, such that a machine could not exceed it, you would have to explain:

Why this limit does not apply to areas in which machines have already outclassed us, from playing chess to predicting protein structures.

Why it is possible to reach the fundamental limit of intelligence in a brain weighing just three pounds, designed to fit through the birth canal and operate on about 20 watts of power[4]. In other words, why a brain not subject to these constraints could not be more intelligent.

Why we periodically see individuals of special genius – John von Neumann, Srinivasa Ramanujan – who can perform intellectual feats that seem otherwise superhuman. If human ability is a limit, why can these individuals exceed it? Or is the limit somehow defined by these rare individuals?

In short, any suggestion that we are the smartest beings that could possibly exist is probably akin to a primitive metallurgist speculating that his charcoal fire is the hottest thing that could ever exist.

2. Evolution had no reason to push us toward the kinds of abilities that are critical to knowledge work today.

Recall that I am anchoring my concept of “human-level intelligence” on the ability to hold down a wide range of knowledge-worker jobs. Many of the activities involved in such jobs are relatively new in human history. Go back a few hundred years – an eyeblink, in evolutionary terms – and most people earned their living via farming or other low-tech occupations. There was little need to work with complex abstract systems, or stay abreast of a perpetual flood of new scientific research. We haven’t been scientists and technologists for very long; there’s no reason to believe we are evolutionarily predisposed to do the best possible job of it.

3. Historically, whenever machines match human ability in a given area, they quickly exceed it.

Any low-end car can go much faster than a person can run. A forklift can carry vastly more than a weightlifter. Human players can no longer seriously contest the best chess programs. It would be ludicrous to even compare a human librarian to Google for speed and breadth of information retrieval, or a mathematician to a computer for computing digits of π[5]. Once machines are able to seriously compete with average human ability at a given task, they typically roar right on past, into superhuman territory.

Of course, while machines routinely exceed human ability at specific tasks, historically they do so in narrow ways. A car will outdistance a person on a paved road, but not in a dense forest, steep mountainside, or interior hallway. An Apple II can be programmed to compute more digits of π than any person could, but can’t develop new mathematical theorems. That’s why the flexibility and generality of systems based on deep learning is so important[6].

4. AIs will have massive strengths to compensate for any weaknesses.

In What GPT-4 Does Is Less Like “Figuring Out” and More Like “Already Knowing”, I argued that GPT-4 uses a massive knowledge base to compensate for shortcomings in reasoning ability. To work around any shortcomings, future AIs will be able to draw on a variety of strengths, likely including (but not limited to):

Speed: GPT-4 can already type quite a bit faster than I can read or talk. It’s hard to say for certain how the processing speed of a hypothetical “generally intelligent” AI will compare, but Moore’s Law hasn’t fully run its course yet, and there’s plenty of room for further improvements in neural network architecture and chip design. At a guess, the AIs of a few decades from now will be able to think much faster than we do, giving them the time to reason their way through any tasks that they find difficult.

Endurance: the ability to plug away at full capacity, 24 hours a day, 365 days a year, without getting tired, bored, unmotivated, distracted, or sick. I don’t think enough time has elapsed for me to use that Terminator meme again, but seriously, It Will Not Stop until it has finished whatever damn thing you told it to do[7].

Numbers: again, this is just a guess, but it seems likely that future AIs will be cheaper than a paid human employee, especially when considering overhead: not just benefits and (for those who still use it) office space, but hiring, management, and other soft costs. This implies that it would be economically feasible to use multiple AI instances, possibly many instances, to replace a single person. And I’d expect AIs to work together more effectively than we can, for a variety of reasons, beginning with the fact that instances of the same base AI should have few problems with miscommunication.

Knowledge base: already today, GPT-4 has a far broader base of general knowledge than any human.

Integration: people are already extending language models with “plugins”, giving them access to everything from databases to Wolfram Alpha to the ability to write and execute custom programs on the fly. This integration will only become deeper over time, to the point where an AI will be able to run database queries, perform complex mathematical operations, or look something up in a technical manual as casually as you glance at your watch to tell the time[8].

Wrapping Up

I think the evidence is very clear that, at some point, machines will exceed human intelligence. We can see that there is no magic, nothing beyond the reach of science, in the mechanisms that allow us to perform intellectual tasks. And for things that fall within the reach of science, we have consistently found ways to create machines that outperform people.

I furthermore think that we will find ways of accomplishing this within decades, without any fundamental scientific advances. The evidence here is admittedly fuzzier, and I’ll try to develop these ideas further in a subsequent post. But at a minimum, I think we must assume that there is a strong possibility that AI with profoundly impactful capabilities will emerge in the coming decades, and therefore that it is time to begin thinking about the implications.

Of course this is, as the expression goes, “huge if true”. I’ll be discussing the implications in subsequent posts. If you don’t find these ideas convincing, I would love for you to say so in the comments, as that means I have something to learn, I haven’t expressed myself well, or both. What ceiling do you see on machine intelligence? What imposes that ceiling? If you think my suggested timeline is too aggressive, what aspects do you think will take longer to develop?

Finally: I’m looking for folks to provide feedback on drafts of future posts. No expertise is required: while I appreciate feedback on technical points, I’m equally interested in knowing whether these posts are comprehensible and interesting. If you’d be interested in looking at advance drafts, please drop me a line at amistrongeryet@substack.com.

[1] OK fine. “P-zombie” is short for “philosophical zombie”. Per Wikipedia: “A philosophical zombie argument is a thought experiment in philosophy of mind which imagines a being that is physically identical to a normal person, but does not have conscious experience.” In other words, an entity that looks and acts exactly like a person, but somehow isn’t “really there” on the inside; such a being would act as if they had an internal experience, felt emotions and pain, and so forth, but would actually be a hollow automaton that lacked those things.

Is it possible for us to create a machine that convincingly acts like a conscious entity, but is actually a p-zombie? Is it possible for us to create a machine that is not a p-zombie? Are you and I, somehow, actually p-zombies? I ain’t going there.

[2] A system for predicting the 3-D structure of protein molecules.

[3] Famously, the space race helped spur the development of many technologies which found applications elsewhere, from solar panels to integrated circuits. However, the major components of the Apollo program, such as the Saturn V rocket, were only used a few more times after the moon landings, mainly for Skylab.

[4] The brain consumes a few hundred dietary calories (kilocalories) per day, which translates to about 20 watts, though of course this varies by individual and time of day.
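As a quick sanity check on the arithmetic in this footnote, here is a small sketch that converts between watts and calories per day. It assumes “calories” means dietary calories (kilocalories, 4,184 joules each); the function names are just illustrative.

```python
# Back-of-the-envelope conversion between power (watts) and daily energy use.
# Assumption: "calories" means dietary calories, i.e. kilocalories.
JOULES_PER_KCAL = 4184            # 1 kilocalorie = 4,184 joules
SECONDS_PER_DAY = 24 * 60 * 60    # 86,400 seconds

def watts_to_kcal_per_day(watts: float) -> float:
    # A watt is one joule per second; accumulate over a day, then convert to kcal.
    return watts * SECONDS_PER_DAY / JOULES_PER_KCAL

def kcal_per_day_to_watts(kcal: float) -> float:
    # Inverse conversion: daily kcal back to an average power draw.
    return kcal * JOULES_PER_KCAL / SECONDS_PER_DAY

print(round(watts_to_kcal_per_day(20)))  # prints: 413
```

So a steady 20 watts works out to roughly 400 kilocalories per day, consistent with “a few hundred calories”.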

[5] Which is not to say that librarians or mathematicians are of no use today – far from it! – just that they’ve moved on to other tasks.

[6] See, for instance, the Sparks of Artificial General Intelligence paper from Microsoft Research.

[7] Or, ahem, whatever damn thing it has decided to do instead. I’ll get to “alignment” problems in another post.

[8] Granted, for myself and many other folks nowadays, glancing at a watch to tell the time is actually a slow process; step one involves driving to the store to buy a watch. But allow me my colloquialisms.

Architecturally, we don't know how to get really adaptive, goal-oriented behavior. I don't think this is a transformer-sized problem - this is the problem it took hundreds of millions of years of evolution to solve. Language, on the other hand, just took a few hundred thousand years after the hard part of adaptive organisms was solved. Or as Hans Moravec put it, abstract thought "is a new trick, perhaps less than 100 thousand years old….effective only because it is supported by this much older and much more powerful, though usually unconscious, sensorimotor knowledge." See the NeuroAI paper from Yann LeCun and others: https://www.nature.com/articles/s41467-023-37180-x

That doesn't mean AI won't surpass us at many tasks, but general-purpose agents (give them a very high-level goal and walk away) would likely require more than one breakthrough.

> The implications range from “curing cancer” to “100% unemployment rate”, and go on from there. It sounds crazy as I type it, but I can’t look at current trends and see any other trajectory.

If you want a counterexample to get your imagination going, a good one is driverless cars. Somehow, they are better (safer) but not good enough for widespread use? We have high standards for machines in safety-related fields. People are grandfathered in, even though we're often bad drivers. And there's no physical reason driverless cars can't work, no fundamental barrier.

Going from AI to "curing cancer" seems like an absurd overreach? There are new treatments, often very good, but they didn't need AI, and it's not clear how useful AI will be. Also, I would put medicine in the physical realm, which you've said you want to exclude?

It seems like this is easier to think about if we ban the word "intelligence" (poorly defined for machines) and just talk about tasks. It's often true that, for a given well-defined task, once a machine can do it as well as a person, the machine can be improved to do the task better. Or if not better, cheaper. Lots of tasks have been automated already using computers, and I expect it to continue. We can also change the task to make it more feasible for a machine to do it. It happens all the time.

But beware survivorship bias. The machines you can think of survived in the marketplace because they were better along enough dimensions to keep using. But there are also many failures.