Dario Amodei, the CEO of Anthropic, recently predicted that AGI (loosely, AI that can do anything people can do) will most likely arrive within a handful of years. He cites the rapid rate of progress:

…if you just eyeball the rate at which these capabilities are increasing, it does make you think that we’ll get there by 2026 or 2027… I think the most likely is that there are some mild delay relative to that. I don’t know what that delay is, but I think it could happen on schedule. … We are rapidly running out of truly convincing blockers, truly compelling reasons why this will not happen in the next few years.

Meanwhile, critics argue that AI may be plateauing. They point to a general lack of advancement in core intelligence since the release of GPT-4 in March 2023[1], the continued tendency for models to make simple mistakes (such as incorrectly counting the number of r’s in “strawberry”), the “slop” quality of much AI writing, and the lack of success in creating models that cannot be tricked into misbehaving or revealing private data.

Who is right? Is AI advancing rapidly, soon to be able to do everything people can? Or is AGI still a long way off?

This is a false dichotomy. AI has not been plateauing, but AGI is not near at hand. Progress toward a destination depends not just on how quickly you travel, but also on how far you have to go.

Some Things AI Can’t Yet Do

Here are some tasks you can’t yet hand to an AI: managing a room full of third-graders. Writing a tightly plotted novel. Troubleshooting an underperforming team. Planning a marketing campaign. Reminiscing with an old friend.

You might object that most of these are “cheating”. Of course an AI can’t manage a third-grade classroom; you need a physical presence for that. Of course it can’t reminisce with an old friend; it doesn’t know your history together. But that’s exactly my point: most of the time, when we evaluate AI capabilities, there are all sorts of tasks that we don’t even consider, because AIs aren’t yet eligible to attempt them.

This is a theme I bring up repeatedly[2], because it’s widely under-appreciated. We evaluate AI on tasks that are easy to evaluate – passing the bar exam, rather than practicing law. A task is easy to evaluate if it can be neatly encapsulated (doesn’t require a lot of outside context) and has clear right and wrong answers. These also happen to be precisely the easiest tasks to train an AI on. We look at AI through AI-colored glasses; no wonder it appears so bright.

As things stand today, it would be nonsensical to give a general AI a task that requires drawing on extensive context (troubleshooting a team), satisfying a large web of intricate constraints (writing that tightly-plotted novel), or juggling a complex set of variables (nothing is more complex or variable than a room full of third-graders). When we think about what AIs can and can’t do, our attention is drawn to the plausible frontier. We don’t consider tasks for which current AIs aren’t even on the playing field.

Dragons Lie Ahead, Probably

None of this is proof that AGI is far away. But it’s suggestive. Because AIs are still ineligible to attempt large swathes of human endeavor, we don’t have any visibility into the challenges that might await. Maybe all of the necessary behaviors will come “for free” as the big AI labs continue to scale up, but it seems unlikely.

For an analogy, suppose a robot is developed which can win Olympic gold at downhill skiing, followed closely by victories in gymnastics, swimming, and climbing. It might appear that it will soon sweep the entire list of events… until you realize that it has not yet attempted any sport that involves interaction with an opponent (tennis), teammates (volleyball), or direct physical contact (wrestling). You then realize that the robot is completely reliant on preprogrammed plans, without any ability to react on the fly; that it has no concept of anticipating another’s movements; and that it does not know how to communicate. Suddenly an “artificial general athlete” looks much farther off.

In previous posts, I’ve gestured at new categories of capability, such as long-term memory, that may not emerge from straightforward scaling of current systems like GPT-4 and o1. But the real challenges may be things that we can’t easily anticipate right now, weaknesses that we will only start to put our finger on when we observe Claude 5 or o3 performing astonishing feats and yet somehow still not being able to write that tightly-plotted novel.

We’ve already seen at least one example. Arguably, in the last 20 months, the biggest news in the “intelligence” part of artificial intelligence was o1’s ability to sustain an extended chain of reasoning. That didn’t fall out of increased scale; it required the introduction of a new training approach, rumors of which had been circulating for a long time under code names like “Q*” and “Strawberry”. More breakthroughs of this nature may be needed; I’ve been saying as much since I first fell down the AI rabbit hole early last year[3]. And we may need to wait until we bump up against the limits of the current approach before we’re even able to identify the fresh challenges that await.

The Pace of Progress Really Is Amazing

None of this is meant to take anything away from the capabilities of current AI systems. The pace really is quite astonishing.

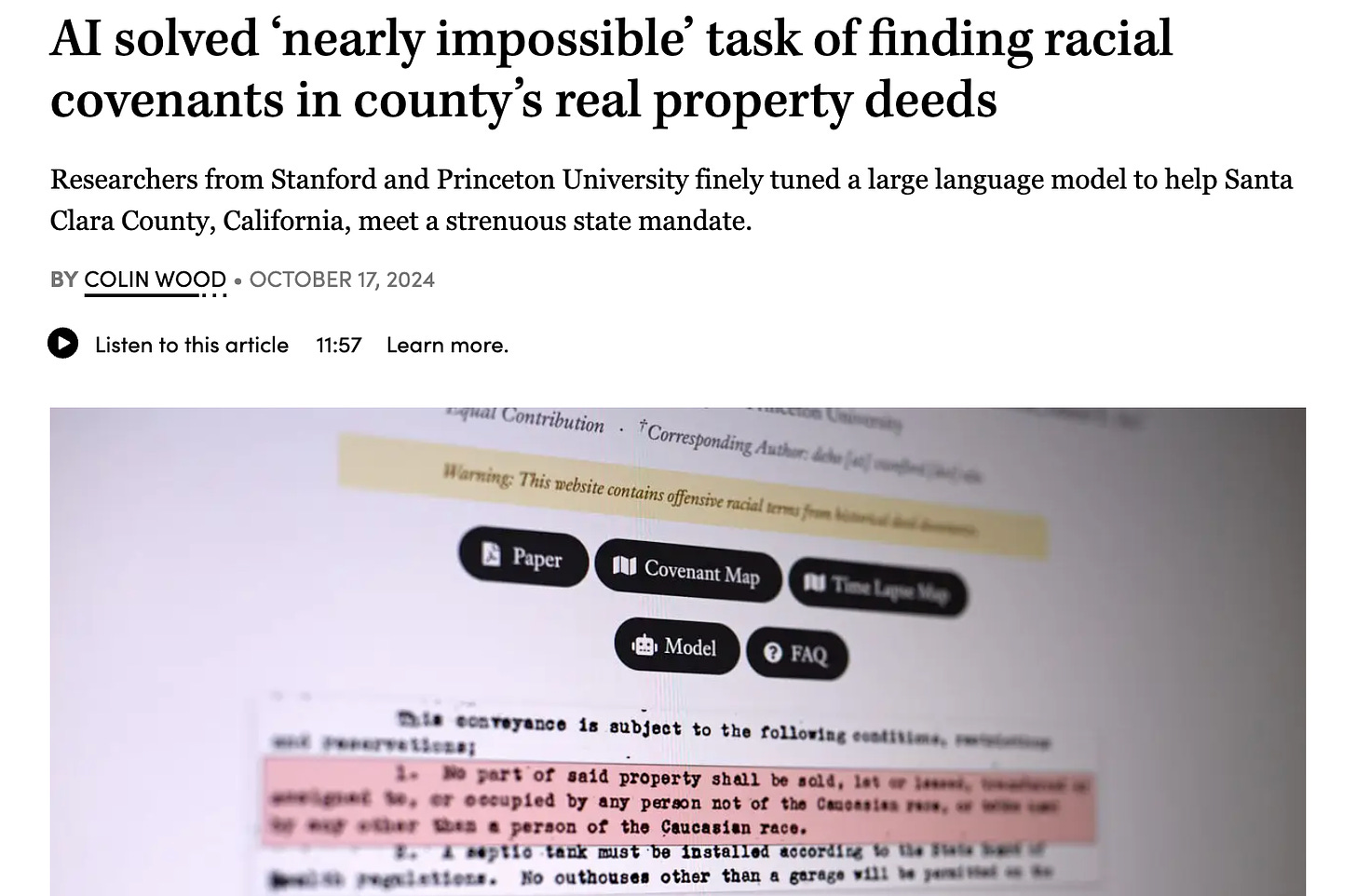

People are already accomplishing amazing things with AI: for instance, identifying all of the illegal racial covenants in an entire county by sifting through 84 million pages of property records in a few days; exporting data from a set of email messages by simply recording a movie of the computer screen; and writing 25% of all new code at Google[4]. The models are going to keep getting better – from OpenAI alone, we don’t yet have the final release of o1, and GPT-5 and o2 both appear to be in the pipeline. Equally important, we are still in the early days of figuring out how to make practical use of the AI models we already have. If foundation model development stopped today, we’d spend a decade finding all the applications[5]. Even in this pre-AGI stage, AI will have a larger impact than many people realize.

What About, You Know, AIs Making Smarter AIs?

There’s a lot of talk about AIs being used to advance AI research. Already, engineers at the big AI labs are using code-writing tools to offload tedious work. Google’s AlphaChip “has been used to design superhuman chip layouts in the last three generations of Google’s custom AI accelerator”. OpenAI is reportedly using o1 to generate training data for GPT-5[6].

On our current path, might we build an AI that is smart enough to sprint ahead with its own development, without requiring further (human) breakthroughs? Conceivably, but I doubt it. As I wrote last year, this “recursive self-improvement”, or RSI, will not necessarily be the slam-dunk route to an intelligence explosion that some folks expect.

Even if RSI does eventually lead to a dramatic takeoff, we are likely several breakthroughs away from the launch point. For AIs to play the leading role in their own improvement, they will need exactly the sort of creativity, insight, and broad, coherent competence that appears to require new techniques like the one behind o1 – techniques that, for now, still require human ingenuity.

Riding a Speedboat Across the Pacific

People usually frame AI progress in terms of a single variable: the rate of progress. They think of AI either as a speedboat rapidly crossing a lake, or as something more like a rowboat whose tired occupant, after an initial burst of speed, still has a long journey ahead. I think a better analogy would be a speedboat crossing the Pacific Ocean.

Current AIs are both astoundingly useful and limited in many ways. The ocean we call “intelligence” is wider than we can easily appreciate, and will take a long time to cross. Many exciting adventures await us along the way.

Thanks to Andrew Miller, Lauren Gilbert and Rob L'Heureux.

[1] An argument that looks a bit shakier after the recent release of OpenAI’s o1 model.

[2] See, for instance, “Toward Better AI Milestones” and “The AI Progress Paradox”.

[3] For example, in “I’m a Senior Software Engineer. What Will It Take For An AI To Do My Job?”, written back in April 2023 as only the second post on this blog:

I don’t know whether my job will eventually be taken by an LLM or something else. But either way, I expect that it will require a number of fundamental advances, on the level of the “transformers” paper that kicked off the current progress in language models back in 2017.

[4] Note: I presume that this 25% is mostly routine boilerplate code, and constitutes much less than 25% of the value added by Google’s engineering team. Also, actual writing of code is only a part of what “coders” do. But still!

[5] Thanks to Rob L'Heureux for this observation.

[6] Or at least, for an upcoming model, which might or might not be released under the name “GPT-5”.

As usual, a great post! I'm curious what you think about https://sakana.ai/ai-scientist/

Your overall point is a fair one, but the specific examples don't support it as well as I'd like; they aren't fully compelling. Most of the things you cite as unattainable by current AI - managing a school classroom, troubleshooting an underperforming team, reminiscing with an old friend - are not really limitations of AI or current models, but other challenges that we could likely solve functionally with other technology, rule-setting, granting access to existing data, granting authority, etc.

In a school context, you put a robot or terminal in there and introduce it, likely as a one-time necessary transition (you would probably want to introduce a new teacher too): "Class, you need to obey the robot on this screen in the classroom. We've installed cameras and microphones in hidden locations throughout the room; it knows what you're doing at all times and will instruct and discipline you." You might argue that the robot can't *do* anything to discipline, but a real teacher's abilities there are highly limited without corporal punishment too. And in fact an AI that the students know is recording the entire classroom might well be *better* obeyed than an average teacher. Regardless, little to none of the challenge in this scenario has to do with AI "intelligence" or advancement. We have highly sophisticated image recognition and analysis (see self-driving cars like Waymo), we can train it on a library of videos of kids behaving and misbehaving, it can recognize kids by name with current tech, it can communicate instructions, etc. It might not go great, but it wouldn't fail on a fundamental level, and directly tuning the AI for that scenario based on real-world performance would likely yield very good performance within a generation or two.

Troubleshooting an underperforming team? Give the AI access to the email server and Slack history, identify the accounts/individuals in a given team (or let the AI analyze the provided info and figure it out; it probably could!), then put in its prompt to "determine issues of communications, performance, etc. that are impeding progress toward goals and overall success of the team", and it'd probably do just fine. I would in fact argue that due to (1) deep access, (2) the ability to operate at scale well beyond humans, and (3) a lack of emotional bias (it has other biases, but not "emotional" ones), it might actually do *better* than an average human manager. A *skilled* manager's human intuition might do better, but it's at least worth challenging it. 😁

Reminiscing with an old friend? Obviously not a technical limitation but one of history and information. But hey, let's see what we can do. We have to assume that the AI needs to "know" the relationship in order to do this, so access to historical information is essentially a given (this is a necessary condition for the prompt, so we can fairly ignore the associated cultural/social discomforts). With that in mind, give the AI your complete SMS/iMessage/email/chat history with this person, along with any photos (AI can extract locations and dates, e.g. for trips taken together, infer context through visual analysis, use facial recognition to identify other people who commonly appear with them, etc.). I bet it could do a decent job, especially with the latest voice synthesis tech.

I'm not saying these things are trivial or would be done any time soon, or ever (e.g. being comfortable giving an AI full-time audio access to your conversations with friends and your past email and texts with them), but again, those are cultural and social limitations more than technical ones. All of these things, and your other examples as well, are either amenable to solutions that don't require AI advancement, or seem pretty likely to be solvable simply with the will and desire to do so, plus the cultural willingness to do what's necessary to accomplish that (e.g. give an AI control of a classroom of kids). Once you've decided you are even *willing* to solve a problem with AI, you can then optimize for that, and to me current tech seems largely able to handle most of this. So I think your point is probably correct, but your case for it feels like it needs some more development, particularly (for me) around compelling scenarios where AI intelligence is the limiting factor.