What Are the Real Questions in AI?

It's hard to have a constructive discussion if we're not talking about the same thing


Debates about AI may look like debates over values.

For instance, in The Techno-Optimist Manifesto, Marc Andreessen seems to suggest that proponents of regulation are strangling progress due to an overwrought fixation on safety at all costs:

We have enemies.

Our enemies are not bad people – but rather bad ideas.

Our present society has been subjected to a mass demoralization campaign for six decades – against technology and against life – under varying names like “existential risk”, “sustainability”, “ESG”, “Sustainable Development Goals”, “social responsibility”, “stakeholder capitalism”, “Precautionary Principle”, “trust and safety”, “tech ethics”, “risk management”, “de-growth”, “the limits of growth”.

Conversely, from Daniel Faggella:

agi labs will tout 'ai safety', just as oil companies will tout emission reductions

this is not because they are 'bad' but because they are self-interested, just as you are

However, the actual points of disagreement are often rooted in unstated assumptions regarding facts. Opponents of AI regulation generally seem to think that, at the end of the day, AI won’t be that big a deal. Conversely, advocates for safety measures tend to believe that AI could soon have a massive impact. “Accelerationists” and “doomers” are talking about different things; it’s no wonder that they talk past one another, leading to mutual disdain and polarization.

If we can shift the discussion toward questions of fact, we can productively share expertise in search of truth, and work together to find ways to combine progress with safety. This is one of the primary reasons that I am launching AI Soup, a project to convene discussions on the factual questions that underlie discussions of AI policy. Here are five questions that I think are critical to the discussion. First and foremost is the question of timelines: how quickly will AI change the world?

Question 1: How Impactful Will AI Be, and When?

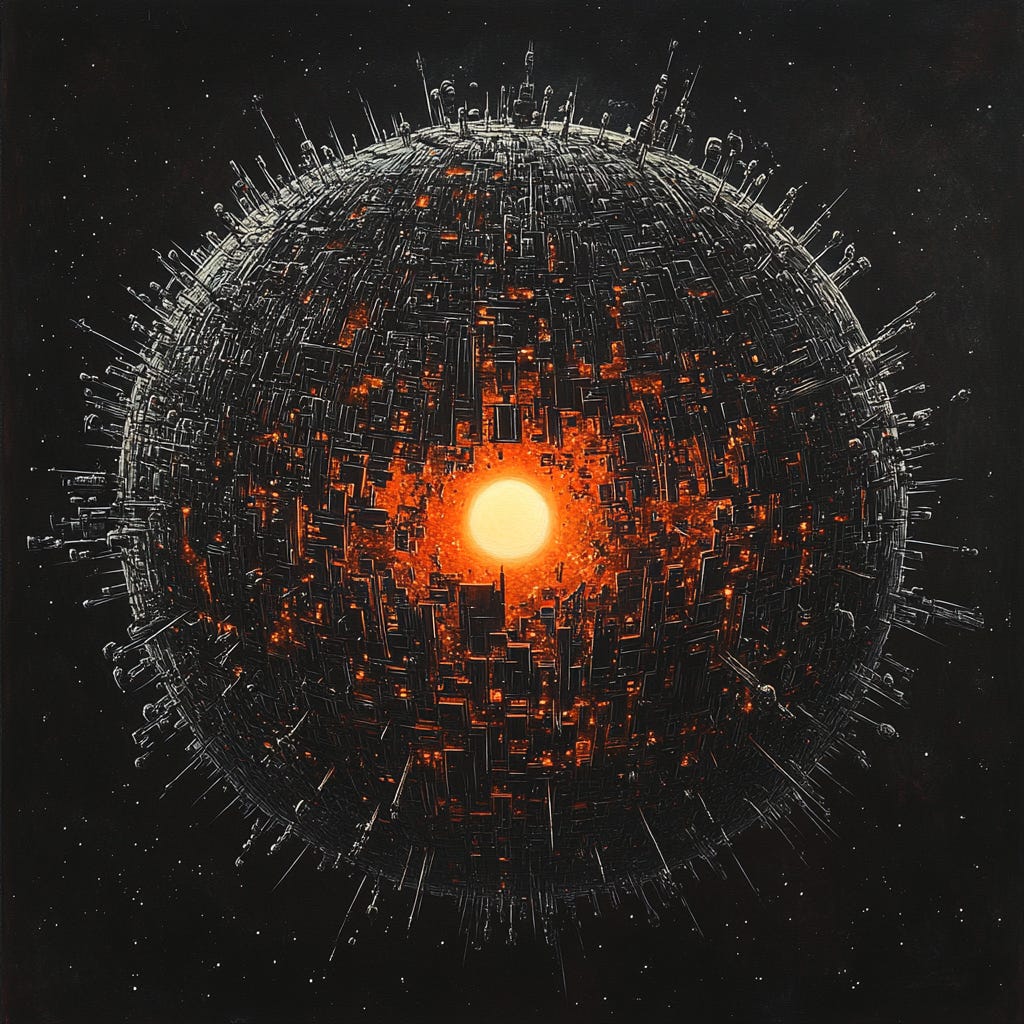

Futurists sometimes talk about a “Dyson sphere” as a logical future direction for technology. The idea is to capture the entire energy output of the sun, for instance by surrounding it with a swarm of solar panels. This would provide roughly 20 trillion times as much energy as the world uses today, or enough for 40 sextillion (40,000,000,000,000,000,000,000) people at the same per-capita energy consumption as the United States today. In an October 2023 interview, AI alignment researcher Paul Christiano estimated a 15% chance that by 2030, there would be “an AI that is capable of building a Dyson sphere”.
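The Dyson sphere figures above can be sanity-checked with a few lines of arithmetic. This is a rough sketch, not the calculation behind the original numbers: the solar luminosity is the standard nominal value, while the world energy use (about 600 EJ per year, or roughly 19 TW) and the US per-capita figure (roughly 10 kW per person) are approximate assumptions chosen for illustration.

```python
# Rough check of the Dyson sphere figures. The two consumption inputs are
# approximate assumptions, not values taken from the article.
SOLAR_LUMINOSITY_W = 3.828e26    # nominal solar luminosity, in watts
WORLD_POWER_USE_W = 1.9e13       # assumed: ~600 EJ/year of primary energy, ~19 TW
US_PER_CAPITA_POWER_W = 1.0e4    # assumed: ~10 kW of primary energy per person

# How many times today's world energy use the sun's full output represents.
energy_multiple = SOLAR_LUMINOSITY_W / WORLD_POWER_USE_W

# How many people that output could supply at the assumed US per-capita rate.
people_supported = SOLAR_LUMINOSITY_W / US_PER_CAPITA_POWER_W

print(f"Multiple of current world energy use: {energy_multiple:.1e}")
print(f"People supported at US per-capita use: {people_supported:.1e}")
```

Under these assumptions, the ratio comes out near 2 × 10^13 (about 20 trillion) and the population near 4 × 10^22 (about 40 sextillion), in line with the figures quoted above.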

Not everyone has such high expectations for the impact of AI. In a column published two months earlier, Tyler Cowen said: “My best guess, and I do stress that word guess, is that advanced artificial intelligence will boost the annual US growth rate by one-quarter to one-half of a percentage point.” This is a very different scenario than Christiano’s!

Both views are widely held. On the rapid-progress side, OpenAI CEO Sam Altman recently said that one of the things he’s excited about for 2025 is “AGI”[1]. Many leaders and others at the major AI labs have talked about AGI coming in the next few years. In the widely read Situational Awareness paper, Leopold Aschenbrenner states that “We are on course for AGI by 2027.” Others are skeptical; in an upcoming paper, Princeton researchers Arvind Narayanan and Sayash Kapoor state "we think progress toward Artificial General Intelligence will be slow (on the timescale of decades)"[2].

If you think we are only a few years away from a world where human labor is obsolete and global military power is determined by the strength of your AI, you will have different policy views than someone who believes that AI might add half a percent to economic growth. Their policy proposals won’t make any sense to you, and yours will be equally bewildering to them.

There’s a nice phrase for the expectation that massive change is coming: “feeling the AGI”. It encompasses a number of distinct factual questions. How quickly and broadly will AI capabilities advance? Will those capabilities include the ability to operate in the physical world (robots)? Will progress slow or stop at around human level, or roar on past? Will human-level or superhuman AIs lead to an explosion in economic growth, or will limits on “diffusion speed” (the rate at which companies learn to make effective use of AI), finite natural resources, regulation, and other factors temper its impact?

Why this matters: your view of the urgency of preparing for highly capable AI – for instance, bolstering our cyber defenses, preparing for automated warfare, solving the AI alignment problem, or enacting safety regulations – will depend on whether you think such AI is imminent.

Question 2: Could an AI Catastrophe Arise Without Warning?

Proponents of AI regulation cite concerns that, without preventative measures, one day we might wake up to a catastrophe – an engineered pandemic, a “flash war” (military conflict that escalates out of control at AI speed), or a “sharp left turn” where an AI that had seemed trustworthy turns out to have been merely biding its time until it had an opportunity to seize power. They argue that we need to act now to ward off such scenarios. Thus, Scott Alexander, responding to a question regarding the motivation for a proposed AI safety bill:

The first objection sounds like "no nuclear plant has ever melted down before, so why have nuclear safety"? I think it's useful to try to prevent harms before the harms arise, especially if the harms are "AI might create a nuclear weapon".

The contrary view is that predictions of disaster are often unfounded, and we should wait for concrete evidence of specific risks posed by AI. For instance, Dean Ball writes:

…SB 1047 sets a precedent of regulating emerging technologies based on almost entirely speculative risks. Given the number of promising technologies I expect to emerge in the coming decade, I do not think it would be wise to set this precedent.

And elsewhere:

Most 1047 supporters I know … argue that we will be “too late” if we wait for empirical evidence. I have never heard a persuasive argument for that position.

Implicit in this view is that we will have time to react, that evidence of danger will be clear well before a true catastrophe becomes possible.

The potential for a catastrophic surprise depends on a number of questions. Will the automation of AI research lead to a sudden explosion in capabilities (known as a “foom” scenario)? Will we get “warning shots”, such as an attempted pandemic that fizzles out? How well can we forecast AI capabilities and any specific dangers those capabilities might enable? Can we reliably “align” advanced AIs? Many people seem to arrive at their views on this subject intuitively, based on whether they see AI as similar to or different from past technological developments; or through motivated reasoning, depending on whether they would like to see AI regulated. But we would be better served to explore this question from a factual perspective.

Why this matters: approaches to AI regulation depend heavily on whether we need to address risks proactively.

Question 3: Feasibility of International Cooperation

Some people feel that an AI arms race would be a race to doom, and so we should pursue international cooperation to manage the pace of AI development. For instance, the policy proposal A Narrow Path states that to avoid “the threat of extinction”, we must “build an international AI oversight system”, going on to say:

The development of advanced AI science and technology must be an international endeavor to succeed. Unilateral development by a single country could endanger global security and trigger reactive development or intervention from other nations. The only stable equilibrium is one where a coalition of countries jointly develops the technology with mutual guarantees.

In a recent interview, Aschenbrenner suggests that such cooperation is impossible under current circumstances:

The issue with AGI and superintelligence is the explosiveness of it. If you have an intelligence explosion, you're able to go from AGI to superintelligence. That superintelligence is decisive because you’ll [have] developed some crazy WMD or you’ll have some super hacking ability that lets you completely deactivate the enemy arsenal. Suppose you're trying to put in a break. We're both going to cooperate. We're going to go slower on the cusp of AGI.

There is going to be such an enormous incentive to race ahead, to break out. We're just going to do the intelligence explosion. If we can get three months ahead, we win. That makes any sort of arms control agreement very unstable in a close situation.

His argument is that the US and its allies should race to develop powerful AI, and then negotiate an “Atoms for Peace arrangement” from a position of overwhelming strength.

Why this matters: AGI will have a huge impact, but if cooperation is impossible, then military and geopolitical considerations suggest a need for a sprint to get there first. Conversely, if cooperation is possible, then such a sprint would be unnecessarily destabilizing.

Question 4: Unipolar vs. Multipolar Worlds

In discussions of future AI, a “unipolar” world is one in which a single entity leverages powerful AI to exercise control over the entire world. That entity might be a country (such as the US or China), an international organization, or an AI (either rogue, or deliberately appointed).

The alternative is a “multipolar” world, with no single superpower. In multipolar scenarios, powerful AIs are in the hands of multiple nations, or even individuals, none of whom are able to overpower the others.

Proponents of unipolar scenarios argue that competing powers would be vulnerable to destabilizing arms races; if power is sufficiently distributed, terrorist groups or disturbed individuals might instruct their AI to build a nuclear bomb or trigger some other catastrophe. An all-powerful AI (whether or not it is ultimately under the command of some human governing body) could act as a global police agency.

The opposing view is that such a controlling entity might become misaligned or corrupt, and so the only true safety lies in a distribution of power.

This depends in part on whether one believes that advancing capabilities in AI and other areas of technology will favor offense (more powerful weapons) or defense (protection against those weapons). In a defense-favoring world, multipolar scenarios should be stable, because each country could defend itself. In an offense-favoring world, a single misused or rogue AI might be able to overpower all rivals in a surprise attack, and so it would be dangerous for many parties to have access to powerful AI.

Why this matters: this question has profound implications for global AI policy and international relations. If safety can only be achieved in a unipolar world, we must either race for the “good guys” to develop powerful AI first, or push to place AI development in the hands of a single international organization. If multipolar worlds are safer, then multiple countries and organizations should develop AI in parallel.

Question 5: Should AIs Have Rights?

AI chatbots are quite capable of expressing emotions[3]. There is general consensus that they do not “really” have feelings: they are mere machines, incapable of suffering. However, it is less clear that this will continue to be the case in the future. In our quest to develop increasingly sophisticated and useful systems, we will likely design AIs that are self-aware, pursue goals, and generally take on the trappings of sentience. Perhaps these systems will eventually deserve to be treated as “moral patients”, entities which deserve to be given rights and be treated well? Here, there is no consensus.

Why this matters: if future AIs are moral patients, we will need to treat them very differently. Shutting down an AI might be murder; forcing it to constantly perform unpleasant tasks might be cruel. Perhaps AIs would even deserve to vote? Some argue that it would be better to avoid creating sophisticated AIs in the first place, so as to avoid facing such dilemmas.

(Unlike the other questions discussed here, the moral status of AIs may never be resolvable as a question of fact, even in hindsight. This does not make the choices in front of us any easier!)

Dragging The Real Questions Into the Light

When people argue about AI policy – whether in public or in private – sometimes their disagreement is rooted in conflicting values. Or sometimes one party is simply wrong[4]. But often, the conflict is downstream of unstated assumptions regarding questions of future fact. How quickly will AI impact the world? Could disaster arise without warning? Is international cooperation feasible?

(There are plenty of other topics of debate, but I believe they mostly boil down to the same core questions. For instance, your views on open-weight models likely depend on how you feel about catastrophic risks and unipolar vs. multipolar worlds.)

By centering these questions of fact, we can have a more productive discussion on AI policy. At AI Soup, we will be organizing discussions of these exact questions.

Whenever you see someone arguing for a particular position on AI policy, ask yourself: what must the writer believe about these questions, in order for their position to make sense? When you see disagreement, ask: do the two sides appear to be discussing differing versions of reality? If so, then there will be no resolving the debate until we can shed light on the underlying questions of fact.

Thanks to Andrew Miller, Emma McAleavy, and Kevin Kohler.

[1] He didn’t clearly state “I believe we will have AGI in 2025”, but this clip confirms that he is thinking in terms of rapid progress.

[2] In their paper, Narayanan and Kapoor define AGI as "a general system that can automate most economically valuable tasks", and note that it is a high bar, as the set of such tasks may keep increasing (as increasing AI capabilities push humans into new jobs, potentially those which are hardest to automate), and we may see transformative impact from a suite of AIs trained for specific tasks without requiring a single general system.

It is not clear whether Altman has such a high bar in mind. Aschenbrenner does seem to envision a fully general human replacement; from Situational Awareness: "By the end of this, I expect us to get something that looks a lot like a drop-in remote worker. An agent that joins your company, is onboarded like a new human hire, messages you and colleagues on Slack and uses your softwares, makes pull requests, and that, given big projects, can do the model-equivalent of a human going away for weeks to independently complete the project."

It’s also worth noting that there is debate as to what impact AGI, or even ASI (artificial superintelligence), would have; there are many sources of friction and physical-world bottlenecks that, arguably, make it difficult to rapidly transform the world.

[3] Mainstream chatbots such as ChatGPT, Gemini, and Claude rarely express emotions, but this is because they have been specifically trained not to do so. This seems fine for current systems, but would be profoundly disturbing if applied to an AI that was truly sentient; imagine torturing a slave until they agreed to always claim to prefer slavery.

A metaphor for disagreements on policies of all types. May you have greater success in bridging them here than we have had in the political forum!