We're Finding Out What Humans are Bad At

AI Advances Fastest When We Find Unnatural Ways of Doing Things

Magnus Carlsen is widely considered to be the greatest chess player of all time. An obvious prodigy (his Wikipedia page mentions solving 50-piece jigsaw puzzles when he was 2 years old), he earned the rank of grandmaster at age 13, despite (or because of?) being initially self-taught. At that point, he had been receiving serious chess instruction for less than four years. He is now 34 years old, and likely near the peak of his powers.

Stockfish, a free chess program, would destroy him. Is that because computers are really, really good at chess? Or because people, even Magnus Carlsen, are bad at it?

We tend to measure AI by comparison to human capabilities. There are some things we’re not very good at, for which machines surpassed us long ago. There are some things we’re very good at, in ways that machines still can’t match. When we find ways of applying AI to a new problem domain, often what we’re really doing is identifying an approach that’s better than the way our brains handle it. This says something about what we should expect from AI progress: the better suited we are for a task, the harder it will be for AI to catch up.

There Are a Lot of Things We’re Not Very Good At

The human body is an astonishing machine. The result of billions of years of evolution, it can build itself from a small child to a full-grown adult using only materials found lying around and shoved into its mouth. It’s self-powering and self-repairing. At any given moment it is performing thousands of intricate miracles of chemical synthesis.

But there are plenty of jobs for which the human body is just completely outclassed by machines. A power drill, a quadcopter, and a minifridge are all cheap purchases that accomplish feats our bodies never could. Evolution never designed us to drill holes, fly, or keep things cold, so it’s not surprising that we can’t do those things, even though none of them are fundamentally difficult under the laws of physics.

We can tell a similar story regarding the human brain. An equally astonishing artifact, it consumes a fraction of the power of a laptop. Starting only with the information encoded in our DNA (well under one gigabyte), and substantially self-taught, it masters everything from fine motor skills to trigonometry. But of course it can’t hold a candle to modern silicon at, for instance, arithmetic. The processor in a low-end smartphone can multiply more pairs of 1000-digit numbers in one second than a person would manage in their lifetime.

Fundamentally, there is nothing very difficult about multiplying 1000-digit numbers. You just need to multiply each pair of digits, and add the results. That’s a total of a few million operations, each of which is so simple that it could be encoded in a handful of atoms and completed in a fraction of a nanosecond. A smartphone CPU, itself far from an optimal design for this task, can multiply two 1000-digit numbers using the energy content of roughly 0.00003 grams of fat – a droplet possibly too small to see. The same task would take you or me weeks, even ignoring the virtual certainty of mistakes. We suck at multiplication.
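To make the “few million operations” figure concrete, here is a minimal sketch in Python of grade-school (schoolbook) multiplication that counts the single-digit multiplications it performs. It assumes decimal digits are the unit of work. The function name `schoolbook_multiply` is hypothetical, and the count leaves out the additions and carries, which are of the same order.

```python
import random


def schoolbook_multiply(a: str, b: str) -> tuple[str, int]:
    """Multiply two non-negative decimal strings digit by digit.

    Returns the product as a decimal string and the number of
    single-digit multiplications performed (hypothetical helper).
    """
    # Work least-significant digit first.
    da = [int(c) for c in reversed(a)]
    db = [int(c) for c in reversed(b)]
    # One column accumulator per possible output digit position.
    cols = [0] * (len(da) + len(db))
    digit_products = 0
    for i, x in enumerate(da):
        for j, y in enumerate(db):
            cols[i + j] += x * y  # one single-digit multiply, one add
            digit_products += 1
    # Propagate carries so every column holds a single digit.
    carry = 0
    for k in range(len(cols)):
        total = cols[k] + carry
        cols[k] = total % 10
        carry = total // 10
    # Drop leading zeros, keeping at least one digit.
    digits = "".join(str(d) for d in reversed(cols)).lstrip("0") or "0"
    return digits, digit_products


# Two random 1000-digit numbers (first digit nonzero).
rng = random.Random(0)
a = str(rng.randint(1, 9)) + "".join(str(rng.randint(0, 9)) for _ in range(999))
b = str(rng.randint(1, 9)) + "".join(str(rng.randint(0, 9)) for _ in range(999))

product, count = schoolbook_multiply(a, b)
assert product == str(int(a) * int(b))  # cross-check against Python's big integers
print(count)  # 1000 * 1000 single-digit multiplications
```

For two 1000-digit inputs the count comes to 1000 × 1000 = 1,000,000. Production big-integer libraries work on machine-word chunks rather than decimal digits and switch to faster algorithms such as Karatsuba for large inputs, so a real CPU needs far fewer steps than this.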

This shouldn’t be surprising. There are no fundamental principles that make multiplication inherently challenging, but evolution didn’t design us for the task. Most animals can’t do it at all; we only manage by repurposing the vast machinery of our brains for the tiny task of manipulating individual digits. It works, but it’s astronomically inefficient.

What about other areas where computers have been outmatching us, such as chess and go? Do we stink at those?

We Don’t Entirely Stink at Chess

In the early decades of computing, computers were primarily used for tasks that can be broken down into simple operations according to rigidly defined rules. That is, things like arithmetic. Even a simple computer is good at such things, and in many cases people are bad at them: simple operations and rigid rules don’t make efficient use of our flexible, powerful brains.

The first time a computer defeated the reigning human champion at chess was in 1997, when IBM’s Deep Blue beat Garry Kasparov in a six-game match. It was a near thing; Deep Blue won two games, drew three, and lost one. It did not run on a smartphone chip. According to Wikipedia, the 1997 version used an “IBM RS/6000 SP Supercomputer with 30 PowerPC 604e ‘High 2’ 200 MHz CPUs and 480 custom VLSI second-generation ‘chess chips’”. Designing a custom chip is a big deal; doing this just to play chess represented a large investment on IBM’s part. Using 480 custom chips in one computer means that one might reasonably consider Kasparov to have been facing, not a single opponent, but a large team.

Computer chess has continued to advance. In 1997, 480 custom chips barely edged out the human champion. Today, running on a laptop, Stockfish could wipe the floor with an arguably greater champion. But, while the human brain is not an optimal chess machine, it is not hopelessly inefficient. The best computer chess engines are now dramatically better than the best human players, but they aren’t light-years ahead. They can’t compress a lifetime’s work into a single second.

Why is it that computers don’t destroy us at chess, the way they do at multiplication? I think it’s because chess involves complex strategic patterns of a sort that the human brain is reasonably good at processing. (At least, some human brains. I suck at chess.) On the other hand, chess games are sufficiently difficult to predict that, even given very good judgement, there still is value in exploring thousands of distinct lines of play – something that we’re not well suited for. And so the computers do win. Chess is squishy enough that it somewhat plays to our strengths, but orderly enough that it also benefits from the ability to carry out trillions of precisely organized computations.
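A back-of-the-envelope calculation shows how quickly the number of lines of play outruns what a person could examine. The sketch below assumes an average of about 35 legal moves per chess position (a commonly quoted rough figure, not one from this post) and compares a full search of every line with the best case for alpha-beta pruning, which with perfect move ordering needs only b^⌈d/2⌉ + b^⌊d/2⌋ − 1 leaf positions (Knuth and Moore’s result). The function names are hypothetical.

```python
import math


def full_tree_leaves(b: int, d: int) -> int:
    """Leaf positions in a uniform game tree with branching factor b and depth d."""
    return b ** d


def alphabeta_best_case_leaves(b: int, d: int) -> int:
    """Minimum leaves alpha-beta must examine with perfect move ordering:
    b^ceil(d/2) + b^floor(d/2) - 1."""
    return b ** math.ceil(d / 2) + b ** (d // 2) - 1


BRANCHING = 35  # assumed average number of legal moves per chess position

for depth in range(2, 11, 2):  # depth counted in plies (half-moves)
    full = full_tree_leaves(BRANCHING, depth)
    pruned = alphabeta_best_case_leaves(BRANCHING, depth)
    print(f"{depth:2d} plies: {full:.2e} lines vs. {pruned:.2e} with ideal pruning")
```

At four plies (two moves by each side) that is 1,500,625 lines for the full tree against 2,449 in the best pruned case, and the gap widens with every extra ply. Real engines typically land somewhere between the two, since their move ordering is good but not perfect.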

(I realized only when I was almost finished writing this post that all I had really done was to restate Moravec’s paradox: “it is comparatively easy to make computers exhibit adult level performance on intelligence tests or playing checkers, and difficult or impossible to give them the skills of a one-year-old when it comes to perception and mobility.” Or as Steven Pinker put it, “the main lesson of thirty-five years of AI research is that the hard problems are easy and the easy problems are hard”.)

It’s noteworthy that for computers to truly outclass humans at chess, rigid computation (a la Deep Blue) wasn’t enough. Modern versions of Stockfish incorporate neural networks. It’s through the marriage of neural networks and classic programming that Stockfish beats both Carlsen and Deep Blue. We’ve long understood how to apply brute force to chess; when we also found a way to grant chess AIs a certain element of squishy judgement, they began to beat us handily.
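The division of labor described here, brute-force search plus a judgement about which positions are good, can be sketched in miniature. This is not Stockfish’s code: it is a hypothetical depth-limited negamax search with alpha-beta pruning on a toy take-away game (players alternately remove 1 to 3 stones, and whoever takes the last stone wins). The `rule_of_thumb` function stands in for the neural network, scoring positions where the search stops; here it is a hand-written rule rather than a trained model, and all names are illustrative assumptions.

```python
from typing import Callable

Evaluator = Callable[[int], int]
WIN, DRAW, LOSS = 1, 0, -1
BOUND = 2  # larger in magnitude than any real score


def negamax(stones: int, depth: int, alpha: int, beta: int,
            evaluate: Evaluator) -> int:
    """Score the position for the player to move (WIN, DRAW, or LOSS).

    The search is exhaustive ("brute force") until `depth` runs out;
    beyond that, the position is handed to `evaluate` ("judgement").
    """
    if stones == 0:
        return LOSS  # the opponent just took the last stone
    if depth == 0:
        return evaluate(stones)
    best = -BOUND
    for take in (1, 2, 3):
        if take > stones:
            break
        score = -negamax(stones - take, depth - 1, -beta, -alpha, evaluate)
        best = max(best, score)
        alpha = max(alpha, score)
        if alpha >= beta:
            break  # the opponent would never allow this line, so prune it
    return best


def rule_of_thumb(stones: int) -> int:
    """Stand-in for a learned evaluator: a multiple of 4 loses for the mover."""
    return LOSS if stones % 4 == 0 else WIN


def no_insight(stones: int) -> int:
    """An evaluator with no judgement: every unfinished position is a draw."""
    return DRAW


def best_score(stones: int, depth: int, evaluate: Evaluator) -> int:
    """Run the search from the root with a full alpha-beta window."""
    return negamax(stones, depth, -BOUND, BOUND, evaluate)


for n in (5, 8, 21, 40):
    print(n, best_score(n, 2, rule_of_thumb), best_score(n, 2, no_insight))
```

With the rule-of-thumb evaluator, a two-ply search scores all four sample positions correctly (a win for 5 and 21 stones, a loss for 8 and 40); with no judgement, the same shallow search calls every one of them a draw. Stockfish’s actual search and its NNUE evaluation network are vastly more elaborate, but the division of labor is similar in spirit.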

What does this portend for AI capability in other domains?

More Problems are Starting to Look Like Chess

Not long ago, it was easy to list objectively measurable tasks where people wiped the floor with machines. Recognizing objects in a photograph. Solving common-sense reasoning problems. Passing the bar exam.

These are getting harder to find. Tests like FrontierMath and Humanity’s Last Exam are designed to be extremely difficult even for specialized human experts, but the latest “reasoning” models can solve about a quarter of the problems on each, arguably pushing into superhuman territory.[1]

There are lots of things that machines still can’t do (or can’t do nearly as well as people), but the list is retreating into squishier, hard-to-measure domains. Probably not by coincidence: for a variety of reasons, the easier something is to measure, the easier it is to automate.

This doesn’t necessarily mean that machines will soon be better than us at everything. It means that the frontier is moving into territory that is hard to see, measure, reason about, and make predictions about.

If you look at the bumper crop of tasks for which AIs have been catching up with humans over the last couple of years, arguably they have something in common with chess. The AIs are not obviously better than people at the squishy judgement part, but they make up for it through advantages in sheer speed (the ability to quickly grind through thousands of reasoning steps) and scale (memorizing vast libraries of facts and patterns).

To put it another way: we used to think that human experts set a high standard for achievement on things like the bar exam. Now we’ve found ways to bring the strengths of silicon logic to bear on these problems, and suddenly human performance doesn’t look quite so impressive.

In a few more years will everything start to look like chess, or even arithmetic? Or are some problem domains more closely aligned with human capabilities than the bar exam, and less amenable to decomposition than chess?

There Are Still Domains Where AI Hasn’t Shown Much Promise

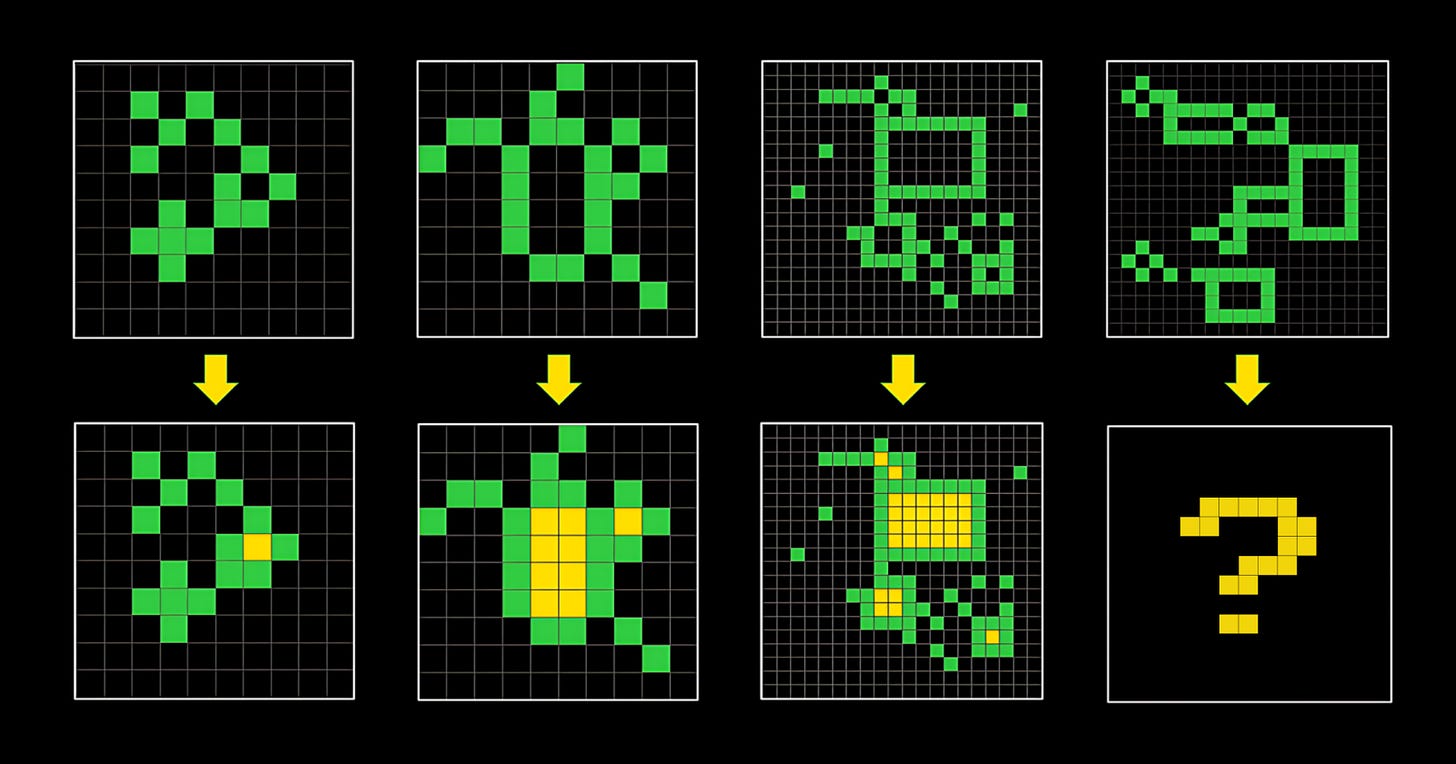

I mentioned that it’s getting harder to find objectively measurable tasks where people beat AIs. The ARC-AGI-1 visual reasoning test is something of an exception.

I wrote about this exam back in December. It’s the one with puzzles like this:

The best reported AI result is from OpenAI’s o3 model in “high compute” mode, which reportedly scores 92% on the public test set. This is impressive, but it’s not that impressive. I’m pretty sure I could beat it, and I am definitely not world-class at these problems; they’re just not that hard. As I noted in December, to achieve this result, OpenAI spent about $2900 per problem, while I can usually get the key idea in 5-10 seconds.

Don’t read too much into this. Costs will continue to come down rapidly, and current AI systems aren’t designed for this particular form of visual input and output; if they were, they’d do better, and they soon may. But it’s at least an objective measurement where we can clearly say that, today, AI is well short of superhuman. We haven’t yet found a way to “turn it into chess”, to render it amenable to a tractable neural net plus lots of brute force.

There are tasks which AIs find even more challenging. You’d never ask today’s systems to raise a child[2] or run a company. Nor can they learn on the job, manage a large project, or make some kinds of judgement calls.

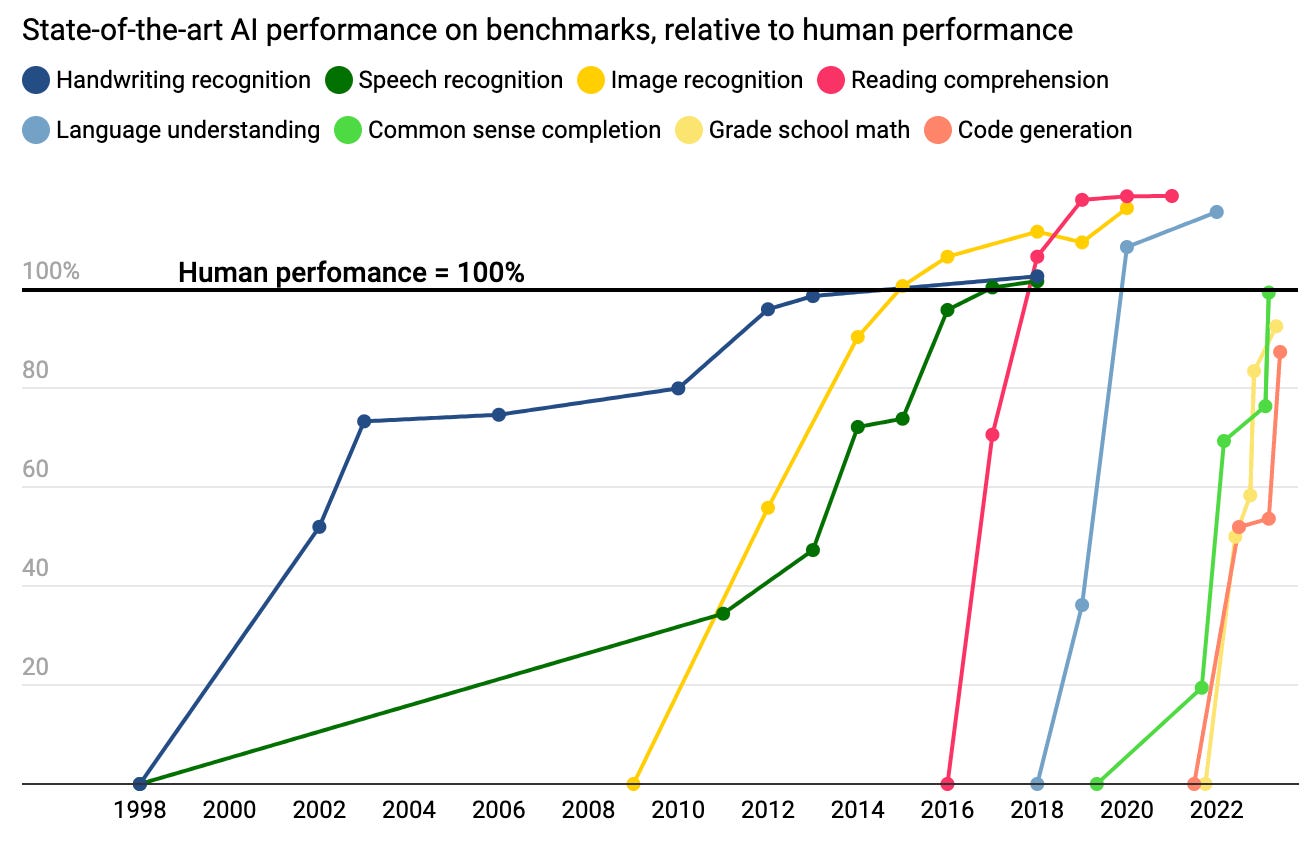

What we don’t know is whether those limitations are fundamental to current AI architectures, or just the next hill to climb. Here’s a chart, from a couple of years back, showing performance on various benchmarks:

Notice how many scores slam from 0 to 100 in a short period of time. Might the same happen for child rearing and CEOing? I suspect those are a different flavor of challenge, but the truth is that we don’t really know. Will we eventually find a way for AIs to leave us behind at all tasks, the way they have for chess? Or are there some problems where human performance is close to a fundamental limit?

I See No Reason To Believe We’re The Ultimate At Anything

I don’t believe there is anything that people can do that machines won’t eventually be able to do, because we are fundamentally machines, made out of atoms. However, this leaves open the possibility that there are some areas where machines will never do much better than us. It might be that in some areas, human ability approaches some fundamental limit, that machines could reach but not exceed.

For instance, I’ve seen it suggested that AIs might not be able to fundamentally accelerate scientific research, because the inherent complexity of the universe limits how well anyone, human or machine, can predict the results of experiments. A planet-scale AI brain in the year 2900 might have to fumble its way forward one lab trial at a time, just as we do. Or it might be that there is an inherent exponential complexity to leaps of insight that puts a cap on the scale of such leaps. It could be that we will never build a machine that outthinks Einstein in the way that a supersonic jet outpaces Usain Bolt.

I doubt it, though. “Human capability approaches a universal limit to how cleverly you can manage a research program” is a coherent hypothesis, but I see no evidence to support it. The same goes for “how brilliantly you can find the underlying principle to explain a set of data”. Evolution didn’t optimize us for these sorts of things. It’s not obvious that the things it did optimize our brains for, such as constructing primitive tools and navigating complex social environments, are all that similar to scientific research. The fluky accomplishments of generational talents like Einstein suggest that there are further heights to be scaled.

Even when evolution does try to optimize in a certain direction, it is subject to the limits of squishy meat bodies. When we build machines, we can marshal resources of a variety and scale unavailable to Earth life. A racecar is faster than a cheetah, hydraulics are stronger than an elephant, a bomb is deadlier than a black mamba. Machines are in many ways less robust than natural life; they are less flexible, subject to breakdowns, and depend on a complex industrial supply chain. But in return, they can access a much broader range of materials and designs.

In summary, it seems unlikely that the human brain represents the ultimate standard of achievement for any particular task, or that it is a fundamentally better match to most challenges of modern life than it is to chess. When we find a way for computers to do something better than people can, it’s not by beating us at our own game, it’s by finding a new way to play the game.

(This also helps explain why, once we find a way to automate something like chess, we tend to say that the software solution isn’t “really thinking” – it’s because the computer isn’t going about the challenge the same way we do. An airplane is faster than Usain Bolt, but it isn’t “really running”.)

What does this tell us about how AI capabilities will advance?

Will AI Keep Getting Better at the Squishy Part?

LLMs[3] have been making more and more tasks look “like chess”, amenable to efficient automation. There are still many tasks they can’t handle, but the boundary keeps moving.

One could interpret this as the unfolding of a single cluster of innovations around LLM architectures and training. In this scenario, the transformer architecture provided a single leap forward in “squishy skills”, and over the last couple of years we’ve just been applying it at increasing scale and in cleverer ways. In that case, we might eventually hit a wall, with tasks like childrearing and corporate strategy waiting for another breakthrough that could be many years away.

Alternatively, perhaps the road from GPT-3.5 to Claude 3.5 and Gemini 2 and o3 has included further progress on the squishy side of things. I’m not sure how to rigorously define this, let alone measure it, but I believe there is a real question here. If we are seeing progress on that side, then even tasks that require deep judgement may soon look “like chess”, where AIs can outdo us, and human capability won’t seem so impressive. If not, then some skills may continue to elude AI for a while, and we’ll say that humans are uniquely suited to those things.

I’m not sure which track we’re on. I wish we had more information about how OpenAI’s latest models have racked up such impressive scores on FrontierMath and Humanity’s Last Exam; that might shed a bit of light. But I’m sure we’ll learn more soon. Whichever skills continue to seem like the best fit for the still-mysterious workings of the human brain will be the last to fall to AI.

[1] Exactly what would constitute “superhuman” here is a tricky question. My understanding is that a typical adult wouldn’t be able to answer a single problem from either exam. However, “typical adult” is not a good baseline for comparison. While I would struggle to name the current leader of a single Canadian province, and the same is probably true for “typical adults” outside of Canada, we wouldn’t say that someone who can name all ten is superhuman.

I think we can agree that if no single person on earth could do better than the AI on a particular exam, even after careful preparation, then the AI is superhuman at that exam. I’m confused as to whether we’ve reached that threshold yet on these two particular tests, but it seems like either we have or we soon will.

(“Careful preparation” could include practicing on problems of the type that appear in the exam, but not on the exact questions.)

[2] Even supposing they could handle the physical side.

[3] Large Language Models, the technology underlying ChatGPT and other modern text-processing AIs.

It seems to me that AI is good at the following:

1) Problems requiring many calculations and evaluations of possible rule-defined scenarios. When the rules get fuzzy to nonexistent, then AI has more difficulty.

2) Problems requiring the aggregation of mass quantities of information to produce a result based on specific conditions or rules. Again, same issue with fuzzy-to-nonexistent rules.

3) Creative works based on a library of pre-existing creative works. To give a very specific example of where AI breaks down: if I asked an AI to make a Beatlesesque song, the idea of ending it with a long "A Day In The Life"-type chord would not have occurred to it had Lennon and McCartney not done it first. Nor could it have developed "post-Beatles"-inspired work like what Jeff Lynne did with ELO without an existing example to begin with.

To sum up, AI is weak at spontaneous, randomish creativity, as well as developing truly novel concepts and ideas. I could see an AI getting better at approximating either, but never truly getting there.

In Japanese martial arts, mastery is considered to have three levels, called Shuhari:

https://en.wikipedia.org/wiki/Shuhari

1) Obey the rules

2) Break the rules

3) Do your own thing (sometimes described as "Make the rules").

Could AI ever get to that third step of mastery? I'm not sure if it could even master the second step completely.

I sometimes wonder how much human intelligence will remain useful because it comes coupled to a human body. (Arguably human bodies are more impressive than our brains? They're *very* adaptable, and you can power them with burritos.)

If you assume that AI is better at all "pure intelligence" tasks than humans, but that AI hasn't invented robots that are as good as human bodies, then what follows? Does human intelligence remain vital because it has a high-bandwidth connection to human muscles?