If AGI Means Everything People Do... What is it That People Do?

And Why Are Today’s "PhD" AIs So Hard To Apply To Everyday Tasks?

“Strange how AI may solve the Riemann hypothesis[1] before it can reliably plan me a weekend trip to Boston” – Jack Morris

There’s a huge disconnect between AI performance on benchmark tests and its applicability to real-world tasks. It’s not that the tests are wrong; it’s that they only measure things that are easy to measure. People go around arguing that AIs which can do everything people can do may arrive as soon as next year[2]. And yet, no one in the AI community has bothered to characterize what people actually do!

The failure to describe, let alone measure, the breadth of human capabilities undermines all forecasts of AI progress. Our understanding of how the world functions is calibrated against the scale of human capabilities. Any hope of reasoning about the future depends on understanding how models will measure up against capabilities that aren’t on any benchmark, aren’t in any training data, and could turn out to require entirely new approaches to AI.

I’ve been consulting with experts from leading labs, universities and companies to begin mapping the territory of human ability. The following writeup, while just a beginning, benefits from an extended discussion which included a senior staff member at a leading lab, an economist at a major research university, an AI agents startup founder, a senior staffer at a benchmarks and evals organization, a VC investing heavily in AI, the head of an economic opportunity nonprofit, and a senior technologist at a big 5 tech company.

What Is the Gamut of Work?

Most contemplated impacts of AI involve activities that, when done by people, fall under the heading of paid work. Advancing science? Generating misinformation? Answering questions? Conducting a cyberattack? These are all things that people get paid to do.

Of course, if and when an AI acquires the skills needed for some job, that doesn’t necessarily mean that we should or would give that job to the AI. People may prefer to talk to a human therapist, or to know that the song they’re listening to came from a human throat. And we may choose to preserve some jobs because people derive meaning from doing that work. But we can still use jobs as an intuition pump to begin enumerating the remarkable range of human capabilities.

Studies of AI performance in economically relevant activities center on a few specific jobs and tasks, such as writing computer code. But there is enormous variety in the kinds of things people do. Just to name a few: therapist, tutor, corporate lawyer, advice nurse, social worker, call center worker, salesperson, journalist, graphic designer, product designer, engineer, IT worker, research analyst, research scientist, career coach, manager, and CEO.

Of course, a complete list would go on for pages. And I’ve only mentioned paid jobs, with no substantial physical component. What I’ve tried to do here is to sample the range of non-physical jobs, touching on different kinds of work that require different sorts of skills. Even so, I’m sure there are gaping holes, and I’d appreciate help in filling them in. What jobs require capabilities or aptitudes (not just domain knowledge) not touched on here?

In any case, simply listing jobs doesn’t tell us anything about AI timelines. The next step is to ask: could current AIs do these jobs unassisted? Where would they struggle? Again, the goal is not to hasten human obsolescence; it’s to shine a light on capabilities that are missing from current AIs.

Where Might Current AIs Struggle?

Why, exactly, can’t AI plan a weekend trip? Where would it go wrong? And what other tasks are beyond the reach of current systems? Here are some real-world challenges that current and near-future AIs seem likely to struggle with.

Building a successful business. Mustafa Suleyman, co-founder of DeepMind and now CEO of Microsoft AI, has proposed a “new Turing test”: turning $100,000 in seed capital into $1,000,000 in profit. Some challenges for AI:

Judgement: selecting a good, differentiated idea. Deciding when to stay the course and when to pivot. Setting priorities and allocating resources.

Hiring: interviewing candidates and selecting the best fit for a job.

Avoiding being cheated / scammed: it might be easy to scam the AI in various ways, especially if it were “outed” as an AI.

Managing long-term context: keeping track of everything that has taken place over the course of the business, learning from experience, and juggling activities that take place on different timelines.

Planning a child’s summer activities (proposed by Kathy Pham). This requires understanding their interests, sorting out camp availability, and coordinating with the schedules of friends, family, and other caregivers. Some ways this might go wrong:

Consultation and input management: managing the conversations with everyone involved – what information to ask for, how to ask, when to follow up. When to make assumptions and when to verify. Maintaining coherence over an asynchronous conversation.

Detail-orientation and complexity: tracking hundreds of constraints (camp location, start times, end times, ages accepted, vacation schedules, etc.); proposing solutions that optimize for the factors the clients care about most, without making any errors.

Writing a high-quality blog post about a specified topic.

Insight: synthesizing facts into a crisp, memorable insight that brings the detailed picture into focus for the reader.

Engaging writing: identifying an anchor – such as a topical policy question, or a story from history – that naturally fits with the ideas being presented. Organizing the post around that theme in a coherent, appealing, and natural-sounding way.

Support specialist at an enterprise software company.

Learning on the job: teaching itself to recognize and resolve common problems, and to navigate the knowledge base and other internal resources. Ability to learn about and remember new features and bugs as they are introduced (and resolved) on an ongoing basis.

Getting help: deciding when to escalate, who to get help from, and exactly what help to ask for (making efficient use of the helper’s time).

I believe that current AIs would struggle in all of these areas. This leads to the question of why: which of the required capabilities do existing models lack?

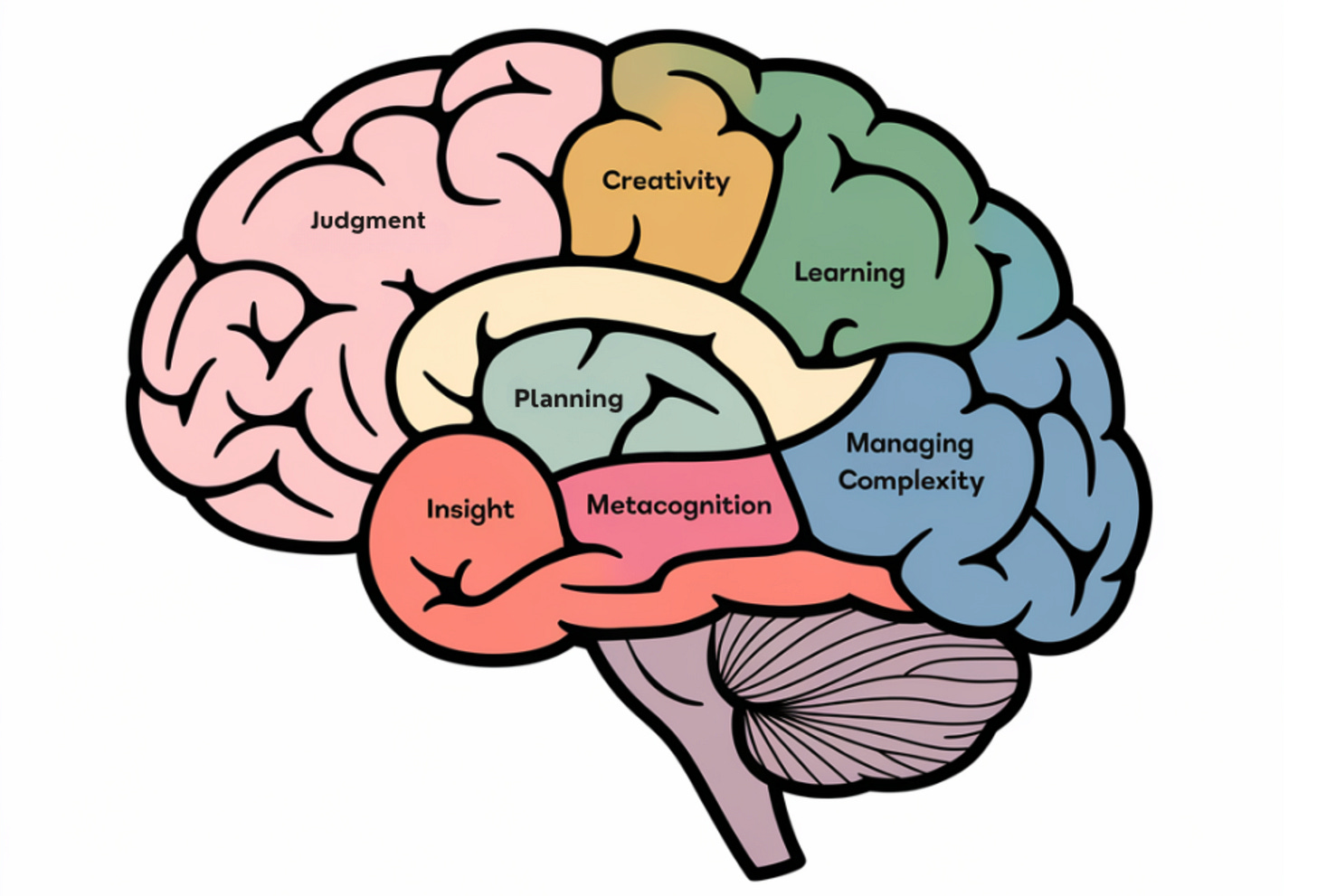

What Are The Key Missing Capabilities?

Progress in AI is often aimed at improving specific capabilities, such as working with more information at one time (increasing the “context window”), or reasoning through a complex task. Here are some capabilities that came up repeatedly when our group talked about where AIs would struggle with real-world tasks.

Managing complexity: Holding the details and constraints of a complex project “in its head” when breaking the project into steps, and again while carrying out those steps. For instance: maintaining characters and detailed plot continuity while writing a novel, or writing code that meshes with a large existing codebase.

(Ethan Mollick: “I have spent a lot of time with AI agents (including Devin and Claude Computer Use) and they really do remain too fragile & not "smart" enough to be reliable for complicated tasks.”)

This requires recognizing that much information is not relevant for any particular subtask. David Crawshaw:

Avoid creating a situation with so much complexity and ambiguity that the LLM gets confused and produces bad results. This is why I have had little success with chat inside my IDE. My workspace is often messy, the repository I am working on is by default too large, it is filled with distractions. One thing humans appear to be much better than LLMs at (as of January 2025) is not getting distracted.

Metacognition and dynamic planning: Stitching together different skills, including high- and low-level planning, to accomplish a complex task. Monitoring conditions throughout the course of a project, and exercising good judgment about when to proceed according to plan and when to adjust course. This should reflect both internal conditions (failing to make progress) and external conditions (the situation has changed).

Metacognition also includes understanding its own strengths and weaknesses, what it knows and doesn’t know. Accurately evaluating and reporting its confidence in a fact or judgement. Seeking outside information / help where appropriate. Noticing when it is getting stuck, when it is repeatedly making the same error, or when external conditions have changed.

Learning and memory: “Learning the ropes” of a new job, or assimilating a user’s preferences and circumstances. Incorporating this information (continuous sample-efficient learning) so that the model can act on it in the future as effectively as if it had been in the training data.

(As Soren Larson puts it: “Agents saving users time is downstream of context availability not capability” – like a personal assistant in their first day on the job, an AI agent may not be of much use until it can learn your preferences.)

Leveraging external information: Making effective use of large unstructured information pools: a user’s email, a corporate intranet, the Internet. Intuiting what information might be available; weighting sources by credibility; ignoring extraneous information.

Judgement: Setting priorities and allocating resources. Making good high-level decisions, such as which research avenues to explore. Deciding when and how to push back in a discussion or negotiation; reconciling different stakeholders’ conflicting preferences.

Creativity and insight: Coming up with novel insights, compelling explanatory metaphors, and non-trite storytelling.

Are These Serious Challenges to AI?

That’s a nice list of capabilities. Does it tell us anything useful about the timeline for AGI?

In our discussion, there were a lot of questions about how fundamental these gaps are. For instance, would current models really struggle to “synthesize facts into a crisp, memorable insight”? Could this be addressed with a well-designed prompt? Perhaps some external scaffolding would be sufficient: ask the model for 20 separate insights, and then ask it to pick the best one? Maybe we need to wait for the next generation of models, trained on more data. Or perhaps some fundamental architectural advance will be needed.
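The scaffolding idea in the paragraph above (ask for 20 insights, then ask for the best one) can be sketched in a few lines of Python. This is only an illustration under stated assumptions: `generate` and `pick_best` are hypothetical stand-ins for whatever model calls you would actually make, not functions from any real library, and the stub generator and length-based judge below exist only so the sketch runs on its own.

```python
import itertools
from typing import Callable, List


def best_of_n(
    generate: Callable[[str], str],               # hypothetical: prompt -> one candidate insight
    pick_best: Callable[[str, List[str]], int],   # hypothetical: (topic, options) -> index of winner
    topic: str,
    n: int = 20,
) -> str:
    """Ask for n candidate insights, then ask a judge to choose one of them."""
    prompt = f"State one crisp, memorable insight about: {topic}"
    candidates = [generate(prompt) for _ in range(n)]
    # Drop exact duplicates while preserving order, so the judge sees distinct options.
    unique = list(dict.fromkeys(candidates))
    index = pick_best(topic, unique)
    if not 0 <= index < len(unique):
        raise ValueError("judge returned an out-of-range index")
    return unique[index]


def make_stub_generator(canned: List[str]) -> Callable[[str], str]:
    """Stand-in for a model call: cycles through canned answers, ignoring the prompt."""
    cycle = itertools.cycle(canned)
    return lambda prompt: next(cycle)


def longest_judge(topic: str, options: List[str]) -> int:
    """Stand-in for a judging model call: simply prefers the longest option."""
    return max(range(len(options)), key=lambda i: len(options[i]))


if __name__ == "__main__":
    stub = make_stub_generator(["short take", "a somewhat longer take", "mid take"])
    print(best_of_n(stub, longest_judge, "AI benchmarks", n=5))
```

Note that the sketch only moves the open question: the result is only as good as the judge, and picking the most insightful candidate is itself the kind of judgement the list above suggests current models may lack.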

You can ask similar questions about any item on the list. What will it take for models to assimilate new information on the fly? To successfully coordinate activities that take place on different timelines? To know when to ask for help?

We didn’t get to dive into every dimension listed above, but there was broad consensus that one of the biggest barriers – one of the last sandbags holding back the tide of AI exceeding human capabilities – will be learning and memory. The expectation was that continuous, sample-efficient learning and sophisticated memory are important requirements for many practical tasks, and that meeting them will take significant architectural advances.

Benchmarks like FrontierMath and Humanity’s Last Exam are very difficult for humans, but don’t capture these fuzzier skills. We don’t know of benchmarks that require, for instance, insightful writing, judgement, or continuous learning.

Open Philanthropy has begun funding work on benchmarks to measure capability at real-world tasks, but the field is in very early days. We hope to explore these questions further. How can we usefully measure an AI’s ability to ask clarifying questions, or to make judgement calls as it attempts to build a business? How can we identify leading indicators for progress in these areas?

We Cannot Predict What We Cannot Describe

People love to speculate as to when AIs will outmatch humans at anything we might want them to do (or fear that they might do). I continue to be surprised at how disconnected these conversations are from any discussion of what those things are and what “doing them” entails. The next time someone predicts that we will soon have AI that can do anything “a human can do”, ask them what they think a human can do. You may find that they are only considering capabilities that are easily measured.

AIs keep racing to 100% on benchmarks, because they are easiest to train on tasks where success is easy to measure. For a complete picture of AI timelines, we need to shed some light on those capabilities that are not easily measured. Please share your answers to this question: what tasks would pose the greatest challenge for current AIs, and why?

Thanks to Carey Nachenberg, Rachel Weinberg, Taren Stinebrickner-Kauffman, and all the participants in our dinner conversation.

[1] A major unsolved problem in mathematics.

[2] For instance, Anthropic CEO Dario Amodei recently put it quite plainly:

An AI model that can do everything a human can do at the level of a Nobel laureate across many fields... my guess is that we’ll get that in 2026 or 2027.

As a mathematician, I am annoyed by the common assumption that proving the Riemann hypothesis *doesn't* require managing complexity, metacognition, judgement, learning+memory, and creativity/insight/novel heuristics. Certainly, if a human were to establish a major open conjecture, in the process of doing so they would demonstrate all of these qualities. I think people underestimate the extent to which a research project (in math, or in science) differs from an exam question that is written by humans with a solution in mind.

Perhaps AI will be able to answer major open questions through a different, more brute-force method, as in chess. But chess is qualitatively very different from math: to play chess well requires much greater calculational ability than many areas of math do. (At the end of the day, chess has no deep structure.)

Also, prediction timelines for the Riemann Hypothesis or any specific conjecture are absurd. For all we know, we could be in the same situation as Fermat in the 1600s, where to prove that the equation a^n + b^n = c^n has no positive integer solutions for n > 2 you might need to invent modular forms, étale cohomology, deformation theory of Galois representations, and a hundred other abstract concepts that Fermat had no clue about. (Of course, there is likely some alternate proof out there, but is it really much simpler?) It is possible that we could achieve ASI and complete a Dyson sphere before all the Millennium problems are solved; math can be arbitrarily hard.

Intelligence is substrate agnostic. Machines can demonstrate intelligence and perform any task a human can perform. (To those who disagree: tell me, where exactly is the intelligence in your brain?)

So what is the difference between a human and AGI? AGI isn't human, humans aren't AGI. AGI can't interact with the universe in a human-like way because it doesn't have the same experience(s).

It's like asking you to become a fish for a week. You can replicate a lot of things a fish can do, but you will never be a fish.