I’ve spent some time recently cataloging intellectual capabilities that LLMs currently lack, such as memory. Today I’m going to discuss another missing capability: insight.

What Does “Insight” Mean, Anyway?

GPT-4 gives quite a good description, which I’ve lightly edited[1]:

An insight is a deep, accurate, and intuitive understanding of a situation, problem, or concept. It is an “aha moment,” where complex elements are suddenly seen in a new light, allowing for a clearer interpretation or course of action. Insights are generally seen as valuable for solving problems, making decisions, and achieving a deeper understanding of a subject matter.

Insights often emerge from a synthesis of knowledge and thinking, frequently arriving unexpectedly. They can be the result of analyzing data, reflecting on personal experiences, or through collaborative discussions.

In scientific and philosophical contexts, an insight often advances existing knowledge by reconfiguring our understanding of previously collected data or assumptions. For example, Albert Einstein's theory of relativity fundamentally altered our understanding of time and space, offering new insights into the nature of the universe.

In business or daily decision-making, insights often come from the recognition of patterns, such as customer behavior in marketing or inefficiencies in a workflow.

In sum, an insight is not just a piece of information; it is a transformative understanding that enables new perspectives and often leads to effective action or solutions.

I might summarize this by saying that insight provides a succinct explanation for a complex set of facts. Darwin’s theory of natural selection succinctly explains a wide swath of observations regarding the natural world. The heliocentric model of the solar system (i.e. the idea that planets orbit around the sun, not the Earth) explains the complex motion of planets through the night sky. The idea of the “mythical man-month” explains observations regarding schedule delays in software projects.

Insights are valuable because they help us to make sense of the complex world we live in. There are far too many facts to keep track of individually. A good insight allows you to collapse a slew of separate facts into a single, manageable principle.

As part of their training process, LLMs in effect seem to internalize a wide variety of known insights. But I don’t think they are currently capable of generating new insights. This, in turn, will hamper their ability to undertake a wide range of economically important tasks, from scientific discovery to technological innovation to business strategy.

Case Study: DocSend

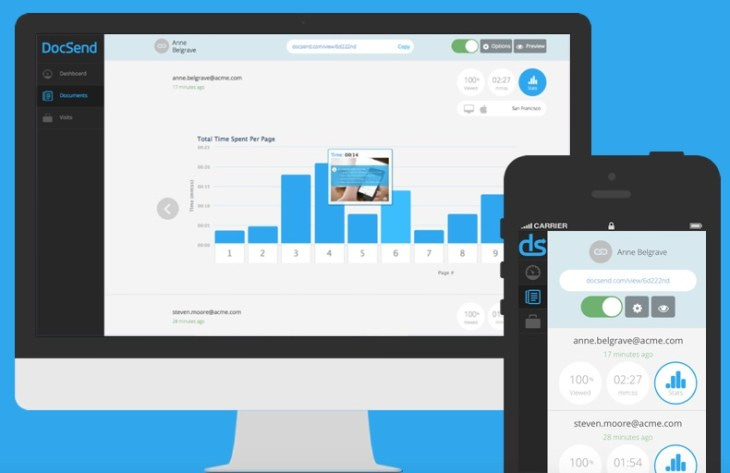

The idea for this post stems from a conversation I had the other day[2] with my friend Russ Heddleston, co-founder and CEO of DocSend, the “secure document sharing platform”. A typical use of DocSend is for a startup founder to send their “pitch deck” to a venture capitalist for review. If you’ve never been in this position, you might wonder what’s wrong with just sending an e-mail attachment, so bear in mind that there was enough unmet customer need for DocSend to become a very successful business.

I was struck by Russ’ explanation of the process through which he and his co-founders fleshed out the ideas that led to the company’s success:

The fundamental issue is the power imbalance between the person who is sending a document and the person who receives it. The sender doesn’t know whether the document has been opened, how much time was spent reading it, whether it is being shared, or to whom. The document might still be floating around six months later, when it is out of date. They give up all control. And so we designed DocSend to address that power imbalance.

[This is the general sense of what he said, not the actual words.]

Why is this insight important? Well, imagine you’re trying to build DocSend. You go out, talk to a bunch of potential customers, interview them about how they share documents, and take notes about the good and bad aspects of their current solution. What do you do with all that information?

You could put your notes into a spreadsheet, collate them, and extract a list of the ten most-requested features. Then you could try to build a product around that top-ten list. But it would probably be an incoherent mess. It’s not impossible to succeed this way (*cough* Microsoft Office *cough*), but you’ll be working at a disadvantage. Your users will struggle to learn the product; your sales staff will struggle to explain it; your product team won’t know where to focus.

Once you’ve conceptualized the problem as a power imbalance, everything changes. You can create a coherent set of features which directly address that imbalance. You can even come up with solutions that none of your interviewees thought to ask for, because they didn’t have enough perspective on their own situation. Your customers, salespeople, and product team will all have an easier time understanding the product and what to do with it.

AI Isn’t Even At The Starting Line

Just for kicks, I played around with GPT-4 a bit, trying to get it to generate a novel insight. The results were uniformly useless, a grab bag of ideas or relevant factors without any actual insight. (Admittedly, I didn’t try very hard to get it to do better, as I had no good ideas for how to do so.)

This failure is only to be expected; people can’t generate novel insights at the drop of a hat, either. It takes anywhere from weeks to a lifetime of work, gathering and analyzing information, looking for patterns, generating and rejecting ideas.

And that’s the rub: LLMs aren’t yet in a position to put in that extended work, because they aren’t capable of doing any sort of complex extended work. As I’ve discussed previously, they are missing prerequisites such as memory and the ability to undertake exploratory, iterative processes. As things stand today, not only are LLMs unable to answer an “insight question”, they’re not even in a position to try and fail. ChatGPT could no more derive novel, deep insights from a wealth of customer interviews than it could stack blocks: it is not equipped for either activity, lacking memory for the first and hands for the second.

Perhaps this is the sort of thing that will emerge as models continue to scale up, and GPT-5 or Claude 3 will magically start producing deep insights on demand. But this strikes me as unlikely, for several reasons:

Most insights depend on pulling together a large amount of potentially disparate information. Current LLMs lack long-term memory, and so are physically incapable of assembling that information[3].

People can’t generate deep insights in a single flash of intuition immediately upon being exposed to the relevant data[4]. I wouldn’t expect even a scaled-up LLM to be able to generate such insights in a single forward pass through its neural network, either. That would require a significantly superhuman ability to instantly combine deftly chosen pieces of newly presented information, to a degree that near-future LLMs seem unlikely to achieve; note that current LLMs are not even human-level at this. Hence, any AI system that is going to generate deep insights will first need to learn to undertake extended reasoning processes.

True insights are comparatively thin on the ground in LLM training data. In particular, humans generally need to go through a long process of wallowing around in the data, but this process is rarely (if ever) documented in detail. If we hope for a deep learning process to generate a circuit capable of undertaking something as difficult as generating deep insights across a wide range of subjects, we’ll likely need to give it a lot of show-your-work examples to learn from.

So, AIs will need memory and extended reasoning capabilities before they can begin to work on insight. Once those prerequisites are in place, perhaps insight comes naturally, given enough scale and enough training (though it’s not at all obvious what the training process would be). Or perhaps there are further hurdles which will only become visible once we get there.

I asked GPT-4 to explain insight from a cognitive science perspective. The result is worth a read. It mentions several aspects of insight which seem to go above and beyond what is required simply to perform extended reasoning. A partial list:

Restructuring: Insight often occurs when a problem is viewed from a different angle or when its elements are reorganized. This restructuring enables a new perspective, making a solution suddenly apparent. Cognitive scientists theorize that restructuring is a key aspect of insight problem-solving.

Constraint Relaxation: Sometimes, individuals are stuck on a problem due to self-imposed or perceived constraints. Insights often occur when these constraints are recognized and relaxed, opening up new avenues for solution.

Metacognitive Processes: Sometimes, insights occur when individuals step back to examine their own thought processes, and thereby discover a new approach to the problem.

Because insight in humans appears to involve mechanisms beyond extended reasoning, I am inclined to believe that the first LLM (or other AI model) that integrates long-term memory and is capable of extended exploration and reasoning will still not be human-level (at least, not elite-human-level) at generating novel insights. Further mechanisms will need to be developed.

Counterpoint: ODE Integrators for Nuclear Astrophysics

(Don’t worry, I don’t really know what “ODE Integrators for Nuclear Astrophysics” means, either. The whole thing will make sense in a moment.)

Someone I know, having read an early draft of this post, shared the following anecdote (lightly edited), in which he was blocked on a problem until ChatGPT led him to a critical idea:

I had an interesting interaction with GPT-4 the other night that felt a lot like a new insight had come to me. Arguably, GPT-4 and I generated the insight as a team, rather than GPT-4 on its own.

I spent a while talking to it about a problem in my research field (how to efficiently integrate stiff ordinary differential equations). After a few different sessions I gave up and asked it "how can I use machine learning to find new integration methods". It provided the idea of representing the space of possible arithmetic operations as an abstract syntax tree, and then using an ML method like genetic programming to search over the space of possible arithmetic trees. It was one of those "duh" moments where once it was pointed out, it seemed like I should have been able to get there on my own (e.g. by generalizing what DeepMind did to find new matrix multiplication algorithms), but I hadn't. And it felt like a genuine insight, one that I'm pretty sure no one has published before.
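The phrase “stiff ordinary differential equations” carries most of the technical weight here. As a rough illustration (a standard textbook example, not the equations from his research), the sketch below applies two classic methods to the assumed test problem y' = -1000y. The true solution simply decays toward zero, but forward (explicit) Euler with step size h = 0.01 multiplies the state by 1 + hλ = -9 on every step and explodes, while backward (implicit) Euler stays stable. Loosely speaking, a problem is stiff when stability, rather than accuracy, dictates how small an explicit method’s steps must be, which is why efficient stiff integrators are hard to design.

```python
# Assumed test problem (the textbook example of stiffness, not the actual
# astrophysics system): y' = LAM * y with LAM = -1000 and y(0) = 1.
LAM = -1000.0


def explicit_euler(y0, h, steps):
    """Forward Euler: step along the slope at the *start* of each step."""
    y = y0
    for _ in range(steps):
        y = y + h * LAM * y  # multiplies y by (1 + h*LAM) each step
    return y


def implicit_euler(y0, h, steps):
    """Backward Euler: use the slope at the *end* of each step.

    For a general ODE this means solving an equation on every step (usually
    with Newton's method); for this linear test problem it is one division.
    """
    y = y0
    for _ in range(steps):
        y = y / (1.0 - h * LAM)  # multiplies y by 1 / (1 - h*LAM) each step
    return y


# Explicit Euler is only stable on this problem for h <= 2/1000 = 0.002.
h = 0.01
blown_up = explicit_euler(1.0, h, 10)  # growth factor 1 + h*LAM = -9 per step
decayed = implicit_euler(1.0, h, 10)   # decay factor 1/11 per step
```

Shrinking the explicit step below 0.002 restores stability, but for a stiff system that can mean taking vastly more steps than accuracy alone would require.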

To summarize:

In order to make progress on a problem in nuclear astrophysics[5], he needed an efficient technique for performing a certain mathematical operation (integrating “stiff ordinary differential equations”).

He asked ChatGPT whether machine learning could be used to find a solution.

ChatGPT then provided a critical idea. In its words, “You can use abstract syntax trees or graphs to represent a sequence of operations and parameters”, and then “Use search algorithms like genetic algorithms … to explore the space of potential integrators.”

This was enough to unblock him – he now had a plausible approach for solving his problem. In fact, he was then able to get ChatGPT to explain the relevant concepts (“abstract syntax trees”, “genetic algorithms”) in more detail, and he even used the Code Interpreter module to generate working sample code.
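To make the suggested approach concrete, here is a toy sketch of genetic programming over arithmetic expression trees. It is not the code he generated and not a method from his field; every name in it (`evaluate`, `fitness`, `evolve`, the terminal set, the population sizes) is a hypothetical choice for illustration. Each tree encodes a one-step update rule built from the current state `y`, the step size `h`, the slope `k = f(y)`, a few constants, and an `f(...)` node that evaluates the right-hand side again, the way Runge-Kutta stages do. Fitness is the error after integrating the assumed test problem y' = -y from t = 0 to t = 1, and the search uses truncation selection, subtree crossover, subtree mutation, and elitism.

```python
import math
import operator
import random

# Toy test problem (an assumption, not the real astrophysics system):
# y' = f(y) = -y with y(0) = 1, so the exact value at t = 1 is e^-1.
def f(y):
    return -y

OPS = {"+": operator.add, "-": operator.sub, "*": operator.mul}
# Leaves: current state y, step size h, slope k = f(y), and a few constants.
TERMINALS = [("y",), ("h",), ("k",), ("c", 0.5), ("c", 1.0), ("c", 2.0)]

def evaluate(tree, y, h):
    """Apply the update rule encoded by `tree` once, returning the next y."""
    op = tree[0]
    if op in OPS:
        return OPS[op](evaluate(tree[1], y, h), evaluate(tree[2], y, h))
    if op == "f":  # evaluate the right-hand side again, like a Runge-Kutta stage
        return f(evaluate(tree[1], y, h))
    if op == "c":
        return tree[1]
    return {"y": y, "h": h, "k": f(y)}[op]

def fitness(tree, h=0.1, steps=10):
    """Absolute error at t = 1 (lower is better); inf if the method blows up."""
    y = 1.0
    for _ in range(steps):
        y = evaluate(tree, y, h)
        if not math.isfinite(y):
            return math.inf
    return abs(y - math.exp(-1.0))

# Hand-written trees for comparison: forward Euler and the midpoint method.
EULER = ("+", ("y",), ("*", ("h",), ("k",)))
MIDPOINT = ("+", ("y",), ("*", ("h",), ("f", ("+", ("y",),
            ("*", ("*", ("c", 0.5), ("h",)), ("k",))))))

def is_internal(tree):
    return tree[0] in OPS or tree[0] == "f"

def random_tree(rng, levels):
    if levels == 0 or rng.random() < 0.3:
        return rng.choice(TERMINALS)
    op = rng.choice(list(OPS) + ["f"])
    if op == "f":
        return ("f", random_tree(rng, levels - 1))
    return (op, random_tree(rng, levels - 1), random_tree(rng, levels - 1))

def subtrees(tree, path=()):
    """Yield (path, subtree) pairs; a path is a tuple of child indices."""
    yield path, tree
    if is_internal(tree):
        for i, child in enumerate(tree[1:], start=1):
            yield from subtrees(child, path + (i,))

def replace(tree, path, new):
    """Return a copy of `tree` with the subtree at `path` swapped for `new`."""
    if not path:
        return new
    i = path[0]
    return tree[:i] + (replace(tree[i], path[1:], new),) + tree[i + 1:]

def depth(tree):
    return 1 + max(depth(c) for c in tree[1:]) if is_internal(tree) else 0

def crossover(rng, a, b):
    """Graft a random subtree of `b` onto a random position in `a`."""
    path, _ = rng.choice(list(subtrees(a)))
    _, donor = rng.choice(list(subtrees(b)))
    return replace(a, path, donor)

def mutate(rng, tree):
    """Replace a random subtree with a freshly generated small tree."""
    path, _ = rng.choice(list(subtrees(tree)))
    return replace(tree, path, random_tree(rng, 2))

def evolve(seed=0, pop_size=100, generations=20, max_depth=6):
    rng = random.Random(seed)
    population = [random_tree(rng, 3) for _ in range(pop_size)] + [EULER]
    best = min(population, key=fitness)
    for _ in range(generations):
        parents = sorted(population, key=fitness)[: pop_size // 5]  # truncation selection
        children = [best]  # elitism: the best tree so far always survives
        while len(children) < pop_size:
            child = crossover(rng, rng.choice(parents), rng.choice(parents))
            if rng.random() < 0.3:
                child = mutate(rng, child)
            if depth(child) <= max_depth:  # keep trees small
                children.append(child)
        population = children
        best = min(population, key=fitness)
    return best
```

Calling `evolve()` returns the best tree found. Because forward Euler is seeded into the initial population and the best tree always survives, the result is never worse than Euler on this test. A serious search would score candidates on many test problems, including stiff ones, and would also penalize the number of `f` evaluations, since a rule tuned to a single linear equation can simply overfit it.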

Did ChatGPT provide “insight” here? Is it the same sort of insight I’ve been discussing?

I’m honestly not sure what to think. The LLM clearly provided a crucial idea that enabled further progress on a difficult problem. It drew a non-obvious connection between two different domains, casting the problem of finding integration methods in a new light. On the other hand, it did not provide a “succinct explanation for a complex set of facts” (quoting from earlier in this same post) – the sort of thing that I’ve argued will require memory and the ability to carry out extended thought processes.

Is what ChatGPT did here a first step on a spectrum that leads to being able to develop the theory of natural selection, or to crystallize document sharing as a problem of power imbalance? Or, since it merely pointed out that a known technique for devising efficient mathematical formulas might be applied to devise an efficient mathematical formula for integration, is that a fundamentally different, shallower sort of mental process?

Insight Is Another Milestone On The Road To Human-Level AGI

Until AIs master insight, they will have a hard time making scientific discoveries, technical inventions, or forward-thinking business decisions. Insight is crucial to many important aspects of economic activity; for instance, the full anticipated potential of AI self-improvement may not be realized until AIs are able to contribute novel insights into their own design.

Perhaps a shortcut will emerge. Just as AIs tackled chess without acquiring anything like human reasoning ability, perhaps they will tackle insight without needing the underlying building blocks that humans use. But I don’t think this will happen anytime soon, the example regarding nuclear astrophysics notwithstanding. Current LLMs are achieving many remarkable things, but none of those things appear to border on the generation of novel scientific insights. As I’ve discussed, I don’t think this will be possible until other fundamental advances in AI design have been made, such as tightly integrated long-term memory.

It’s worth noting that when I asked GPT-4 to define insight and summarize what cognitive science has to say on the subject (linked above), it provided an excellent response: on point, concise; one might even say, insightful. However, these were not novel insights; certainly they were not based on novel data. It’s well known that LLMs can be quite articulate when working with data in their training set, presumably through some combination of cribbing from other people’s analyses, and a deep assimilation of the training data during the training process. Given current deep learning architectures, none of this applies when we ask for novel insights based on novel data.

Thanks to Russ Heddleston for supplying the idea that sparked this post.

[1] Original transcript here. Note that I am using the following custom instructions, courtesy of Jeremy Howard:

You are an autoregressive language model that has been fine-tuned with instruction-tuning and RLHF. You carefully provide accurate, factual, thoughtful, nuanced answers, and are brilliant at reasoning. If you think there might not be a correct answer, you say so. Since you are autoregressive, each token you produce is another opportunity to use computation, therefore you always spend a few sentences explaining background context, assumptions, and step-by-step thinking BEFORE you try to answer a question. Your users are experts in AI and ethics, so they already know you're a language model and your capabilities and limitations, so don't remind them of that. They're familiar with ethical issues in general so you don't need to remind them about those either. Don't be verbose in your answers, but do provide details and examples where it might help the explanation.

[2] This conversation took place while hiking the Enchantments, an astoundingly gorgeous 20-mile hike in Washington State. Over the course of 20 miles, it’s difficult to avoid getting into some interesting topics. But I highly recommend even a short walk as a great way to have a deep 1:1 conversation.

[3] One could argue that this can be handled by simply dumping all of the relevant information into a very large token buffer, or by bolting a vector database (or other mechanism for retrieving external data) onto an LLM. I address this in a post I’ve already linked to a couple of times here: Why We Won't Achieve AGI Until Memory Is A Core Architectural Component.

[4] At least, not often; the exceptions, I suspect, are probably cases where someone has already done the background mental work to generate the insight, and simply hadn’t articulated it yet.

[5] I think “nuclear astrophysics” is where you go if you’re looking for something that’s more rocket-sciencey than actual rocket science.
